Stock Analysis Log

| − | ===Aug. 27 -- Sept. 2=== | + | Link to Project Page: [[Stock Analysis]] |

| + | |||

| + | ===Week 1: (Aug. 27 -- Sept. 2)=== | ||

(1.5 hours) All group members discussed how to target what data we should find and what property of a home we find important. | (1.5 hours) All group members discussed how to target what data we should find and what property of a home we find important. | ||

| − | + | ||

| − | ===Sept. 3 -- Sept 9=== | + | ===Week 2: (Sept. 3 -- Sept 9)=== |

(1.0 hours) Brandt, Keith, and Jessica met with Prof. Fehr to review the project and receive further guidance. | (1.0 hours) Brandt, Keith, and Jessica met with Prof. Fehr to review the project and receive further guidance. | ||

| − | ===Sept. 10 -- Sept. 16=== | + | ===Week 3: (Sept. 10 -- Sept. 16)=== |

(3.0 hours) Brandt and Keith further refined the project: investigated data scraping, how to add data to a database, and sites which are easily scrappable and have the data were looking for. Keith downloaded PyCharm so that we could begin experimenting with our ideas using python. | (3.0 hours) Brandt and Keith further refined the project: investigated data scraping, how to add data to a database, and sites which are easily scrappable and have the data were looking for. Keith downloaded PyCharm so that we could begin experimenting with our ideas using python. | ||

| Line 12: | Line 14: | ||

(9/15 Keith: 2 hrs.) Worked towards scraping data from google finance. Google protects financial information which will be a challenge. Currently working on a work around. | (9/15 Keith: 2 hrs.) Worked towards scraping data from google finance. Google protects financial information which will be a challenge. Currently working on a work around. | ||

| − | ===Sept. 17 -- Sept. 23=== | + | ===Week 4: (Sept. 17 -- Sept. 23)=== |

| + | (2 hours) Brandt, Keith, and Jessica met to discuss and refine the project idea as well as flesh out the wiki. | ||

| + | (2 hours) Jessica created and polished the presentation. | ||

| − | ===Sept. 24 -- Sept. 30=== | + | ===Week 4: (Sept. 24 -- Sept. 30)=== |

(3 hours) Brandt compiled data and began to join the data in excel. The result was uploaded to excel. I investigated uploading these tables of data to a SQL server, and created accounts on AWS and Microsoft AZURE, however had issues figuring out how to upload the data. | (3 hours) Brandt compiled data and began to join the data in excel. The result was uploaded to excel. I investigated uploading these tables of data to a SQL server, and created accounts on AWS and Microsoft AZURE, however had issues figuring out how to upload the data. | ||

| Line 21: | Line 25: | ||

(3 hours) Keith researched data scraping, ran code which successfully scrapped data through both Yahoo and Quandl, and discussed with Brandt on how we can load our data into a cloud SQL server. | (3 hours) Keith researched data scraping, ran code which successfully scrapped data through both Yahoo and Quandl, and discussed with Brandt on how we can load our data into a cloud SQL server. | ||

| − | === | + | (2 hours) Keith setup MySQL and SQLite and worked towards figuring out a way to make data accessible to everyone. |

| − | ===Oct. | + | |

| − | ===Oct. | + | (3 hours) Brandt Used Pandas to read-in the excel data, and MatPlotLib to display the results graphically in a plot. The code was uploaded to GitHub to enable the group to view and edit. I also Identified another type of data that could be used later on in the project for further analysis; technical data on SP 500 such as Simple moving average, Stochastic RSI, and bollinger bands. This data can be obtained statically for free via our Bloomberg terminals in the business library. Problems; how to handle data that is N/A (unavailable at the time like EUR before it was a thing or Libor before it existed) |

| − | + | ||

| − | ===Oct. | + | (1 Hour) Keith played around with Numpy and matrix manipulations and how to set up functions and define the main method. |

| − | ===Nov. | + | |

| − | ===Nov. | + | (2 hours) Jessica figured out how to use Python and got part of a GUI set up. |

| − | ===Nov. | + | |

| − | ===Nov. | + | (2.5 hours) Jessica worked on GUI. Got a rough version done. |

| − | + | ||

| − | ===Dec. | + | (1.5 hours) Keith set up AWS EC2 instance and configured apache, so now the instance is connected to internet. Had to overcome Permission denied (public key) error. [http://ec2-13-59-57-197.us-east-2.compute.amazonaws.com/~ese205 Check it out here] |

| + | |||

| + | (1 hour) Keith and Brandt talked to Madjid Zeggane about how to attack this problem and how to obtain data from bloomberg. We need to finalize which tickers, industries and indexes we need download. He advised us to narrow the scope of the project and make out make our goals more reachable for the scope of our course. | ||

| + | |||

| + | Possible Issues: _Setup second AWS EC2 instance in a way such that all of us can ssh into it. Remembering python | ||

| + | |||

| + | ===Week 5: (Sept. 30 -- Oct. 6)=== | ||

| + | |||

| + | (4 hours) Brandt completed intro/intermediate python module found on Kaggle and DataCamp. Now I'm more familiar with pandas and matplotlib. They have lessons over sci-kit learn which I will be going over as well. Check out my github [https://github.com/brandtlawson/EnDesign205v1 Here] | ||

| + | |||

| + | (45 min) Keith configured mysql database, created a non-root user for accessing information online and configured phpmyadmin to have a user interface with mysql. | ||

| + | |||

| + | (1 hour) Keith downloaded anaconda and python and configured to AWS EC2 instance. Then set up IPython and jupyter notebook. The [https://ec2-13-59-57-197.us-east-2.compute.amazonaws.com:8888/ link] to the notebook is password protected and your browser may have security issues with the link. To overcome just proceed to advanced settings and add this as a security exception. This is just to see the python files in our notebook. | ||

| + | |||

| + | (1 hour) Keith, Jessica, and Brandt met. | ||

| + | |||

| + | (1 hour) Brandt applied what was learned from and Kaggle and DataCamp to produce visualizations of the data using MatPlotLib. Then I utilized Seaborn in order to create a visualization of the correlation matrix of the features, and a second matrix with an OLS regression line draw through the graph. | ||

| + | |||

| + | (2 hours) Keith fixed the phpMyAdmin issue (took more research than anticipated), so you an now see the the databases, structure and content. Content may be hard to view in browser since there are over 17,000 entries. Additionally loaded data into SQL. | ||

| + | |||

| + | (3 hours) Jessica worked on finding a way to display the model chart in the GUI. | ||

| + | |||

| + | (1 Hour 10/8) Keith looked into how to use the newly installed python and anaconda to interact with the sql database. | ||

| + | |||

| + | (3 hours) Brandt added a correlation matrix to the github so that we can see empirically which variables are correlated and by how much. Brandt and Keith brainstormed about how to proceed with the project. Coordinated with Keith in an effort to get the EC2 instance to be accessible from My computer. | ||

| + | |||

| + | (3 hours) Keith formatted more data in excel to be loaded into sql. Error experienced errors with FIlezilla loading data from local machine to EC2 instance. Compiled list of commodities, bonds and sectors to be pulled from the Bloomberg machine. | ||

| + | |||

| + | Next steps: put Additional data into database, migrate GitHub code over to ec2 Jupiter Notebook, finish OLS and backtest somehow. Ask Chang how to backtest. | ||

| + | |||

| + | Challenges: Using python in the EC2 to interact with the data in SQL. Using python to display the model chart in a GUI. Methods for backtesting. | ||

| + | |||

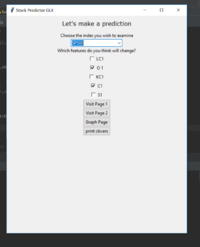

| + | [[File:GUI Pic.png|thumb]] | ||

| + | |||

| + | ===Week 6: (Oct. 14 -- Oct. 20)=== | ||

| + | (1.5 hours 10/14) Jessica worked on finding a way to display the model chart in the GUI. | ||

| + | |||

| + | (3 hours 10/16) Jessica successfully found a way to display the model chart on the GUI and changed the code. Also found a potential way to have live updates on the chart, but needs more research. | ||

| + | |||

| + | (2.5 hours 10/19) Keith met with Madjid in the Kopolow library to learn how to use a Bloomberg terminal to collect and export data. Also learned about the different ways that the commodities are prices and different techniques to analyze the data. Madjid suggested using the Bollinger bands to detect trends in the market. Anticipate challenges in collecting and parsing data. | ||

| + | |||

| + | ===Week 7: (Oct. 21 -- Oct. 27)=== | ||

| + | |||

| + | (3 hours 10/23) Brandt started a simple linear regression model on the Jupyter Notebook. The latest file on GitHub can be found here XXXXXXXXXXXXXXXX. The fit looks decent when a regression line is drawn graphically, and the R^2 gets better obviously as more features are made available. I am continuing researching on the most appropriate way to backtest our regression, any of the premade libraries I have found in python cater to Algorithmic-Trading and are designed to track Profit&Loss rather than some type of confidence interval of predictions. This could take longer than expected. I looked into k-fold cross validation, which essentially is a multi-iteraton version of doing a train/test split. | ||

| + | |||

| + | (1 hour 10/22) Keith researched on Bloomberg which commodity contracts are long lasting in order to determine which data to pull. Anticipate challenges in finding and cleaning data since some contracts can be short term. | ||

| + | |||

| + | (1.5 hours 10/23) Keith and Brandt went to pull data from Bloomberg machine, but the school's monthly limit has been met. Madjid will contact me when we can pull data again. Limits are reset monthly. Then met with Brandt to go over the current understanding of the math models and how we want to go forwards with testing and training (rolling forwards testing). | ||

| + | |||

| + | (1.5 hours 10/23) Brandt and Keith went to pull data from Bloomberg terminals, but someone used up the schools data limit for the next few days :(. Me and Keith discussed each others progress so as to channel our efforts most effectively. | ||

| + | |||

| + | ( 4 hours 10/23) Brandt finished constructing a few OLS regression models using 1 or more variables, and implemented several different model validation techniques . As discussed in the last log entry, first I implemented the k-fold validation. each fold has an R^2 so I took the mean of each fold. The R^2 values looked pretty awful, something had to be wrong. After doing some research, I discovered that apparently time series data must be treated differently when backtesting and cross-validating. So k-fold is not legitimate. Instead, multiple sources, (XXXXXXXXXXXXXXX) pointed to a modified k fold where the test data is never before the train data. I found a method in sci-kit learn that helps us do this, TimeSeriesSplit(), and implemented it. The R^2 make more sense but in some iterations are still negative or very small. Now that we have a method for cross-validating, we can implement other models until we are able to produce a more acceptable R^2. | ||

| + | |||

| + | |||

| + | |||

| + | The table structure at the moment is as follows:<br> | ||

| + | +-----------+---------------------+------+-----+---------+-------+<br> | ||

| + | | Field | Type | Null | Key | Default | Extra |<br> | ||

| + | +-----------+---------------------+------+-----+---------+-------+<br> | ||

| + | | ref_date | date | NO | | NULL | |<br> | ||

| + | | open | decimal(20,10) | NO | | NULL | |<br> | ||

| + | | high | decimal(20,10) | NO | | NULL | |<br> | ||

| + | | low | decimal(20,10) | NO | | NULL | |<br> | ||

| + | | adj_close | decimal(20,10) | NO | | NULL | |<br> | ||

| + | | close | decimal(20,10) | NO | | NULL | |<br> | ||

| + | | volume | bigint(20) unsigned | NO | | NULL | |<br> | ||

| + | +-----------+---------------------+------+-----+---------+-------+<br> | ||

| + | <br> | ||

| + | |||

| + | (10/25 2 hours) Keith and Brandt pulled data from the Bloomberg machine. We searched for commodities that had a long price history and had to figure out the best way to collect them. | ||

| + | |||

| + | Potential issues: TimeSeries annoying, R^2 bad, Hard to debug with more than 1 variable in regression b/c you can't view it graphically | ||

| + | |||

| + | (10/27 2 hours) Jessica worked on GUI. | ||

| + | |||

| + | Next steps: More Models, Gui, More Data | ||

| + | |||

| + | ===Week 8: (Oct. 28 -- Nov. 3)=== | ||

| + | |||

| + | (10/28 1 hour) Keith recollected data and saved it in a proper way (so that the values were save, not the queries). Then formatted the data in a way that can be inserted into SQL. | ||

| + | |||

| + | (10/28 1 hour) Jessica worked on the GUI, plot does not refresh with new inputted values. | ||

| + | |||

| + | (10/29 3.5 hours) Jessica worked on the GUI. Plot does update with new inputted data, but does not update inside of the GUI. It only updates plots properly when the plot is open in a different window. Trying to get the updates inside of the GUI. | ||

| + | |||

| + | (10/30 1 hour) Keith and Jessica met up to go over bugs in GUI. | ||

| + | |||

| + | (10/30 2.5 hours) Keith and Brandt discussed the objective of the project. It is pretty good at the moment, so our reevaluation is to keep it the same. Otherwise we worked on configuring arima (a machine learning toolbox) with our data. We are having trouble feeding in multiple variables. This should come with more time and familiarity with the software. | ||

| + | |||

| + | (10/30 1 hour) Brandt researched time series analysis methods and discovered new toolbox. Arima is similar to what Prof. Feher recommended. Worked on tuning the hyper parameters. | ||

| + | |||

| + | (11/02 2 hour) Keith cleaned combined the S&P500 data with the commodity data in one file. Preformed more research in support vector machines and started demo data camp. | ||

| + | |||

| + | (11/03 2 hour) Keith researched the underlying mechanics of the SVM and implemented the SVM but in doing so found errors in the data. There were 185 (.) instead of numerical values in the file. | ||

| + | |||

| + | (11/02 4 hours) Brandt researched ARIMA and beat his head for hours trying to get it to work. Now I know that ARIMA is for a single variable, but that "VARIMAX" may work for multiple exogenous variables, so we need to use that. | ||

| + | |||

| + | ===Week 9: (Nov. 4 -- Nov. 10)=== | ||

| + | (11/05 1.5 hour) Keith researched the SVM error and implementation. No results. | ||

| + | |||

| + | (11/5 2 hours) Jessica researched different regression models. | ||

| + | |||

| + | (11/06 4 hours) Brandt worked on finishing the forward rolling cross validation technique, and plotting the color coded results so that we can more easily debug bad fits. Also I cleaned ups the code a little bit. A read me os in the work. | ||

| + | |||

| + | (11/07 1 hour) Brandt met with Keith to go over our progress this week. We discussed a few different models and how to proceed going forward | ||

| + | |||

| + | (11/07 2.5 hours) Brandt derived/proved the Multivariate Ordinary Least Squares equations. This will be going onto the GitHub! | ||

| + | |||

| + | (11/08 1 hour) Keith set up and the SVM to train data, then let run for a few hours until program crashed. | ||

| + | |||

| + | (11/09 4 hours) Brandt and Keith talked about how to make make predictions in time given the relational coefficients and the best way to present this to users. Brandt further worked developing the regression and Keith worked on upgrading the GUI so that it can data from our modeling file. | ||

| + | |||

| + | Goals: Work out the error in the SVM code. Also get started on writing out and gain a better understanding of the support vector machine algorithms. (I linked a reference which has a pretty good mathematical formulation of what it is). Figure out a way to run the SVM without crashing the program. | ||

| + | |||

| + | ===Week 10: (Nov 11. -- Nov. 17)=== | ||

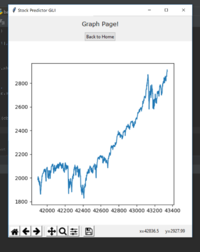

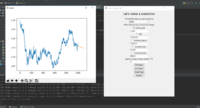

| + | (11/11 3 hours) Keith created a new GUI. Tkinter is best implemented with Object-oriented programming techniques which caused flaws in trying to implement the old one. The current GUI can take in data from outside python files (using pandas dataframes or numpy arrays) and can plot them using matplotlib in a Tkinter GUI (see link in references). The current configuration generates a plot in a window, but there is a return button to the homepage. There will be more style implemented later once the functionality of our forecasting is nailed down. | ||

| + | |||

| + | [[File:NewGuiUI.png | 200px | thumb| right | User Interface]] | ||

| + | |||

| + | [[File: NewGuiPlot.png | 200px | thumb| right|Plot Results]] | ||

| + | |||

| + | (11/12 1.5 hours) Jessica created Lasso regression model. | ||

| + | |||

| + | (11/13 1 hour) Keith and Brandt discussed their code and went through how each programed worked to start to merge the two. | ||

| + | |||

| + | (11/15 1 hour) Jessica began working on the poster for demonstration. [https://docs.google.com/presentation/d/1_B-JxY1cexO6_1PyznlvhnW9ugBHcQ8vgk51bDKUldI/edit?usp=sharing Demonstration Slide] | ||

| + | |||

| + | (11/16 2 hours) Keith and Brandt came up with new design for the gui and decided how we will construct the estimate based off of user input. | ||

| + | |||

| + | (11/19 4 hours) Brandt created an answer matrix so that our coefficients are pre calculated | ||

| + | |||

| + | Goals should be to complete the forecasting techniques and get some work done on the poster so that Chang and Prof. Feher can look it over before break. | ||

| + | |||

| + | ===Week 11: (Nov 18. -- Nov. 24)=== | ||

| + | |||

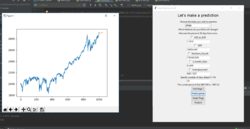

| + | (11/18 3 hours) Keith completed the reconfiguration of the GUI. Currently can use the interface to collect user input and predict what the price will be. The textboxes are automatically set to contain the most recent price for that feature. Currently can only predict based off of one feature. Faced troubles in handling bad user input so created functions to handle error (when collecting numbers ensuring they are floats or ints). Faced issues with updating the matplotlib graph embedded in tkinter, so just created a new popup window for the time being. | ||

| + | |||

| + | [[File: nov18gui.png | 200px | thumb| right| GUI]] | ||

| + | |||

| + | (11/19 2 hours) Keith and Brandt met up to talk about what exactly the user experience should be. Users should be able to specify the number of days then we predict the price of the individual features and the S&P 500. Users should also be able to input what features they think will change, and in how many days will that feature reach that price, then we calculate the price of the S&P500 for them. Brandt also took this time to work on the poster. | ||

| + | |||

| + | (11/19 5 hours) Brandt researched, then implemented ARIMA. ARIMA has 3 parameters, and the optimum hyper-parameters are estimated using an integer grid search over possible hyper parameter values and saving the parameter combination which has the least AIC (Akine Information Criterion) | ||

| + | |||

| + | (11/20 2.5 hours) Keith implemented the methods discussed in the previous post. Issues arose in the results of the regression. Our projected S&P 500 price is significantly off of what it should be. We can generate and display results, but they are not very accurate. The updated GUI now shows the current prices of the features and the S&P500 price on start up. Once the user specifies the number of days in advance and hits predict, the prices for all features and the S&P500 price are then updated and the results are displayed graphically. | ||

| + | |||

| + | [[File: 1120gui.png | 250px | thumb| right| 11/20 GUI]] | ||

| + | |||

| + | (11/23 1 hour) Jessica worked on poster. | ||

| + | |||

| + | ===Week 12: (Nov 25. -- Dec. 1)=== | ||

| + | |||

| + | (11/27 2 hours) Keith worked out bug with regression results after discussing issue with Brandt. I forgot to collect and add the y-intercept to the equation. Now the gui is able to take in user's predicted changes in the market and forecast the price of the S&P500 based off of their input. | ||

| + | |||

| + | (11/27 1 hour) Jessica worked on the proof for the poster. | ||

| + | |||

| + | ===Week 13: (Dec. 2 -- Dec. 8)=== | ||

| + | |||

| + | (12/4 1 hour) Jessica reviewed the code. | ||

| + | (12/5 2 hours) Jessica worked on the report. | ||

| + | (12/6 2 hours) Jessica planned out what needed to be written for the report and wrote some more. | ||

| + | Test | ||

[[Category:Logs]] | [[Category:Logs]] | ||

[[Category:Fall 2018 Logs]] | [[Category:Fall 2018 Logs]] | ||

Latest revision as of 16:46, 17 December 2018

Link to Project Page: Stock Analysis

Contents

- 1 Week 1: (Aug. 27 -- Sept. 2)

- 2 Week 2: (Sept. 3 -- Sept 9)

- 3 Week 3: (Sept. 10 -- Sept. 16)

- 4 Week 4: (Sept. 17 -- Sept. 23)

- 5 Week 4: (Sept. 24 -- Sept. 30)

- 6 Week 5: (Sept. 30 -- Oct. 6)

- 7 Week 6: (Oct. 14 -- Oct. 20)

- 8 Week 7: (Oct. 21 -- Oct. 27)

- 9 Week 8: (Oct. 28 -- Nov. 3)

- 10 Week 9: (Nov. 4 -- Nov. 10)

- 11 Week 10: (Nov 11. -- Nov. 17)

- 12 Week 11: (Nov 18. -- Nov. 24)

- 13 Week 12: (Nov 25. -- Dec. 1)

- 14 Week 13: (Dec. 2 -- Dec. 8)

Week 1: (Aug. 27 -- Sept. 2)

(1.5 hours) All group members discussed which data we should target and which properties of a home we consider important.

Week 2: (Sept. 3 -- Sept 9)

(1.0 hours) Brandt, Keith, and Jessica met with Prof. Feher to review the project and receive further guidance.

Week 3: (Sept. 10 -- Sept. 16)

(3.0 hours) Brandt and Keith further refined the project: investigated data scraping, how to add data to a database, and which sites are easy to scrape and have the data we're looking for. Keith downloaded PyCharm so that we could begin experimenting with our ideas in Python.

(9/15 Keith: 2 hrs.) Worked toward scraping data from Google Finance. Google protects its financial data, which will be a challenge; currently working on a workaround.

Week 4: (Sept. 17 -- Sept. 23)

(2 hours) Brandt, Keith, and Jessica met to discuss and refine the project idea as well as flesh out the wiki.

(2 hours) Jessica created and polished the presentation.

Week 4: (Sept. 24 -- Sept. 30)

(3 hours) Brandt compiled data and began to join it in Excel; the joined result was saved in Excel. I investigated uploading these tables of data to a SQL server and created accounts on AWS and Microsoft Azure, but had trouble figuring out how to upload the data.

(3 hours) Keith researched data scraping, ran code that successfully scraped data from both Yahoo and Quandl, and discussed with Brandt how we can load our data into a cloud SQL server.

(2 hours) Keith set up MySQL and SQLite and worked on a way to make the data accessible to everyone.

(3 hours) Brandt used pandas to read in the Excel data and Matplotlib to display the results in a plot. The code was uploaded to GitHub so the group can view and edit it. I also identified another type of data that could be used later in the project for further analysis: technical indicators for the S&P 500 such as the simple moving average, stochastic RSI, and Bollinger Bands. This data can be obtained statically, for free, via the Bloomberg terminals in the business library. Problem: how to handle data that is N/A (unavailable at the time, such as the euro before it was introduced or LIBOR before it existed).
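
The N/A problem above shows up as soon as series with different start dates are joined on date. Below is a minimal pandas sketch with made-up numbers and hypothetical column names: an outer join keeps every date and leaves NaN where a series did not exist yet, after which those rows can be dropped or forward-filled. Which option suits the model is a judgment call; the log does not say which one the team chose.

```python
import pandas as pd


def join_on_date(left: pd.DataFrame, right: pd.DataFrame) -> pd.DataFrame:
    """Outer-join two date-indexed frames; dates missing on one side become NaN."""
    return left.join(right, how="outer").sort_index()


# Made-up numbers and hypothetical column names, for illustration only.
dates = pd.date_range("2018-01-01", periods=5, freq="D")
index_prices = pd.DataFrame({"index_close": [100.0, 101.0, 102.0, 101.5, 103.0]}, index=dates)
# A series that only starts on the third day, i.e. it did not exist earlier.
late_series = pd.DataFrame({"late_feature": [1.10, 1.12, 1.11]}, index=dates[2:])

combined = join_on_date(index_prices, late_series)
print(combined.isna().sum())        # NaN count per column

complete_rows = combined.dropna()   # option 1: keep only dates where every series exists
filled = combined.ffill()           # option 2: carry values forward (leading NaNs remain)
```

Dropping rows shortens the history to the youngest series; forward-filling keeps rows but cannot invent values before a series begins.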

(1 hour) Keith experimented with NumPy matrix manipulation and with how to set up functions and define a main method.

(2 hours) Jessica figured out how to use Python and got part of a GUI set up.

(2.5 hours) Jessica worked on the GUI and got a rough version done.

(1.5 hours) Keith set up an AWS EC2 instance and configured Apache, so the instance is now connected to the internet. Had to overcome a "Permission denied (publickey)" error. Check it out here: http://ec2-13-59-57-197.us-east-2.compute.amazonaws.com/~ese205

(1 hour) Keith and Brandt talked to Madjid Zeggane about how to approach this problem and how to obtain data from Bloomberg. We need to finalize which tickers, industries, and indexes we need to download. He advised us to narrow the scope of the project and make our goals more reachable within the scope of our course.

Possible issues: setting up a second AWS EC2 instance so that all of us can SSH into it; remembering Python.

Week 5: (Sept. 30 -- Oct. 6)

(4 hours) Brandt completed the intro/intermediate Python modules on Kaggle and DataCamp. Now I'm more familiar with pandas and Matplotlib. They have lessons on scikit-learn, which I will be going over as well. Check out my GitHub here: https://github.com/brandtlawson/EnDesign205v1

(45 min) Keith configured the MySQL database, created a non-root user for accessing the data online, and configured phpMyAdmin to provide a user interface for MySQL.

(1 hour) Keith downloaded Anaconda and Python and configured them on the AWS EC2 instance, then set up IPython and Jupyter Notebook. The link to the notebook (https://ec2-13-59-57-197.us-east-2.compute.amazonaws.com:8888/) is password protected, and your browser may show a security warning for it. To get past it, go to the advanced settings and add the site as a security exception. This is just for viewing the Python files in our notebook.

(1 hour) Keith, Jessica, and Brandt met.

(1 hour) Brandt applied what was learned on Kaggle and DataCamp to produce visualizations of the data using Matplotlib. Then I used Seaborn to create a visualization of the correlation matrix of the features, and a second matrix with an OLS regression line drawn through the graph.

(2 hours) Keith fixed the phpMyAdmin issue (it took more research than anticipated), so you can now see the databases, their structure, and their content. The content may be hard to view in a browser since there are over 17,000 entries. Also loaded data into SQL.

(3 hours) Jessica worked on finding a way to display the model chart in the GUI.

(1 hour, 10/8) Keith looked into how to use the newly installed Python and Anaconda to interact with the SQL database.

(3 hours) Brandt added a correlation matrix to GitHub so that we can see empirically which variables are correlated and by how much. Brandt and Keith brainstormed about how to proceed with the project. Coordinated with Keith in an effort to make the EC2 instance accessible from my computer.
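
For reference, a correlation matrix like this one can be computed directly with pandas (`DataFrame.corr()`, Pearson by default), and seaborn's `heatmap` can then draw it. The sketch below, with hypothetical column names and synthetic data, ranks every feature by the absolute value of its correlation with a target column. Correlation only measures linear association, so this is a screening step rather than a model.

```python
import numpy as np
import pandas as pd


def correlations_with(df: pd.DataFrame, target: str) -> pd.Series:
    """Pearson correlation of every other column with `target`, strongest first."""
    corr = df.corr()[target].drop(target)
    return corr.reindex(corr.abs().sort_values(ascending=False).index)


# Synthetic data with hypothetical column names.
x = np.arange(10, dtype=float)
df = pd.DataFrame({
    "target": 2 * x + 1,
    "up": x,            # perfectly positively correlated with target
    "down": -3 * x,     # perfectly negatively correlated with target
    "noise": [3, 1, 4, 1, 5, 9, 2, 6, 5, 3],
})
print(correlations_with(df, "target"))
```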

(3 hours) Keith formatted more data in Excel to be loaded into SQL. Ran into errors with FileZilla when transferring data from the local machine to the EC2 instance. Compiled a list of commodities, bonds, and sectors to be pulled from the Bloomberg machine.

Next steps: put additional data into the database, migrate the GitHub code over to the EC2 Jupyter Notebook, finish OLS, and find a way to backtest. Ask Chang how to backtest.

Challenges: Using python in the EC2 to interact with the data in SQL. Using python to display the model chart in a GUI. Methods for backtesting.

Week 6: (Oct. 14 -- Oct. 20)

(1.5 hours 10/14) Jessica worked on finding a way to display the model chart in the GUI.

(3 hours 10/16) Jessica successfully found a way to display the model chart in the GUI and updated the code. Also found a potential way to have live updates on the chart, but it needs more research.

(2.5 hours 10/19) Keith met with Madjid in the Kopolow Library to learn how to use a Bloomberg terminal to collect and export data. Also learned about the different ways commodities are priced and different techniques for analyzing the data. Madjid suggested using Bollinger Bands to detect trends in the market. We anticipate challenges in collecting and parsing the data.
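
Bollinger Bands are usually defined as an N-period simple moving average plus and minus k standard deviations of price, commonly with N = 20 and k = 2. Below is a minimal pandas sketch under those assumed defaults; the log does not state which parameters Madjid suggested, and the prices in the usage line are made up.

```python
import pandas as pd


def bollinger_bands(close: pd.Series, window: int = 20, k: float = 2.0) -> pd.DataFrame:
    """Simple moving average and Bollinger Bands for a closing-price series.

    The first window - 1 rows are NaN because there is not yet enough history.
    """
    sma = close.rolling(window).mean()
    std = close.rolling(window).std(ddof=0)  # population std, as in the usual definition
    return pd.DataFrame({
        "close": close,
        "sma": sma,
        "upper": sma + k * std,
        "lower": sma - k * std,
    })


# Usage with made-up prices and a short window so the output is easy to read.
prices = pd.Series([10.0, 11.0, 12.0, 13.0, 14.0])
print(bollinger_bands(prices, window=3))
```

Closes outside the bands, or the bands widening and narrowing, are the kinds of patterns traders read from them; turning that into a trend signal is a separate modeling choice.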

Week 7: (Oct. 21 -- Oct. 27)

(3 hours 10/23) Brandt started a simple linear regression model in the Jupyter Notebook. The latest file on GitHub can be found here XXXXXXXXXXXXXXXX. The fit looks decent when the regression line is drawn graphically, and the in-sample R^2 improves, as expected, as more features are added. I am continuing to research the most appropriate way to backtest our regression: the prebuilt Python libraries I have found cater to algorithmic trading and are designed to track profit and loss rather than some kind of confidence interval for predictions. This could take longer than expected. I looked into k-fold cross-validation, which is essentially a multi-iteration version of a train/test split.
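
For reference, the standard OLS quantities behind this entry, with X the design matrix (including a leading column of ones for the intercept) and y the target vector:

```latex
\hat{\beta} = (X^\top X)^{-1} X^\top y, \qquad \hat{y} = X\hat{\beta}

R^2 = 1 - \frac{\sum_i (y_i - \hat{y}_i)^2}{\sum_i (y_i - \bar{y})^2}
```

Adding a column to X can never increase the minimized residual sum of squares, which is why the in-sample R^2 can only stay the same or rise as features are added. Evaluated on held-out data, the same formula goes negative whenever the model predicts worse than the mean of the test values.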

(1 hour 10/22) Keith researched on Bloomberg which commodity contracts are long-lived, to determine which data to pull. We anticipate challenges in finding and cleaning the data, since some contracts are short-term.

(1.5 hours 10/23) Keith and Brandt went to pull data from the Bloomberg machine, but the school's monthly limit had been reached. Madjid will contact me when we can pull data again; limits reset monthly. Then met with Brandt to go over our current understanding of the math models and how we want to proceed with testing and training (rolling-forward testing).

(1.5 hours 10/23) Brandt and Keith went to pull data from the Bloomberg terminals, but someone had used up the school's data limit for the next few days :(. Keith and I discussed each other's progress so as to channel our efforts most effectively.

(4 hours 10/23) Brandt finished constructing a few OLS regression models using one or more variables and implemented several model validation techniques. As discussed in the last log entry, I first implemented k-fold validation. Each fold has its own R^2, so I took the mean across folds. The R^2 values looked pretty awful; something had to be wrong. After some research, I found that time series data must be treated differently when backtesting and cross-validating: standard k-fold lets the model train on observations that come after the ones it is tested on, so it is not legitimate here. Instead, multiple sources (XXXXXXXXXXXXXXX) pointed to a modified k-fold in which the test data never precedes the training data. I found a class in scikit-learn that does this, TimeSeriesSplit(), and implemented it. The R^2 values make more sense, but in some iterations they are still negative or very small. Now that we have a cross-validation method, we can try other models until we produce a more acceptable R^2.
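
The leakage that makes plain k-fold unsuitable here can be checked directly. Below is a minimal sketch, assuming scikit-learn and synthetic random-walk data in place of the team's features: it tests whether any fold trains on observations that come after its test block, then computes the per-fold out-of-sample R^2 with `TimeSeriesSplit`, as the log describes. The helper names are hypothetical.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.model_selection import KFold, TimeSeriesSplit


def trains_on_future(splitter, n_samples):
    """True if any fold trains on observations that come after its test block."""
    X_dummy = np.zeros((n_samples, 1))
    return any(train.max() > test.min() for train, test in splitter.split(X_dummy))


def fold_r2_scores(X, y, splitter):
    """Fit OLS on each training fold and return the out-of-sample R^2 of each fold."""
    scores = []
    for train_idx, test_idx in splitter.split(X):
        model = LinearRegression().fit(X[train_idx], y[train_idx])
        scores.append(r2_score(y[test_idx], model.predict(X[test_idx])))
    return scores


# Synthetic data: random-walk features and a noisy linear target.
rng = np.random.default_rng(0)
n = 200
X = rng.normal(size=(n, 2)).cumsum(axis=0)
y = X @ np.array([1.5, -0.5]) + rng.normal(scale=0.5, size=n)

print(trains_on_future(KFold(n_splits=5), n))            # True: later data predicts earlier data
print(trains_on_future(TimeSeriesSplit(n_splits=5), n))  # False: test always follows train
scores = fold_r2_scores(X, y, TimeSeriesSplit(n_splits=5))
print(np.mean(scores))
```

`TimeSeriesSplit` uses an expanding training window by default; its `max_train_size` parameter caps the window length if a sliding window is preferred.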

The table structure at the moment is as follows:

+-----------+---------------------+------+-----+---------+-------+
| Field     | Type                | Null | Key | Default | Extra |
+-----------+---------------------+------+-----+---------+-------+
| ref_date  | date                | NO   |     | NULL    |       |
| open      | decimal(20,10)      | NO   |     | NULL    |       |
| high      | decimal(20,10)      | NO   |     | NULL    |       |
| low       | decimal(20,10)      | NO   |     | NULL    |       |
| adj_close | decimal(20,10)      | NO   |     | NULL    |       |
| close     | decimal(20,10)      | NO   |     | NULL    |       |
| volume    | bigint(20) unsigned | NO   |     | NULL    |       |
+-----------+---------------------+------+-----+---------+-------+
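
The team's table lives in MySQL; since the log also mentions SQLite, here is a minimal SQLite sketch of the same columns using Python's built-in `sqlite3` module. The table name `prices` and the sample rows are hypothetical. SQLite has no `DECIMAL(20,10)` or unsigned `BIGINT` type, so the sketch uses NUMERIC and INTEGER affinities and a CHECK constraint to approximate `unsigned`.

```python
import sqlite3

# SQLite version of the MySQL table above (table name `prices` is hypothetical).
# SQLite has no DECIMAL(20,10) or unsigned BIGINT: NUMERIC/INTEGER affinities
# stand in for them, and a CHECK constraint approximates "unsigned".
SCHEMA = """
CREATE TABLE IF NOT EXISTS prices (
    ref_date  TEXT    NOT NULL,  -- ISO-8601 date, e.g. '2018-10-23'
    open      NUMERIC NOT NULL,
    high      NUMERIC NOT NULL,
    low       NUMERIC NOT NULL,
    adj_close NUMERIC NOT NULL,
    close     NUMERIC NOT NULL,
    volume    INTEGER NOT NULL CHECK (volume >= 0)
)
"""


def load_rows(conn, rows):
    """Insert (ref_date, open, high, low, adj_close, close, volume) tuples."""
    conn.executemany(
        "INSERT INTO prices (ref_date, open, high, low, adj_close, close, volume) "
        "VALUES (?, ?, ?, ?, ?, ?, ?)",
        rows,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
load_rows(conn, [  # made-up sample rows
    ("2018-10-22", 10.0, 11.0, 9.5, 10.5, 10.5, 1000),
    ("2018-10-23", 10.5, 10.8, 9.9, 10.1, 10.1, 1500),
])
latest = conn.execute(
    "SELECT ref_date, close FROM prices ORDER BY ref_date DESC LIMIT 1"
).fetchone()
print(latest)
```

Queries use `?` placeholders rather than string formatting; MySQL drivers such as PyMySQL use `%s` placeholders instead.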

(10/25 2 hours) Keith and Brandt pulled data from the Bloomberg machine. We searched for commodities that had a long price history and had to figure out the best way to collect them.

Potential issues: time series data is annoying to work with, R^2 is bad, and a regression with more than one variable is hard to debug because you can't view it graphically.

(10/27 2 hours) Jessica worked on the GUI.

Next steps: more models, GUI, more data.

Week 8: (Oct. 28 -- Nov. 3)

(10/28 1 hour) Keith recollected data and saved it in a proper way (so that the values were save, not the queries). Then formatted the data in a way that can be inserted into SQL.

(10/28 1 hour) Jessica worked on the GUI, plot does not refresh with new inputted values.

(10/29 3.5 hours) Jessica worked on the GUI. The plot now updates with newly entered data, but not inside the GUI: it only updates properly when the plot is open in a separate window. Working on getting the updates to appear inside the GUI.

(10/30 1 hour) Keith and Jessica met up to go over bugs in GUI.

(10/30 2.5 hours) Keith and Brandt discussed the objective of the project. It is in good shape at the moment, so after reevaluating we decided to keep it the same. Otherwise, we worked on configuring ARIMA (an autoregressive integrated moving average time-series model) with our data. We are having trouble feeding in multiple variables; this should come with more time and familiarity with the software.

(10/30 1 hour) Brandt researched time-series analysis methods and discovered a new toolbox. ARIMA is similar to what Prof. Feher recommended. Worked on tuning the hyperparameters.

(11/02 2 hours) Keith cleaned and combined the S&P 500 data with the commodity data in one file. Performed more research on support vector machines and started the DataCamp demo.

(11/03 2 hours) Keith researched the underlying mechanics of the SVM and implemented it, but in doing so found errors in the data: 185 entries in the file contained a period (.) instead of a numerical value.
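The stray "." entries could be handled at load time, before the data reaches the SVM, which cannot accept missing values. The sketch below uses only the standard library. Periods and blanks become `None` and are counted, and a second function can optionally forward-fill them with the previous row's value. The column names and the forward-fill choice are assumptions; dropping the affected rows is the other common option. In pandas, `read_csv(..., na_values=".")` handles the first step.

```python
import csv
import io

MISSING_MARKERS = {".", ""}  # "." is the placeholder found in the exported file


def load_clean_rows(text, date_col="date"):
    """Parse CSV text, turning missing markers into None; return (rows, n_missing)."""
    rows, n_missing = [], 0
    for raw in csv.DictReader(io.StringIO(text)):
        row = {}
        for col, value in raw.items():
            value = (value or "").strip()  # short rows yield None from DictReader
            if col == date_col:
                row[col] = value
            elif value in MISSING_MARKERS:
                row[col] = None
                n_missing += 1
            else:
                row[col] = float(value)
        rows.append(row)
    return rows, n_missing


def forward_fill(rows, columns):
    """In place: replace None with the last seen value per column (leading gaps stay None)."""
    last_seen = {}
    for row in rows:
        for col in columns:
            if row[col] is None:
                row[col] = last_seen.get(col)
            else:
                last_seen[col] = row[col]
    return rows
```

Reporting `n_missing` makes it easy to confirm that the count of bad entries matches what was found by hand.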

(11/02 4 hours) Brandt researched ARIMA and beat his head against it for hours trying to get it to work. Now I know that ARIMA models a single variable, but that VARMAX may work with multiple exogenous variables, so we need to use that.

Week 9: (Nov. 4 -- Nov. 10)

(11/05 1.5 hours) Keith researched the SVM error and implementation. No results.

(11/5 2 hours) Jessica researched different regression models.

(11/06 4 hours) Brandt worked on finishing the forward-rolling cross-validation technique and on plotting color-coded results so that we can more easily debug bad fits. I also cleaned up the code a little. A README is in the works.
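One reading of "forward rolling" cross-validation is a fixed-length training window that slides forward through time. This differs from an expanding window, which keeps all earlier data. The generator below is a hypothetical sketch of that idea; its name and the `step` parameter are assumptions, not the project's code. Each split's test block can then be scored, and colored separately when plotting.

```python
def rolling_window_splits(n_samples, train_size, test_size, step=None):
    """Yield (train_idx, test_idx) for a fixed-length window sliding forward in time.

    step defaults to test_size, so consecutive test blocks do not overlap.
    """
    step = test_size if step is None else step
    if train_size < 1 or test_size < 1 or step < 1:
        raise ValueError("train_size, test_size and step must be positive")
    start = 0
    while start + train_size + test_size <= n_samples:
        split = start + train_size
        yield list(range(start, split)), list(range(split, split + test_size))
        start += step
```

Compared with an expanding window, a fixed window forgets old data. That can help if the relationship between commodities and the S&P 500 drifts over time, at the cost of fewer training points per fold.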

(11/07 1 hour) Brandt met with Keith to go over our progress this week. We discussed a few different models and how to proceed.

(11/07 2.5 hours) Brandt derived and proved the multivariate ordinary least squares (OLS) equations. The derivation will be going onto GitHub!
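For reference, the standard derivation of the multivariate OLS estimator is sketched below. It may not match the form of Brandt's write-up. Here X is the n × (k+1) design matrix whose first column is all ones (the intercept term), y is the vector of observed S&P 500 prices, and XᵀX is assumed invertible (X has full column rank). Setting the gradient of the squared error to zero gives the normal equations. The positive semidefinite second derivative confirms the solution is a minimum.

```latex
\begin{aligned}
S(\boldsymbol{\beta}) &= (\mathbf{y} - X\boldsymbol{\beta})^\top(\mathbf{y} - X\boldsymbol{\beta})
  = \mathbf{y}^\top\mathbf{y} - 2\,\boldsymbol{\beta}^\top X^\top\mathbf{y}
  + \boldsymbol{\beta}^\top X^\top X\,\boldsymbol{\beta} \\
\frac{\partial S}{\partial \boldsymbol{\beta}} &= -2\,X^\top\mathbf{y} + 2\,X^\top X\,\boldsymbol{\beta} = \mathbf{0}
  \quad\Longrightarrow\quad X^\top X\,\hat{\boldsymbol{\beta}} = X^\top\mathbf{y} \\
\hat{\boldsymbol{\beta}} &= (X^\top X)^{-1} X^\top \mathbf{y},
  \qquad \frac{\partial^2 S}{\partial \boldsymbol{\beta}\,\partial \boldsymbol{\beta}^\top} = 2\,X^\top X \succeq 0
\end{aligned}
```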

(11/08 1 hour) Keith set up the SVM to train on the data, then let it run for a few hours until the program crashed.

(11/09 4 hours) Brandt and Keith talked about how to make predictions over time given the relational coefficients and the best way to present the predictions to users. Brandt continued developing the regression, and Keith worked on upgrading the GUI so that it can read data from our modeling file.

Goals: Work out the error in the SVM code. Start writing out, and gain a better understanding of, the support vector machine algorithms (I linked a reference with a good mathematical formulation). Figure out a way to run the SVM without crashing the program.

Week 10: (Nov. 11 -- Nov. 17)

(11/11 3 hours) Keith created a new GUI. Tkinter is best implemented with object-oriented programming techniques, and the old GUI's lack of them caused problems when extending it. The current GUI can take in data from other Python files (as pandas DataFrames or NumPy arrays) and plot it with matplotlib inside a Tkinter GUI (see link in references). The current configuration generates a plot in a window, with a button to return to the homepage. More styling will be added once the forecasting functionality is nailed down.

(11/12 1.5 hours) Jessica created a Lasso regression model.
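Lasso adds an L1 penalty to least squares. The penalty can shrink some coefficients exactly to zero, so Lasso also acts as a form of feature selection among the commodities. The sketch below is a from-scratch coordinate-descent fit, written only to show the mechanics. It uses the same objective scaling as scikit-learn's `Lasso`, (1/(2n))·||y − Xw − b||² + α·||w||₁, but it is not the project's model. `lasso_coordinate_descent` and its parameters are hypothetical.

```python
def soft_threshold(z, gamma):
    """Shrink z toward zero by gamma; values within [-gamma, gamma] become exactly 0."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def lasso_coordinate_descent(X, y, alpha, n_iter=1000, tol=1e-10):
    """Fit Lasso by cyclic coordinate descent; X is a list of rows. Return (intercept, weights)."""
    n, p = len(X), len(X[0])
    x_means = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    # Centering lets the unpenalized intercept be recovered at the end.
    Xc = [[row[j] - x_means[j] for j in range(p)] for row in X]
    residual = [v - y_mean for v in y]  # equals yc - Xc @ w while w is all zeros
    col_sq = [sum(r[j] ** 2 for r in Xc) / n for j in range(p)]
    w = [0.0] * p
    for _ in range(n_iter):
        max_change = 0.0
        for j in range(p):
            if col_sq[j] == 0:
                continue  # constant column: leave its weight at 0
            # rho_j = (1/n) * x_j . (residual with feature j's contribution added back)
            rho = sum(Xc[i][j] * (residual[i] + Xc[i][j] * w[j]) for i in range(n)) / n
            new_wj = soft_threshold(rho, alpha) / col_sq[j]
            delta = new_wj - w[j]
            if delta != 0.0:
                for i in range(n):
                    residual[i] -= Xc[i][j] * delta
                w[j] = new_wj
                max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    intercept = y_mean - sum(w[j] * x_means[j] for j in range(p))
    return intercept, w
```

With α near zero the weights approach ordinary least squares. A large enough α drives every weight to zero, leaving only the mean of y as the intercept.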

(11/13 1 hour) Keith and Brandt discussed their code and walked through how each program worked, to start merging the two.

(11/15 1 hour) Jessica began working on the poster for the demonstration. Demonstration Slide

(11/16 2 hours) Keith and Brandt came up with a new design for the GUI and decided how we will construct the estimate based on user input.

(11/19 4 hours) Brandt created an answer matrix so that our coefficients are precalculated.

Goals: complete the forecasting techniques and make progress on the poster so that Chang and Prof. Feher can look it over before break.

Week 11: (Nov. 18 -- Nov. 24)

(11/18 3 hours) Keith completed the reconfiguration of the GUI. The interface can now collect user input and predict what the price will be. The text boxes are automatically filled with the most recent price for each feature. Currently it can only predict based on one feature. Handling bad user input was troublesome, so error-handling functions were added (ensuring that the numbers collected are floats or ints). Updating the matplotlib graph embedded in Tkinter also caused issues, so for the time being the graph opens in a new pop-up window.
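The kind of input-checking helper described above might look like the sketch below. It assumes the raw text comes from a Tkinter `Entry` widget's `get()` method, which returns a string. `parse_number` is a hypothetical name. Accepting thousands separators and rejecting NaN and infinity are design assumptions.

```python
import math


def parse_number(text, default=None):
    """Convert user-typed text to an int or float; return default if it is not a finite number."""
    cleaned = text.strip().replace(",", "")  # assumption: accept "1,250.75"
    try:
        return int(cleaned)
    except ValueError:
        pass
    try:
        value = float(cleaned)
    except ValueError:
        return default
    # float() accepts "nan" and "inf", which are not usable prices.
    return value if math.isfinite(value) else default
```

Returning a default instead of raising lets the GUI decide what to do with bad input, such as keeping the previous price or showing an error dialog.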

(11/19 2 hours) Keith and Brandt met to discuss what exactly the user experience should be. Users should be able to specify a number of days, and we then predict the prices of the individual features and of the S&P 500. Users should also be able to enter which features they think will change and in how many days each will reach its new price; we then calculate the S&P 500 price for them. Brandt also used this time to work on the poster.

(11/19 5 hours) Brandt researched, then implemented, ARIMA. ARIMA has three order parameters (p, d, q). The optimal hyperparameters are estimated with an integer grid search over candidate values, keeping the combination with the lowest AIC (Akaike Information Criterion).
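The AIC-based order search can be sketched with NumPy alone. This is a deliberately simplified stand-in, not the project's ARIMA code. It searches only the autoregressive order p of an AR(p) model fit by least squares. The differencing order d is applied beforehand and held fixed, and there is no moving-average q term. Scoring uses the Gaussian AIC, n·ln(RSS/n) + 2k, up to an additive constant. Every candidate is fit on the same sample, with the first `max_p` points dropped for all p, so the scores are comparable. Comparing AIC across different d is questionable anyway, because differencing changes the series being modeled. With statsmodels, the full search would instead loop over `(p, d, q)` and compare `ARIMA(y, order=(p, d, q)).fit().aic`.

```python
import math

import numpy as np


def ar_aic(series, p, max_p):
    """AIC (up to an additive constant) of an AR(p) model with intercept, fit by least squares.

    Every candidate p uses the same target sample series[max_p:], so scores are comparable.
    """
    series = np.asarray(series, dtype=float)
    target = series[max_p:]
    n = len(target)
    columns = [np.ones(n)]
    for lag in range(1, p + 1):
        columns.append(series[max_p - lag:len(series) - lag])  # series shifted back by `lag`
    X = np.column_stack(columns)
    beta, *_ = np.linalg.lstsq(X, target, rcond=None)
    rss = float(np.sum((target - X @ beta) ** 2))
    k = p + 2  # intercept, p lag coefficients, noise variance
    return n * math.log(rss / n) + 2 * k


def select_ar_order(y, max_p=5, d=0):
    """Difference y d times, then grid-search p in 0..max_p and keep the lowest AIC."""
    series = np.diff(np.asarray(y, dtype=float), n=d)  # n=0 returns the input unchanged
    scores = {p: ar_aic(series, p, max_p) for p in range(max_p + 1)}
    best_p = min(scores, key=scores.get)
    return best_p, scores
```

The 2k term penalizes extra parameters, so a larger p wins only if it reduces the residual error by enough to pay for itself.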

(11/20 2.5 hours) Keith implemented the methods discussed in the previous entry. Issues arose in the regression results: our projected S&P 500 price is significantly off from what it should be. We can generate and display results, but they are not very accurate. On startup, the updated GUI now shows the current prices of the features and of the S&P 500. Once the user specifies the number of days in advance and clicks Predict, the prices of all features and of the S&P 500 are updated and the results are displayed graphically.

(11/23 1 hour) Jessica worked on poster.

Week 12: (Nov. 25 -- Dec. 1)

(11/27 2 hours) Keith fixed a bug in the regression results after discussing the issue with Brandt: I had forgotten to collect and add the y-intercept to the equation. The GUI can now take in users' predicted changes in the market and forecast the price of the S&P 500 based on their input.
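The intercept bug comes down to the prediction formula ŷ = b₀ + Σ bᵢxᵢ. Leaving out b₀ shifts every forecast by a constant, which would explain forecasts that were consistently off. A minimal sketch follows; the function and feature names are hypothetical.

```python
def predict_sp500(intercept, coefficients, feature_values):
    """Linear-regression forecast: intercept + sum of coefficient * feature value.

    coefficients and feature_values are dicts keyed by (hypothetical) feature name.
    """
    missing = set(coefficients) - set(feature_values)
    if missing:
        raise KeyError(f"no value given for: {sorted(missing)}")
    return intercept + sum(coef * feature_values[name] for name, coef in coefficients.items())
```

For example, with intercept 10.0, coefficients `{"a": 2.0, "b": -1.0}` and inputs `{"a": 3.0, "b": 4.0}`, the forecast is 10 + 6 − 4 = 12.0. Dropping the intercept would give 2.0 instead.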

(11/27 1 hour) Jessica worked on the proof for the poster.

Week 13: (Dec. 2 -- Dec. 8)

(12/4 1 hour) Jessica reviewed the code.

(12/5 2 hours) Jessica worked on the report.

(12/6 2 hours) Jessica planned out what needed to be written for the report and wrote some more.
