Difference between revisions of "Iterative Averaging"

| (96 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

=Motivation= | =Motivation= | ||

| − | X10 | + | Iterative Averaging is the process of updating an array to so that each index becomes the average of the indices one before and one after it. After repeating this for many iterations, the array may converge to one set of numbers. For example, when given the following array, we can perform iterations of this algorithm until the array eventually converges: |

| + | |||

| + | {| class="wikitable" style="display: inline-table;" | ||

| + | |- | ||

| + | ! scope="col" | [0] || [1] || [2] || [3] || [4] || [5] || [6] || [7] || [8] || [9] || [10] | ||

| + | |- | ||

| + | | 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 1.0 | ||

| + | |- | ||

| + | | 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.5 || 1.0 | ||

| + | |- | ||

| + | | 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.25 || 0.5 || 1.0 | ||

| + | |- | ||

| + | | 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.125 || 0.25 || 0.625 || 1.0 | ||

| + | |- | ||

| + | | 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.0625 || 0.125 || 0.375 || 0.625 || 1.0 | ||

| + | |- | ||

| + | | 0.0 || 0.0 || 0.0 || 0.0 || 0.0 || 0.03125 || 0.0625 || 0.21875 || 0.375 || 0.6875 || 1.0 | ||

| + | |- | ||

| + | | 0.0 || 0.0 || 0.0 || 0.0 || 0.015625 || 0.03125 || 0.125 || 0.21875 || 0.453125 || 0.6875 || 1.0 | ||

| + | |- | ||

| + | | 0.0 || 0.0 || 0.0 || 0.0078125 || 0.015625 || 0.0703125 || 0.125 || 0.2890625 || 0.453125 || 0.7265625 || 1.0 | ||

| + | |- | ||

| + | | 0.0 || 0.0 || 0.00390625 || 0.0078125 || 0.0390625 || 0.0703125 || 0.1796875 || 0.2890625 || 0.5078125 || 0.7265625 || 1.0 | ||

| + | |- | ||

| + | | 0.0 || 0.001953125 || 0.00390625 || 0.021484375 || 0.0390625 || 0.109375 || 0.1796875 || 0.34375 || 0.5078125 || 0.75390625 || 1.0 | ||

| + | |- | ||

| + | | 0.0 || 0.001953125 || 0.01171875 || 0.021484375 || 0.0654296875 || 0.109375 || 0.2265625 || 0.34375 || 0.548828125 || 0.75390625 || 1.0 | ||

| + | |- | ||

| + | | 0.0 || style="text-align:center;" colspan="9" | <math>\vdots</math> || 1.0 | ||

| + | |- | ||

| + | | 0.0 || style="text-align:center;" colspan="9" | <math>\vdots</math> || 1.0 | ||

| + | |- | ||

| + | | 0.0 || style="text-align:center;" colspan="9" | <math>\vdots</math> || 1.0 | ||

| + | |- | ||

| + | | 0.0 || 0.1 || 0.2 || 0.3 || 0.4 || 0.5 || 0.6 || 0.7 || 0.8 || 0.9 || 1.0 | ||

| + | |} | ||

| + | <!-- | ||

| + | |||

| + | {| class="wikitable" style="display: inline-table;" | ||

| + | |- | ||

| + | ! scope="col" | src || dst | ||

| + | |- | ||

| + | | phase 0 || | ||

| + | |- | ||

| + | | phase 1 || phase 0 | ||

| + | |- | ||

| + | | phase 2 || phase 1 | ||

| + | |- | ||

| + | | phase 3 || phase 2 | ||

| + | |- | ||

| + | | phase 4 || phase 3 | ||

| + | |- | ||

| + | | phase 5 || phase 4 | ||

| + | |- | ||

| + | | phase 6 || phase 5 | ||

| + | |- | ||

| + | | phase 7 || phase 6 | ||

| + | |- | ||

| + | | phase 8 || phase 7 | ||

| + | |- | ||

| + | | phase 9 || phase 8 | ||

| + | |- | ||

| + | | phase 10 || phase 9 | ||

| + | |- | ||

| + | | style="text-align:center;" colspan="2" | <math>\vdots</math> | ||

| + | |- | ||

| + | | style="text-align:center;" colspan="2" | <math>\vdots</math> | ||

| + | |- | ||

| + | | style="text-align:center;" colspan="2" | <math>\vdots</math> | ||

| + | |- | ||

| + | | || phase N-1 | ||

| + | |} | ||

| + | |||

| + | Note that the ends of the array remain the same value from the previous iteration. | ||

| + | --> | ||

| + | |||

| + | X10-like Phasers have been added to Java since JDK7. We will gain some experience with using Phasers in a parallel for-loop context. Phasers allow us to change the structure of our loops and reduce overhead in the algorithm. | ||

| + | <!-- | ||

| + | For more information on the algorithm and how we can use Phasers to make it better, review [https://edge.edx.org/courses/RiceX/COMP322/1T2014R/courseware/a900dd0655384de3b5ef01e508ea09d7/6349730bb2a149a0b33fa23db7afddee/13 Topic 3.5] from the RiceX course. | ||

| + | --> | ||

=Background= | =Background= | ||

| + | <youtube>Ixprqn_Xhf4</youtube> | ||

| + | |||

| + | ==Java Phaser== | ||

| + | <youtube>whPAPmylbEU</youtube> | ||

| + | |||

| + | [[Reference_Page#Phasers|Check out the reference page on phasers]] | ||

| + | |||

| + | [http://www.baeldung.com/java-phaser Guide to the Java Phaser] | ||

| + | |||

[https://docs.oracle.com/javase/8/docs/api/java/util/concurrent/Phaser.html class Phaser] | [https://docs.oracle.com/javase/8/docs/api/java/util/concurrent/Phaser.html class Phaser] | ||

| − | : [https://docs.oracle.com/javase/8/docs/api/java/util/concurrent/Phaser.html#bulkRegister-int- bulkRegister] | + | : [https://docs.oracle.com/javase/8/docs/api/java/util/concurrent/Phaser.html#bulkRegister-int- bulkRegister] (also see instructions on [[#ForkLoopWithPhaserIterativeAverager |ForkLoopWithPhaserIterativeAverager]]) |

: [https://docs.oracle.com/javase/8/docs/api/java/util/concurrent/Phaser.html#arriveAndAwaitAdvance-- arriveAndAwaitAdvance] | : [https://docs.oracle.com/javase/8/docs/api/java/util/concurrent/Phaser.html#arriveAndAwaitAdvance-- arriveAndAwaitAdvance] | ||

| − | |||

| − | [ | + | {{warning | Our use of the forall loop with Phasers does not accurately convey their finicky nature. More than other features, Phasers seem to require more care to get performance improvements. }} |

| + | |||

| + | ==PhasableDoubleArrays== | ||

| + | *[https://www.cse.wustl.edu/~cosgroved/courses/cse231/current/apidocs/edu/wustl/cse231s/phasable/AbstractPhasable.html class AbstractPhasable<T>] | ||

| + | **[https://www.cse.wustl.edu/~cosgroved/courses/cse231/current/apidocs/edu/wustl/cse231s/phasable/AbstractPhasable.html#getSrcForPhase-int- getSrcForPhase(phaseIndex)] | ||

| + | **[https://www.cse.wustl.edu/~cosgroved/courses/cse231/current/apidocs/edu/wustl/cse231s/phasable/AbstractPhasable.html#getDstForPhase-int- getDstForPhase(phaseIndex)] | ||

| + | *[https://www.cse.wustl.edu/~cosgroved/courses/cse231/current/apidocs/edu/wustl/cse231s/phasable/PhasableDoubleArrays.html class PhasableDoubleArrays extends AbstractPhasable<double<nowiki>[]</nowiki>>] | ||

| + | ** [https://www.cse.wustl.edu/~cosgroved/courses/cse231/current/apidocs/edu/wustl/cse231s/phasable/PhasableDoubleArrays.html#%3Cinit%3E(double%5B%5D,java.util.function.Function) new PhasableDoubleArrays(originalData, initializerFunction)] | ||

| + | |||

| + | ==Ranges== | ||

| + | [[Ranges|Ranges (Previous Exercise To Use)]] | ||

| + | |||

| + | =Lecture= | ||

| + | <youtube>0c2PZ-ARDDE</youtube> | ||

| + | |||

| + | =Code to Implement= | ||

| + | ==IterativeAveragingUtils== | ||

| + | {{CodeToImplement|IterativeAveragingUtils|slice<br/>createPhasableDoubleArrays|iterativeaveraging.util.exercise}} | ||

| + | |||

| + | ===slice=== | ||

| + | <youtube>_taVHpi1Hpg</youtube> | ||

| + | |||

| + | Each IterativeAverager should slice the data into ranges. The constructor for each IterativeAverager is passed the number of slices to create. | ||

| + | |||

| + | {{Tip| Use Ranges.slice(min, maxExclusive, numSlices)}} | ||

| + | |||

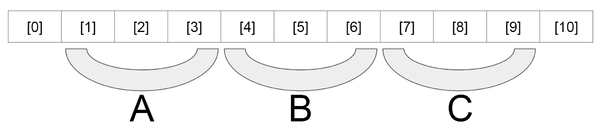

| + | If you are to create 3 slices an array of length 11: | ||

| + | |||

| + | [[File:IterativeAveraging.png|600px]] | ||

| + | |||

| + | the slices should have these properties: | ||

| + | |||

| + | {|class="wikitable" | ||

| + | |||sliceID||minInclusive||maxExclusive | ||

| + | |- | ||

| + | |sliceA||0||1||4 | ||

| + | |- | ||

| + | |sliceB||1||4||7 | ||

| + | |- | ||

| + | |sliceC||2||7||10 | ||

| + | |} | ||

| + | |||

| + | ===createPhasableDoubleArrays=== | ||

| + | <youtube>-SBcwicgyOE</youtube> | ||

| + | |||

| + | TL;DR: Initialize a double[] and make sure to copy over the original data's 0th and last index's value. | ||

| + | |||

| + | ==LoopOfForkLoopsIterativeAverager== | ||

| + | {{CodeToImplement|LoopOfForkLoopsIterativeAverager|sliceCount<br/>iterativelyAverage|iterativeaveraging.exercise}} | ||

| + | |||

| + | {{Parallel|public double[] iterativelyAverage(double[] originalArray, int iterationCount)}} | ||

| + | |||

| + | For this method, you should not be using Phasers. Instead, implement a parallel version of the [[Sequential_Iterative_Averager_Assignment|Sequential Iterative Averaging warm up]]. Make use of the PhasableDoubleArrays class. The return value should be the current version of the array after it has gone through the number of iterations passed in the method. | ||

| + | |||

| + | <nowiki>sequential loop | ||

| + | parallel loop | ||

| + | work</nowiki> | ||

| + | |||

| + | |||

| + | What is the work for each task? | ||

| + | |||

| + | [[File:LoopOfForkLoops_IterativeAverager.svg|600px]] | ||

| + | |||

| + | ==X10PhasedForkLoopIterativeAverager== | ||

| − | {{ | + | {{CodeToImplement|X10PhasedForkLoopIterativeAverager|x10<br/>sliceCount<br/>iterativelyAverage|iterativeaveraging.exercise}} |

| − | + | {{Parallel|public double[] iterativelyAverage(double[] originalArray, int iterationCount)}} | |

| − | |||

| − | {{ | ||

| − | + | <nowiki>x10 phased parallel loop | |

| + | sequential loop | ||

| + | work | ||

| + | arrive and await advance on phaser</nowiki> | ||

| − | + | What is the work for each iteration of a task? | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | [[File:X10PhasedForkLoop_IterativeAverager.svg|600px]] | |

| − | + | ==ForkLoopWithPhaserIterativeAverager== | |

| − | + | {{CodeToImplement|ForkLoopWithPhaserIterativeAverager|phaserCreator<br/>sliceCount<br/>iterativelyAverage|iterativeaveraging.exercise}} | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | {{Parallel|public double[] iterativelyAverage(double[] originalArray, int iterationCount)}} | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | } | ||

| − | |||

| − | + | Before you get started on this, make sure you review the [[Iterative Averaging#Background | Background section]] in order to understand how to utilize Phasers (it will look different than the X10 implementation). This time, we will use Phasers to create a parallel version of the algorithm that has less overhead. Here are a few notes to keep in mind when working on this assignment: | |

| − | + | *Think carefully as to how the loops in this version will be structured. Drawing out the computation graph may help | |

| + | *Bulk registering a Phaser indicates how many times <code>phaser.arriveAndAwaitAdvance()</code> needs to be called before any of the threads are able to move on. In other words, it equals to how many threads need to arive at the checkpoint before moving on. Make sure to register the right number! | ||

| + | <!-- | ||

| + | *You also bulk register the SwappableDoubleArrays when instantiating it. Similar to Phasers, the integer passed through in the constructor tells the object how many times <code>swap()</code> needs to be called before the source and destination arrays are actually swapped. | ||

| + | --> | ||

| − | + | <nowiki>create phaser | |

| + | register phaser for each task | ||

| + | parallel loop | ||

| + | sequential loop | ||

| + | work | ||

| + | arrive and await advance on phaser</nowiki> | ||

| − | + | What is the work for each iteration of a task? | |

| − | + | [[File:ForkLoopWithPhaser_IterativeAverager.svg|600px]] | |

=Testing Your Solution= | =Testing Your Solution= | ||

==Correctness== | ==Correctness== | ||

| − | {{TestSuite| | + | {{TestSuite|__RigidIterativeAveragingTestSuite|iterativeaveraging.rigid.exercise}} |

| − | + | ||

| − | {{ | + | : {{TestSuite|_IterativeAveragingUtilsTestSuite|iterativeaveraging.util.exercise}} |

| + | |||

| + | {{TestSuite|_LoopOfForkLoopsIterativeAveragerTestSuite|iterativeaveraging.rigid.exercise}} | ||

| + | |||

| + | {{TestSuite|_X10PhasedForkLoopIterativeAveragerTestSuite|iterativeaveraging.rigid.exercise}} | ||

| + | |||

| + | {{TestSuite|_ForkLoopWithPhaserIterativeAveragerTestSuite|iterativeaveraging.rigid.exercise}} | ||

Latest revision as of 18:27, 17 April 2024

Motivation

Iterative Averaging is the process of updating an array to so that each index becomes the average of the indices one before and one after it. After repeating this for many iterations, the array may converge to one set of numbers. For example, when given the following array, we can perform iterations of this algorithm until the array eventually converges:

| [0] | [1] | [2] | [3] | [4] | [5] | [6] | [7] | [8] | [9] | [10] |

|---|---|---|---|---|---|---|---|---|---|---|

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 |

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5 | 1.0 |

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.25 | 0.5 | 1.0 |

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.125 | 0.25 | 0.625 | 1.0 |

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0625 | 0.125 | 0.375 | 0.625 | 1.0 |

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.03125 | 0.0625 | 0.21875 | 0.375 | 0.6875 | 1.0 |

| 0.0 | 0.0 | 0.0 | 0.0 | 0.015625 | 0.03125 | 0.125 | 0.21875 | 0.453125 | 0.6875 | 1.0 |

| 0.0 | 0.0 | 0.0 | 0.0078125 | 0.015625 | 0.0703125 | 0.125 | 0.2890625 | 0.453125 | 0.7265625 | 1.0 |

| 0.0 | 0.0 | 0.00390625 | 0.0078125 | 0.0390625 | 0.0703125 | 0.1796875 | 0.2890625 | 0.5078125 | 0.7265625 | 1.0 |

| 0.0 | 0.001953125 | 0.00390625 | 0.021484375 | 0.0390625 | 0.109375 | 0.1796875 | 0.34375 | 0.5078125 | 0.75390625 | 1.0 |

| 0.0 | 0.001953125 | 0.01171875 | 0.021484375 | 0.0654296875 | 0.109375 | 0.2265625 | 0.34375 | 0.548828125 | 0.75390625 | 1.0 |

| 0.0 | 1.0 | |||||||||

| 0.0 | 1.0 | |||||||||

| 0.0 | 1.0 | |||||||||

| 0.0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1.0 |

X10-like Phasers have been added to Java since JDK7. We will gain some experience with using Phasers in a parallel for-loop context. Phasers allow us to change the structure of our loops and reduce overhead in the algorithm.

Background

Java Phaser

Check out the reference page on phasers

- bulkRegister (also see instructions on ForkLoopWithPhaserIterativeAverager)

- arriveAndAwaitAdvance

PhasableDoubleArrays

Ranges

Ranges (Previous Exercise To Use)

Lecture

Code to Implement

IterativeAveragingUtils

| class: | IterativeAveragingUtils.java | |

| methods: | slice createPhasableDoubleArrays |

|

| package: | iterativeaveraging.util.exercise | |

| source folder: | student/src/main/java |

slice

Each IterativeAverager should slice the data into ranges. The constructor for each IterativeAverager is passed the number of slices to create.

If you are to create 3 slices an array of length 11:

the slices should have these properties:

| sliceID | minInclusive | maxExclusive | |

| sliceA | 0 | 1 | 4 |

| sliceB | 1 | 4 | 7 |

| sliceC | 2 | 7 | 10 |

createPhasableDoubleArrays

TL;DR: Initialize a double[] and make sure to copy over the original data's 0th and last index's value.

LoopOfForkLoopsIterativeAverager

| class: | LoopOfForkLoopsIterativeAverager.java | |

| methods: | sliceCount iterativelyAverage |

|

| package: | iterativeaveraging.exercise | |

| source folder: | student/src/main/java |

method: public double[] iterativelyAverage(double[] originalArray, int iterationCount) ![]() (parallel implementation required)

(parallel implementation required)

For this method, you should not be using Phasers. Instead, implement a parallel version of the Sequential Iterative Averaging warm up. Make use of the PhasableDoubleArrays class. The return value should be the current version of the array after it has gone through the number of iterations passed in the method.

sequential loop

parallel loop

work

What is the work for each task?

X10PhasedForkLoopIterativeAverager

| class: | X10PhasedForkLoopIterativeAverager.java | |

| methods: | x10 sliceCount iterativelyAverage |

|

| package: | iterativeaveraging.exercise | |

| source folder: | student/src/main/java |

method: public double[] iterativelyAverage(double[] originalArray, int iterationCount) ![]() (parallel implementation required)

(parallel implementation required)

x10 phased parallel loop

sequential loop

work

arrive and await advance on phaser

What is the work for each iteration of a task?

ForkLoopWithPhaserIterativeAverager

| class: | ForkLoopWithPhaserIterativeAverager.java | |

| methods: | phaserCreator sliceCount iterativelyAverage |

|

| package: | iterativeaveraging.exercise | |

| source folder: | student/src/main/java |

method: public double[] iterativelyAverage(double[] originalArray, int iterationCount) ![]() (parallel implementation required)

(parallel implementation required)

Before you get started on this, make sure you review the Background section in order to understand how to utilize Phasers (it will look different than the X10 implementation). This time, we will use Phasers to create a parallel version of the algorithm that has less overhead. Here are a few notes to keep in mind when working on this assignment:

- Think carefully as to how the loops in this version will be structured. Drawing out the computation graph may help

- Bulk registering a Phaser indicates how many times

phaser.arriveAndAwaitAdvance()needs to be called before any of the threads are able to move on. In other words, it equals to how many threads need to arive at the checkpoint before moving on. Make sure to register the right number!

create phaser

register phaser for each task

parallel loop

sequential loop

work

arrive and await advance on phaser

What is the work for each iteration of a task?

Testing Your Solution

Correctness

| class: | __RigidIterativeAveragingTestSuite.java | |

| package: | iterativeaveraging.rigid.exercise | |

| source folder: | testing/src/test/java |

class: _IterativeAveragingUtilsTestSuite.java

package: iterativeaveraging.util.exercise source folder: testing/src/test/java class: _LoopOfForkLoopsIterativeAveragerTestSuite.java

package: iterativeaveraging.rigid.exercise source folder: testing/src/test/java class: _X10PhasedForkLoopIterativeAveragerTestSuite.java

package: iterativeaveraging.rigid.exercise source folder: testing/src/test/java class: _ForkLoopWithPhaserIterativeAveragerTestSuite.java

package: iterativeaveraging.rigid.exercise source folder: testing/src/test/java