WALL-E

Djsullivan

Categories: Projects, Fall 2016 Projects

Latest revision as of 21:38, 7 February 2017


Overview

We will create a robot car that can lead a blind person and warn them about upcoming obstacles. Once an obstacle has been detected, the robot will send some form of message to the person being led, such as a sound from a speaker. The robot will use a microprocessor, motors and sensors to determine the position of an object in its path and then adjust its direction accordingly to avoid a crash. Our motivation for this project is to learn about some of the applications of autonomous cars and to build on a previous group's project, Leap Controlled RC Car, which can be found here (Link). Here are also some of the resources we are drawing from to create this project: (link), (link). The main improvement we will make on these projects is to give them the application of leading a person, which means the robot will need new code to carry out its leading task.

Team Members

Daniel Sullivan, Novi Wang, Andrew O'Sullivan (TA)

Leap Controlled RC Car (Link)

This project is a continuation of the project Leap Controlled RC Car and will use some of the results and ideas found by Andrew O'Sullivan and David D’Agrosa. Specifically, we will use the same concept of controlling a small electric car with an Arduino Uno and a motor shield. Our project won't be remotely controlled, but sketches such as the ones that drive the motors through the motor shield will be similar. Their code can be found under the RC folder here (Link), and we will use it to familiarize ourselves with programming in Arduino.

Objectives

A successful project will end as an autonomous vehicle that alerts a person when an obstacle is encountered and helps the person avoid it. This means the distance sensors are reliably sending information back to the Arduino to detect obstacles while the car stays in front of the follower, the motor shield is connected and working with the driver code we will write, and the robot sends sound signals when there is an obstacle ahead. Using an IMU sensor, the robot will maintain a straight path forward. When an obstacle is encountered, it will shift by a constant angle either left or right, go a set distance forward and straighten out. The car will alert the follower through a speaker, with one beep or two for left or right. The follower should only need to take one step to the left or right. The front sensors detect the position of obstacles and decide whether to turn left or right, and the back sensors measure the distance to the person. When the person is too far away from the vehicle, the vehicle stops and backs up until the person is within the expected distance interval. At the end of the semester we will demonstrate our project by putting the vehicle on the ground, blindfolding a project member and having the robot lead the member forward while avoiding obstacles.
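The follow-distance rule described above could be expressed as a small decision function. This is a minimal sketch under assumptions: the 60 cm limit, using the nearest of the three rear readings, and representing a missing echo as `None` are all hypothetical choices, not values taken from the project code.

```python
from typing import Optional, Sequence

# Hypothetical upper edge of the "expected distance interval"; the page gives no number.
FOLLOW_MAX_CM = 60.0


def follow_action(back_readings_cm: Sequence[Optional[float]]) -> str:
    """Choose a drive action from the three rear distance readings.

    A reading of None stands for "no echo" (nobody detected by that sensor).
    """
    valid = [d for d in back_readings_cm if d is not None]
    if not valid:
        return "stop"      # no feet detected behind: do not start (or keep) moving
    nearest = min(valid)   # the closest rear reading is taken as the follower
    if nearest > FOLLOW_MAX_CM:
        return "reverse"   # follower has fallen behind: back up toward them
    return "forward"       # follower is within the interval: keep leading


print(follow_action([None, 45.0, 80.0]))  # forward
```

Collapsing the three rear sensors to their minimum keeps the rule simple; a real implementation might also add hysteresis so the car does not flip between reversing and driving forward right at the threshold.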

Challenges

Some of the initial design challenges are building, connecting and organizing all the electronics, learning how to program in Arduino, and getting all the distance sensors to work together via I2C between two Arduinos alongside an MPU-6050 Accelerometer + Gyro. We will spend extra time exploring how to program in Arduino by working through tutorials. The main challenges will be getting the robot to move forward in a straight line while making slight corrections when necessary, getting the car to turn accurate angles, and making sure the robot stays a constant distance ahead of the follower: reversing when the person falls too far behind and stopping when the robot gets too far ahead. All of these challenges will be solved in the Arduino code, based on conditions and data gathered from the distance sensors and gyroscope.

For privacy reasons we will constantly monitor the robot to make sure it doesn't go into any rooms unattended. For user safety, along with carefully watching the robot to make sure it doesn't crash, we will add bright caution tape so that no one steps on it. To keep the operating cost below 150 dollars, the robot will be kept small. The robot is electric and leaves a small carbon footprint.

Budget

- Arduino Motor Shield R3, for $24.97 at Amazon (Link)

- The motor shield will control the two motors on the robot. (Spec sheet)

- 7 sensors: HC-SR04, for $5.00 each at Amazon for a total of $35.00. (Link)

- The sensor will measure the distance between the robot and objects. (Spec sheet: http://www.micropik.com/PDF/HCSR04.pdf)

- Piezo Electronic Buzzer, for $5.30 plus $4.49 shipping at Amazon (Link: https://www.amazon.com/gp/product/B013TEI05A/ref=pd_sbs_328_2?ie=UTF8&psc=1&refRID=W6DJKPDN7XVG8P5T48VZ)

- Alarm to signal a turn left or right (Guide: https://www.arduino.cc/en/Tutorial/toneMelody)

- Batteries, for $9.98 (Link)

- The batteries power the shields and the Arduino.

- Magician Chassis, for $29.95 at Amazon. (Link)

- The car's chassis, motors and battery pack. (Spec sheet)

- MPU-6050 Accelerometer + Gyro, supplied by the class

- Gyroscope to determine angles, making sure the car goes forward in a straight line and turns a constant angle (Guide: http://playground.arduino.cc/Main/MPU-6050)

Total Budget: $104.69
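Summing the listed prices is a quick check on the total. With seven sensors at $5.00 the items come to $109.69 (including the $4.49 buzzer shipping), while six sensors, the number the Hardware section says were actually used, gives exactly the stated $104.69. The MPU-6050 is counted as $0 because the class supplied it.

```python
from decimal import Decimal

# Prices copied from the budget list above; the MPU-6050 was supplied by the class ($0).
FIXED_ITEMS = {
    "Arduino Motor Shield R3": Decimal("24.97"),
    "Piezo buzzer": Decimal("5.30"),
    "Piezo buzzer shipping": Decimal("4.49"),
    "Batteries": Decimal("9.98"),
    "Magician Chassis": Decimal("29.95"),
}
HC_SR04_PRICE = Decimal("5.00")


def budget_total(sensor_count: int) -> Decimal:
    """Total cost for a given number of HC-SR04 sensors (Decimal avoids float rounding)."""
    return sum(FIXED_ITEMS.values(), Decimal("0")) + HC_SR04_PRICE * sensor_count


print(budget_total(7))  # 109.69
print(budget_total(6))  # 104.69
```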

Gantt Chart

Design and Solutions

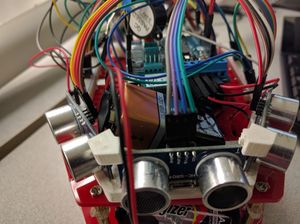

Hardware

- Car Design

At the very beginning of this project we decided to use a vehicle kit that provided a pre-built chassis and motors, to save the time of 3D printing and assembling motors. The chassis held up well for the most part and was a wise decision, although a few modifications were needed, such as adding to the height of the structure and moving the battery placement. There were some spacing issues, but with some wire management we were able to at least protect the wires for the lower Arduino; overall the chassis worked well for us. The motors, however, were a disappointment: they were not in sync at all, and one motor was significantly faster than the other. The addition of the IMU and a short control algorithm in Arduino fixed this by spinning the slower wheel faster when the car angled off track. If we were to do it again, more planning should go into researching and selecting quality motors, as this would have let us spend more time on the coding sections of the project instead of on design issues.
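A minimal sketch of the kind of correction described above: a proportional controller that speeds up one wheel and slows the other in proportion to the yaw error, doing nothing inside a ±5° deadband. The gain, base speed and sign convention are assumptions for illustration; the page does not give the project's actual control law, which may differ (for example, it may only speed up the slower wheel).

```python
# Hypothetical values except the deadband, which is the +/-5 degree "straight interval"
# described in the Coding section.
DEADBAND_DEG = 5.0
KP = 4.0          # hypothetical gain: PWM counts added/removed per degree of error
BASE_PWM = 180    # hypothetical cruise speed on the 0-255 analogWrite scale


def clamp_pwm(value: float) -> int:
    """Round and limit a speed to the 0-255 PWM range."""
    return max(0, min(255, round(value)))


def wheel_speeds(yaw_deg: float) -> tuple:
    """Return (left_pwm, right_pwm) that steer the car back toward 0 degrees yaw.

    Assumed sign convention: positive yaw means the car has drifted to the left,
    so the left wheel speeds up and the right wheel slows down to turn it right.
    """
    if abs(yaw_deg) <= DEADBAND_DEG:
        return BASE_PWM, BASE_PWM       # close enough to straight: no correction
    correction = KP * yaw_deg
    return clamp_pwm(BASE_PWM + correction), clamp_pwm(BASE_PWM - correction)


print(wheel_speeds(10.0))  # (220, 140)
```

Correcting both wheels symmetrically keeps the average speed roughly constant; clamping matters for large errors, where the faster wheel would otherwise exceed the PWM range.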

- IMU

The IMU provided the means to make the car turn accurately and stay on course, both vital for the project to be considered a success at all. The IMU was also the largest time sink of the project. Large portions of time were spent studying the documentation, the Arduino library, the examples InvenSense provided and the datasheet in order to extract meaningful data from the sensor. At first the plan was to use interrupts and the digital motion processor, but this proved too problematic. Instead, the Arduino handles calibration and converts the raw values from the IMU into useful angles in degrees. A very useful resource for learning how to do this was this example of leveling a quadcopter (link). After obtaining the angles, we realized the yaw angle was drifting even while the car was not moving. This was partially solved by adding a simple least squares filter; to handle drift over longer periods, a more permanent solution is needed.
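The calibrate-then-integrate approach described above can be sketched in a few functions. The page only says a "simple least squares filter" was added; one plausible reading, assumed here, is fitting a straight line to the yaw recorded while the car sits still and subtracting that slope over time. The function names are hypothetical; 65.5 LSB per °/s is the MPU-6050 datasheet sensitivity for the ±500 °/s range mentioned in the Results section.

```python
from typing import Sequence

# MPU-6050 gyro sensitivity at the +/-500 deg/s full-scale setting (datasheet value).
GYRO_LSB_PER_DPS = 65.5


def gyro_bias(stationary_raw: Sequence[int]) -> float:
    """Calibration: average raw Z-gyro counts recorded while the car is still."""
    return sum(stationary_raw) / len(stationary_raw)


def integrate_yaw(raw: Sequence[int], bias: float, dt_s: float) -> float:
    """Integrate bias-corrected angular rate (deg/s) into a yaw angle in degrees."""
    yaw = 0.0
    for count in raw:
        yaw += (count - bias) / GYRO_LSB_PER_DPS * dt_s
    return yaw


def drift_rate(times_s: Sequence[float], yaws_deg: Sequence[float]) -> float:
    """Least-squares slope (deg/s) of yaw versus time, fitted while stationary."""
    n = len(times_s)
    mean_t = sum(times_s) / n
    mean_y = sum(yaws_deg) / n
    num = sum((t - mean_t) * (y - mean_y) for t, y in zip(times_s, yaws_deg))
    den = sum((t - mean_t) ** 2 for t in times_s)
    return num / den


def corrected_yaw(yaw_deg: float, elapsed_s: float, drift_dps: float) -> float:
    """Remove the estimated linear drift accumulated over elapsed_s seconds."""
    return yaw_deg - drift_dps * elapsed_s


bias = gyro_bias([10, 12, 14])                # 12.0 counts
yaw = integrate_yaw([143] * 100, bias, 0.01)  # 1 s at (143 - 12) / 65.5 = 2 deg/s
print(round(yaw, 3))                          # 2.0
```

A linear drift model only removes a constant residual bias; slow changes in bias (for example with temperature) would still accumulate, which is consistent with the page's note that a more permanent solution is needed.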

- Distance Sensors

A total of 6 distance sensors were used: an array of three in the back and three in the front. Each distance sensor has four pins. Two of them (ground and power) can go to a shared bus, but the other two (trig and echo) must be wired directly into the Arduino, for a total of 12 general-purpose pins. The Arduino Uno only has 14 digital pins, however, and 6 of them are needed by the motor shield. We realized there weren't enough pins on one regular Arduino Uno for this project. Possible fixes included multiplexing (difficult and error-prone), using an Arduino Mega (expensive) or using two Arduinos communicating via I2C. Our solution was to use two Arduinos: a slave that reads all the distance sensors and a master that controls the motors.

The distance sensors themselves experience noise. The planned solution was to add a short delay of .5 microseconds between the individual pings of each sensor, but this was not implemented in time for the project presentation.
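The pin arithmetic in the Distance Sensors paragraph, together with the standard HC-SR04 conversion from echo pulse width to distance, can be checked in a few lines. The pin counts are the ones stated in the text; the 0.0343 cm/µs speed of sound assumes room-temperature air. On the board itself the pulse width would typically come from `pulseIn(echoPin, HIGH)`, which returns microseconds.

```python
UNO_DIGITAL_PINS = 14        # digital I/O pins on an Arduino Uno
MOTOR_SHIELD_PINS = 6        # pins needed by the motor shield, as stated above
PINS_PER_SENSOR = 2          # trig + echo; power and ground share a bus

SPEED_OF_SOUND_CM_PER_US = 0.0343  # about 343 m/s, assuming room-temperature air


def pins_needed(sensor_count: int) -> int:
    return sensor_count * PINS_PER_SENSOR


def free_pins_with_shield() -> int:
    return UNO_DIGITAL_PINS - MOTOR_SHIELD_PINS


def echo_to_cm(echo_us: float) -> float:
    """Convert an HC-SR04 echo pulse width to distance.

    The pulse spans the round trip to the object and back, hence the division by 2.
    """
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2


print(pins_needed(6), free_pins_with_shield())  # 12 8
```

Twelve required pins against eight free ones is the shortfall that pushed the design to a second Arduino.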

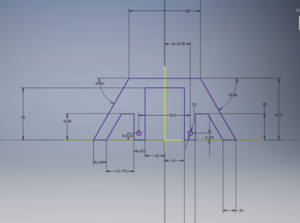

- 3D Printing Casing for Distance Sensors

We used Autodesk Inventor to design the bases for the sensors, then used the free Cura slicing software to turn the .stl file from Autodesk Inventor into G-code for the Ultimaker 2+ in the lab. There are two bases: one for the front sensors and one for the back sensors. Their purpose is to hold the sensors steady. We want the sensors to measure distances around the vehicle, so they need to be angled relative to each other. On the printed base, the middle sensor stays horizontal and the left and right sensors are mounted at 60 degrees, so the sensors can detect obstacles in different directions.
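To see what the 60 degree mounting means for a reading, a sensor's distance can be split into components ahead of and beside the car. This assumes the 60 degrees is measured from the car's forward axis (the text does not say whether it is measured from the middle sensor or between neighbouring sensors) and ignores the small offset between sensors on the mount; the function name is hypothetical.

```python
import math

SIDE_ANGLE_DEG = 60.0  # assumed to be measured from the car's forward axis

SENSOR_ANGLES_DEG = {"left": SIDE_ANGLE_DEG, "middle": 0.0, "right": -SIDE_ANGLE_DEG}


def obstacle_offset(sensor: str, distance_cm: float) -> tuple:
    """Return (ahead_cm, left_cm) of an echo relative to the sensor mount.

    Positive left_cm means the obstacle is to the car's left.
    """
    angle = math.radians(SENSOR_ANGLES_DEG[sensor])
    return distance_cm * math.cos(angle), distance_cm * math.sin(angle)


ahead, left = obstacle_offset("left", 30.0)
print(round(ahead, 1), round(left, 1))  # 15.0 26.0
```

Under this assumption, a 30 cm reading on a side sensor puts the obstacle only about 15 cm ahead of the car, which is worth keeping in mind when choosing the side thresholds.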

Communication

To have enough inputs for this project, two Arduinos talking over I2C had to be used. Initially, errors sprang up from using interrupts and communicating over I2C at the same time; conveniently, this was solved by calculating the data from the IMU on the main Arduino. To talk over I2C between the Arduinos we used the Wire library (link). The first error we ran into was sending integers over I2C, since only one byte can be sent at a time. A simple solution is to use bit operators to split each integer into its high and low bytes, send them one at a time, and then recombine them on the other Arduino. Another problem was timing the IMU and the slave Arduino so that each talks to the main Arduino at a reasonable time, as only one device can talk at a time. The solution became much easier once the main Arduino took a larger role in handling data: the slave Arduino's transfer is timed to finish before the IMU is called to talk.
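The byte-splitting approach described above is shown here in Python so it can be run anywhere; on the Arduinos themselves the same expressions (`value >> 8` and `value & 0xFF`) would be passed to `Wire.write` on the sender and recombined after two `Wire.read` calls on the receiver. Handling negative values through the two's-complement pattern is an addition for completeness; the page does not say whether negative readings were sent.

```python
def split_int16(value: int) -> tuple:
    """Split a signed 16-bit integer (an Arduino Uno int) into (high, low) bytes."""
    if not -32768 <= value <= 32767:
        raise ValueError("value does not fit in 16 bits")
    raw = value & 0xFFFF            # two's-complement bit pattern
    return (raw >> 8) & 0xFF, raw & 0xFF


def join_int16(high: int, low: int) -> int:
    """Recombine the two bytes on the receiving side, restoring the sign."""
    raw = ((high & 0xFF) << 8) | (low & 0xFF)
    return raw - 0x10000 if raw & 0x8000 else raw


high, low = split_int16(300)
print(high, low, join_int16(high, low))  # 1 44 300
print(join_int16(*split_int16(-2)))      # -2
```

Both sides must agree on the byte order (high byte first here); swapping it on one side silently produces wrong distances rather than an error.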

Coding

In order to avoid obstacles in different situations, the vehicle was programmed to handle four main scenarios: obstacle on the left, obstacle on the right, long wall and small obstacle. The vehicle only starts moving when the back sensors detect feet behind it, and it distinguishes the scenarios according to the distances measured by the front sensors.

- Obstacle on the left

When the left distance is close enough and smaller than the right distance, the obstacle is on the left. The vehicle turns right until no obstacle is detected and moves forward a short distance, then adjusts its angle back to straight. We set the "straight interval" to plus or minus 5 degrees so that the vehicle can move forward even when the angle is not exactly 0 degrees (which is why the vehicle sometimes shakes when it moves forward).

- Obstacle on the right

The right scenario works in a similar way. When the right distance is close enough and smaller than the left distance, the obstacle is on the right. The vehicle turns left until no obstacle is detected and moves forward a short distance, then adjusts its angle back to straight.

- Long Wall

The long wall scenario occurs when the middle distance is close and the left and right distances are both greater than the middle distance. The vehicle then picks a turning direction by comparing the left and right distances: it turns left when the right distance is smaller and turns right when the left distance is smaller.

- Small Obstacle

The small obstacle scenario occurs when the left and right distances are very large and the middle distance is close. In this case the vehicle turns right by default.
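The four scenarios above can be sketched as a classifier over the three front distances plus a lookup for the turn direction. This is an illustration under assumptions, not the project's code: the 30 cm "close" threshold is borrowed from the Results section, the 100 cm "very far away" threshold is hypothetical, and so are the order of the checks and the tie-break (turn right) when a long wall has equal side distances.

```python
from typing import Optional

# 30 cm matches the reaction distance quoted in the Results section;
# the "very far away" threshold is a hypothetical value.
CLOSE_CM = 30.0
FAR_CM = 100.0


def classify(left: float, middle: float, right: float) -> str:
    """Map the three front distances (cm) to one of the four scenarios, or 'clear'."""
    if left < CLOSE_CM and left < right:
        return "obstacle_left"
    if right < CLOSE_CM and right < left:
        return "obstacle_right"
    if middle < CLOSE_CM:
        # Checked before the long-wall case, since a small obstacle also has
        # left and right distances greater than the middle one.
        if left > FAR_CM and right > FAR_CM:
            return "small_obstacle"
        if left > middle and right > middle:
            return "long_wall"
    return "clear"


def turn_direction(scenario: str, left: float, right: float) -> Optional[str]:
    """Return 'left', 'right', or None (keep going straight)."""
    if scenario == "obstacle_left":
        return "right"
    if scenario == "obstacle_right":
        return "left"
    if scenario == "long_wall":
        return "left" if right < left else "right"
    if scenario == "small_obstacle":
        return "right"    # default direction from the text
    return None


s = classify(50.0, 20.0, 40.0)
print(s, turn_direction(s, 50.0, 40.0))  # long_wall left
```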

Results

- Distance Sensors: Walking behind the car is awkward; anyone would have trouble not stepping on it, let alone a blind person. This is a serious design issue. At the demo a student asked, "How come the sensors don't point towards the person's chest?" It is a simple fix, but tunnel vision and trying to stop the Arduino from crashing seemed like larger issues, so this was put on the back burner for too long.

- IMU: The car excels at holding its angle and driving in straight lines, and it makes sharp, accurate movements, because the IMU is set to a ±500 degrees/s range read with 16 bits, a resolution of roughly 0.015 degrees/s per count. The car easily handles obstacles to the left or right: when a sensor detects an obstacle within 30 cm, the car turns until that sensor no longer detects it, then straightens out and continues forward. However, the IMU took a significant amount of time away from all other aspects of the project, such as design and coding.

- Algorithm: Overall the algorithm works well, but there is a problem after the vehicle changes direction. The professor pointed out that the vehicle never moved forward after turning; it only moved forward once there was no obstacle in front. This is caused by the short amount of time the vehicle moves forward. The algorithm does call the forward function after the vehicle has turned until no obstacle is detected: when a scenario finishes turning, the forward function is called, and the algorithm then moves on to the next iteration of the main loop. However, the delay in the motor code is 0.1 seconds, so the vehicle only moves forward for 0.1 seconds each time the forward function is called. That is so short that the vehicle seems not to move forward at all. In addition, the forward angle interval is set to plus or minus 5 degrees, so the vehicle will move forward even when its direction is slightly off straight. Once the vehicle drifts off 0 degrees, it easily goes past positive or negative 5 degrees, and it then turns left or right to bring the direction back into the forward interval. This is why the vehicle "shakes" and does not look straight when moving forward. It may be better to keep track of all the turning angles and subtract them from the current angle when the vehicle needs to adjust its direction. We also need to modify the after-turn forward behavior, for example by giving it its own moving time instead of sharing it with the normal forward function.

- Feedback to Blind person: The speaker works well and was easy to implement: a simple download of pitches (https://www.arduino.cc/en/Tutorial/toneMelody) and a few lines of code later, it was up and running. Right now it only provides the bare minimum of feedback, though: it plays two different tones for left and right and periodically plays a 300 ms tone every 3 seconds. But the speed of incorporating it was important; there's no way we would have been able to finish an app alongside this project.
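The two fixes suggested in the Algorithm point above might look roughly like this. It is a sketch, not the project's code: the `CourseKeeper` class, its sign convention and the 1.0 s post-turn duration are hypothetical; only the 0.1 s forward step and the ±5° interval come from the text.

```python
from typing import List

FORWARD_STEP_S = 0.1        # the shared 0.1 s forward step described above
AFTER_TURN_FORWARD_S = 1.0  # hypothetical: a separate, longer step used right after a turn
DEADBAND_DEG = 5.0          # the +/-5 degree forward interval


class CourseKeeper:
    """Hypothetical helper that remembers avoidance turns (positive = left, assumed)."""

    def __init__(self) -> None:
        self.turns_deg: List[float] = []

    def record_turn(self, delta_deg: float) -> None:
        self.turns_deg.append(delta_deg)

    def heading_error(self, yaw_deg: float) -> float:
        """Current angle minus all recorded turns: the error the corrector should act on."""
        return yaw_deg - sum(self.turns_deg)

    def needs_correction(self, yaw_deg: float) -> bool:
        return abs(self.heading_error(yaw_deg)) > DEADBAND_DEG


def forward_duration(just_turned: bool) -> float:
    """Give the post-turn forward move its own duration instead of the shared step."""
    return AFTER_TURN_FORWARD_S if just_turned else FORWARD_STEP_S


keeper = CourseKeeper()
keeper.record_turn(30.0)
print(keeper.heading_error(33.0), keeper.needs_correction(33.0))  # 3.0 False
```

Measuring the error against the intended heading means the deadband corrector no longer fights a deliberate avoidance turn, and a longer post-turn step gives the car time to actually clear the obstacle before the next loop iteration.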

Comparison to original objectives

At the beginning we didn't understand how long it would take to get all the hardware set up, not just working but working well. Originally we wanted to write an app to handle feedback to the follower; thankfully Humberto steered us away from that, as there's just no way it would have been completed on time. The speaker manages feedback well enough, although maybe we are overcomplicating things and a leash would work just as well. The original objective was to fully blindfold a person and let them walk behind the car, and the results fall short here. In other ways, though, the hardware works surprisingly well: data from the IMU is extremely accurate, but the algorithm could use more work, as the car makes sharp turns.

All files to run Wall-E can be found here: https://github.com/djs12/Wall-E