AmazonRekognition

Latest revision as of 15:44, 27 April 2019

Overview

This tutorial covers how to create an Amazon Web Services (AWS) account, how to set up a camera on the Raspberry Pi, and how to run Amazon Rekognition facial recognition on a picture taken from the Pi. Before we can run facial recognition we must create an AWS user, create an S3 bucket, and upload pictures to this S3 bucket. The final product will calculate the similarity between a picture taken on the Pi and the images saved in the S3 bucket.

Materials/Prerequisites

- Atom, or another source code editor

- A Raspberry Pi

- A Pi camera

Process

Setting up AWS account:

1.) Visit the AWS setup tutorial page (https://aws.amazon.com/premiumsupport/knowledge-center/create-and-activate-aws-account/), click on sign into console, create a new AWS account, and proceed by entering your email, password, and account name.

2.) Use the Boto3 installation page (https://boto3.amazonaws.com/v1/documentation/api/latest/guide/quickstart.html#installation) to install the AWS SDK for Python (Boto3).

3.) After completing the registration process and confirming your account, sign into the console, open the IAM console, choose Users, then Add user.

- Give your user a name, and proceed with programmatic access.

- Add the following permissions to the user: AmazonS3FullAccess, AmazonRekognitionFullAccess, and AmazonS3ReadOnlyAccess.

- After creating the user, click on security credentials, download the access key ID and secret access key, and store them in a safe file. These credentials should not be shared or pushed to GitHub.

4.) Next go to Services, then S3, then Create bucket. Note the region and bucket name for later use. Enable static website hosting and the ACL permissions.

5.) Next, upload the images you want to test for facial similarity against the picture you will take. Click Upload and choose an image file (preferably saved as a .jpg) from your computer.
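The upload in step 5 can also be scripted once Boto3 is installed. The following is a minimal sketch, not part of the original tutorial: the helper name upload_reference_faces is hypothetical, and it assumes each image should be stored under its own file name as the S3 key. It relies only on the S3 client's upload_file(Filename, Bucket, Key) call.

```python
import os


def upload_reference_faces(s3_client, bucket, image_paths):
    """Upload each local image to the bucket, keyed by its file name."""
    uploaded_keys = []
    for path in image_paths:
        # Assumption: the S3 key is the bare file name, e.g. "faces/name1.jpg" -> "name1.jpg"
        key = os.path.basename(path)
        s3_client.upload_file(path, bucket, key)
        uploaded_keys.append(key)
    return uploaded_keys
```

With a real client this would be called as upload_reference_faces(boto3.client("s3"), "yourbucketname", ["name1.jpg", "name2.jpg"]), where the bucket and file names are placeholders.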

Setting Up Pi

1.) Now that AWS is set up, we move on to the Pi side of the facial recognition. First we must connect our camera module to our Raspberry Pi. Pull up on the camera clasp, insert the camera tail so that the metal side points away from the USB ports, and then push the camera clasp back into place.

2.) Run sudo raspi-config, enable the camera add-on, then press Finish.

3.) Install the camera library with sudo apt-get install python-picamera, then reboot your Raspberry Pi.

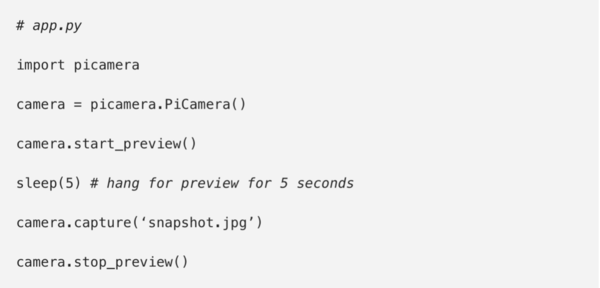

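4.) In Python, run the following code (from Medium: https://medium.com/@petehouston/capture-images-from-raspberry-pi-camera-module-using-picamera-505e9788d609) to ensure the camera is functioning properly.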
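A camera test along these lines might look like the sketch below. It is an illustration rather than the code from the linked article: the function name capture_test_image, the file name test.jpg, and the two-second warm-up are assumptions. It uses only the picamera calls that also appear later in this tutorial (PiCamera, resolution, capture) plus start_preview and stop_preview.

```python
import time


def capture_test_image(camera, path="test.jpg", warmup_seconds=2):
    """Take one still with an already-open camera object and return its path."""
    camera.resolution = (1280, 720)
    camera.start_preview()
    time.sleep(warmup_seconds)  # give the sensor time to adjust exposure
    camera.capture(path)
    camera.stop_preview()
    return path


def main():
    # picamera is only available on the Raspberry Pi, so it is imported here
    import picamera

    with picamera.PiCamera() as camera:
        print("saved", capture_test_image(camera))
```

Calling main() on the Pi should leave a test.jpg in the current directory; if the file opens and shows the camera view, the module is connected correctly.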

Connect AWS and Raspberry Pi

1.) First, import boto3, which allows Python to communicate with Amazon Web Services, and picamera, which provides a Python interface to the Raspberry Pi camera.

import boto3

import picamera

2.) Next, connect Python to your AWS account by entering the AWS access key and the secret access key (which were downloaded while setting up the IAM user). The region name, which is shown with your S3 bucket on AWS, should also be included here. The variable must be named session in lowercase, because the later steps call session.client.

    session = boto3.Session(aws_access_key_id="enter", aws_secret_access_key="enter", region_name="enter")
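Because the credentials should not be shared or pushed to GitHub, one option is to keep them out of the script entirely. The sketch below assumes the keys are stored in the standard AWS environment variables AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_DEFAULT_REGION; the helper name session_kwargs_from_env is hypothetical.

```python
import os

REQUIRED_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_DEFAULT_REGION")


def session_kwargs_from_env(env=os.environ):
    """Build the keyword arguments for boto3.Session from environment variables."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        # Fail early with a clear message instead of a later AWS error
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return {
        "aws_access_key_id": env["AWS_ACCESS_KEY_ID"],
        "aws_secret_access_key": env["AWS_SECRET_ACCESS_KEY"],
        "region_name": env["AWS_DEFAULT_REGION"],
    }
```

The session would then be created with session = boto3.Session(**session_kwargs_from_env()), so the script itself contains no secrets.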

3.) Insert the following code, replacing 'enter region' with your region and 'yourbucketname' with the name of your S3 bucket.

    s3 = session.client('s3')
    client = session.client('rekognition', 'enter region')
    bucket = 'yourbucketname'

4.) Next we define the list of faces that were uploaded into our bucket. These images will be the faces that our code tries to match with the picture taken by the Pi. List the names without the .jpg extension, because step 10 appends ".jpg" to each name to build the S3 key.

    FACE_LIST = ["name1", "name2", "name3"]

5.) Next we create key_target, the key (filename) under which the picture from the Pi is stored in the bucket. We also define two boolean variables that we use later: IS_FACE distinguishes an image with a person from an image with no person, and RECOGNIZED_FACE distinguishes a recognized face from an unrecognized one. MIN_SIM sets the baseline similarity that we deem necessary to consider two faces a match. threshold is passed to Rekognition as the SimilarityThreshold; a value of 0 asks Rekognition to return matches regardless of similarity, which we then filter with MIN_SIM.

    key_target = "intruder.jpg"
    threshold = 0
    RECOGNIZED_FACE = False
    IS_FACE = False
    region_name = "enter"
    message = []
    MIN_SIM = 60.0

6.) Now we use the Pi camera to take a picture, saved locally as intruderface.jpg, and upload it to the S3 bucket under the key stored in key_target.

    if __name__ == "__main__":
        with picamera.PiCamera() as camera:
            camera.resolution = (1280, 720)
            camera.capture('intruderface.jpg')
        s3.upload_file('intruderface.jpg', Bucket=bucket, Key=key_target)

7.) The detect_face function calls Rekognition's detect_faces operation, which returns the following attributes for each detected face: bounding box, confidence, facial landmarks, facial attributes, quality, pose, and emotions. We use these attributes, starting with the confidence value, to decide whether the picture contains a face. Again, enter your region_name below.

    def detect_face(bucket, key, region_name="enter", attributes=['ALL']):
        rekognition = session.client("rekognition", region_name)
        response = rekognition.detect_faces(
            Image={
                "S3Object": {
                    "Bucket": bucket,
                    "Name": key
                }
            },
            Attributes=attributes,
        )
        return response['FaceDetails']

8.) The compare_faces function returns the face found in the source image and a list of matches in the target image, each with a similarity score that indicates how closely the taken image matches one from our list.

    def compare_faces(bucket, key, bucket_target, key_target, threshold, region_name):
        rekognition = session.client("rekognition", region_name)
        response = rekognition.compare_faces(
            SourceImage={
                "S3Object": {
                    "Bucket": bucket,
                    "Name": key,
                }
            },
            TargetImage={
                "S3Object": {
                    "Bucket": bucket_target,
                    "Name": key_target,
                }
            },
            SimilarityThreshold=threshold,
        )
        return response['SourceImageFace'], response['FaceMatches']

9.) This loop sets our boolean IS_FACE, marking it True if a face is detected and False if no face is detected.

    for face in detect_face(bucket, key_target):
        conf = face['Confidence']
        if conf >= MIN_SIM:
            message.append("face detected")
            IS_FACE = True
        else:
            IS_FACE = False
            message.append("no face detected")
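The check in step 9 can also be written as a small helper. This is a minimal sketch, assuming detect_face returns a list of dictionaries that each carry a 'Confidence' value, as used above; the name face_present is hypothetical. Unlike the loop, it returns False when Rekognition returns no faces at all, and a later low-confidence face does not override an earlier confident one.

```python
def face_present(face_details, min_confidence=60.0):
    """Return True if any detected face meets the confidence baseline."""
    return any(face["Confidence"] >= min_confidence for face in face_details)


def face_message(face_details, min_confidence=60.0):
    """Return the same status strings the tutorial appends to its message list."""
    if face_present(face_details, min_confidence):
        return "face detected"
    return "no face detected"
```

In the tutorial's script this would be used as IS_FACE = face_present(detect_face(bucket, key_target), MIN_SIM).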

10.) If our boolean IS_FACE is True, we now want to determine whether this face matches a face in our list. This loop compares the taken image with each face in our list, and if the minimum similarity MIN_SIM is met, it matches the image to that user.

    if IS_FACE:
        for face in FACE_LIST:
            FILENAME = face + ".jpg"
            source_face, matches = compare_faces(bucket, FILENAME, bucket, key_target, threshold, region_name)
            for match in matches:
                sim = match['Similarity']
                message.append("simScore: " + str(sim))
                if sim >= MIN_SIM:
                    message.append("user name: " + str(face))
                    RECOGNIZED_FACE = True
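The matching loop can be separated from the AWS call so that it is easier to test. The sketch below is an illustration under stated assumptions: recognize and get_matches are hypothetical names, get_matches stands in for a call such as compare_faces(...)[1] that returns the list of matches for one reference image, and names are given without the .jpg extension. It returns the single best match instead of appending every match to the message list.

```python
def recognize(names, get_matches, min_sim=60.0):
    """Return (name, similarity) for the best match at or above min_sim, else None."""
    best = None
    for name in names:
        # get_matches is expected to return a list of dicts with a 'Similarity' value
        for match in get_matches(name + ".jpg"):
            sim = match["Similarity"]
            if sim >= min_sim and (best is None or sim > best[1]):
                best = (name, sim)
    return best
```

With the tutorial's functions, get_matches could be lambda key: compare_faces(bucket, key, bucket, key_target, threshold, region_name)[1], and a result of None corresponds to the unknown user case.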

11.) If the minimum similarity is not reached with any of the faces, we conclude it is an unknown user.

    if not RECOGNIZED_FACE:
        message.append("unknown user")

12.) Finally, we print our results using this final print line.

    print(','.join(message))

Whole Code:

    import boto3
    import picamera

    # Enter your own credentials and region; never commit real keys
    session = boto3.Session(aws_access_key_id="enter", aws_secret_access_key="enter", region_name="enter")
    s3 = session.client('s3')
    client = session.client('rekognition', 'enter region')
    bucket = 'yourbucketname'
    # Reference images in the bucket, listed without the ".jpg" extension
    FACE_LIST = ["name1", "name2", "name3"]
    key_target = "intruder.jpg"
    threshold = 0
    RECOGNIZED_FACE = False
    IS_FACE = False
    region_name = "enter"
    message = []
    MIN_SIM = 60.0

    # Take a picture and upload it to the bucket under key_target
    if __name__ == "__main__":
        with picamera.PiCamera() as camera:
            camera.resolution = (1280, 720)
            camera.capture('intruderface.jpg')
        s3.upload_file('intruderface.jpg', Bucket=bucket, Key=key_target)

    def detect_face(bucket, key, region_name="us-east-1", attributes=['ALL']):
        rekognition = session.client("rekognition", region_name)
        response = rekognition.detect_faces(
            Image={
                "S3Object": {
                    "Bucket": bucket,
                    "Name": key
                }
            },
            Attributes=attributes,
        )
        return response['FaceDetails']

    def compare_faces(bucket, key, bucket_target, key_target, threshold, region_name):
        rekognition = session.client("rekognition", region_name)
        response = rekognition.compare_faces(
            SourceImage={
                "S3Object": {
                    "Bucket": bucket,
                    "Name": key,
                }
            },
            TargetImage={
                "S3Object": {
                    "Bucket": bucket_target,
                    "Name": key_target,
                }
            },
            SimilarityThreshold=threshold,
        )
        return response['SourceImageFace'], response['FaceMatches']

    # Decide whether the uploaded picture contains a face
    for face in detect_face(bucket, key_target, region_name):
        conf = face['Confidence']
        if conf >= MIN_SIM:
            message.append("face detected")
            IS_FACE = True
        else:
            IS_FACE = False
            message.append("no face detected")

    # Compare the picture with every reference face in the bucket
    if IS_FACE:
        for face in FACE_LIST:
            FILENAME = face + ".jpg"
            source_face, matches = compare_faces(bucket, FILENAME, bucket, key_target, threshold, region_name)
            for match in matches:
                sim = match['Similarity']
                message.append("simScore: " + str(sim))
                if sim >= MIN_SIM:
                    message.append("user name: " + str(face))
                    RECOGNIZED_FACE = True

    if not RECOGNIZED_FACE:
        message.append("unknown user")

    print(','.join(message))

Authors

Katie Cardwell,

Andrew Koltz,

Ethan Shry (TA),

Johnny Strek

Group Link

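Smarter Door: https://classes.engineering.wustl.edu/ese205/core/index.php?title=Smarter_Door

Smarter Door Log: https://classes.engineering.wustl.edu/ese205/core/index.php?title=Smarter_Door_Log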