Difference between revisions of "Pi Car Discovery"

m (Protected "Pi Car Discovery" ([Edit=Allow only administrators] (indefinite) [Move=Allow only administrators] (indefinite))) |

|||

| (196 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

| − | This is | + | [https://classes.engineering.wustl.edu/ese205/core/index.php?title=Pi_Car_Discovery/Logs Weekly Logs] |

| + | |||

| + | = Project Proposal = | ||

| + | == Project Overview == | ||

| + | Nowadays, with the rapid development of technology, artificial intelligence has become highly valued and popular. High-tech companies have developed impressive technology: Tesla has self-driving cars, Google has built an instant translation tool, Apple has created Siri, and so on. With all the excitement and hype around A.I., our group decided to study and explore the Raspberry Pi Car. The goal of this project is to build a model car and use a Raspberry Pi to navigate it along certain trails. Our preliminary goal is for the car to follow a path clearly marked with black tape on a white floor. We will start with easy trails and then attempt harder tasks as we achieve our goals, either by increasing the complexity of the road or by adding more functions to the car. | ||

| + | |||

| + | |||

| + | [https://docs.google.com/presentation/d/1Ed41HrluTbkPlG12MQ1PVYfMlzX2B2v7rU2CiWZHpEA/edit#slide=id.p2 Presentation] | ||

| + | |||

| + | == Team Members == | ||

| + | * Yinghan Ma (James) | ||

| + | * Jiaqi Li (George) | ||

| + | * Zhimeng Gou (Zimon) | ||

| + | * David Tekien (TA) | ||

| + | * Jim Feher (Instructor) | ||

| + | |||

| + | == Objectives == | ||

| + | * Build a Pi Car | ||

| + | * Connect the car with Raspberry Pi wirelessly | ||

| + | * Interface the Raspberry Pi and Arduino with sensors and actuators | ||

| + | * Enable the car to move | ||

| + | * Navigate the car by following an easy, straight path clearly marked on a white floor with black tape | ||

| + | * Navigate the car by following a curved path | ||

| + | * Try harder paths in more complex environments | ||

| + | * Install a night light on the car | ||

| + | * Honk the horn when the car detects an obstacle in front | ||

| + | |||

| + | == Challenges == | ||

| + | * Learn how to use the Raspberry Pi and how it interacts with each electronic component | ||

| + | * Code in Python | ||

| + | * Understand the code in the software section | ||

| + | * Learn CAD and figure out how to 3D print accessories for Pi Car | ||

| + | |||

| + | == Budgets == | ||

| + | {| class="wikitable" | ||

| + | |- | ||

| + | ! Item | ||

| + | ! Description | ||

| + | ! Source URL | ||

| + | ! Price/unit | ||

| + | ! Quantity | ||

| + | ! Shipping/Tax | ||

| + | ! Total | ||

| + | |- | ||

| + | | Buggy Car | ||

| + | | Used as our pi car | ||

| + | | [http://www.activepowersports.com/dromida-1-18-buggy-2-4ghz-rtr-w-battery-charger-c0049/ Link] | ||

| + | | $99.99 | ||

| + | | 1 | ||

| + | | $0 | ||

| + | | $99.99 | ||

| + | |- | ||

| + | | Rotary Encoder | ||

| + | | | ||

| + | | [https://www.sparkfun.com/products/10932 Link] | ||

| + | | $39.95 | ||

| + | | 1 | ||

| + | | $0 | ||

| + | | 39.95 | ||

| + | |- | ||

| + | | Raspberry Pi | ||

| + | | | ||

| + | | [Provided] | ||

| + | | $0 | ||

| + | | 1 | ||

| + | | $0 | ||

| + | | $0 | ||

| + | |- | ||

| + | | 32GB MicroSD Card | ||

| + | | | ||

| + | | [Provided] | ||

| + | | $0 | ||

| + | | 1 | ||

| + | | $0 | ||

| + | | $0 | ||

| + | |- | ||

| + | IMU 9DoF Sensor Stick | ||

| + | | | ||

| + | | [https://www.sparkfun.com/products/13944 Link] | ||

| + | | $14.95 | ||

| + | | 1 | ||

| + | | $0 | ||

| + | | $14.95 | ||

| + | |- | ||

| + | | Raspberry Pi Camera Module V2 | ||

| + | | | ||

| + | | [https://www.sparkfun.com/products/14028 Link] | ||

| + | | $29.95 | ||

| + | | 1 | ||

| + | | $0 | ||

| + | | $29.95 | ||

| + | |- | ||

| + | | Brushed ESC Motor Speed Controller | ||

| + | | | ||

| + | | [https://www.amazon.com/Hobbypower-Brushed-Motor-Speed-Controller/dp/B00DU49XL0/ref=sr_1_2_sspa?ie=UTF8&qid=1537500234&sr=8-2-spons&keywords=Brushed+ESC+Motor+Speed+Controller&psc=1 Link] | ||

| + | | $8.95 | ||

| + | | 1 | ||

| + | | $0 | ||

| + | | $8.95 | ||

| + | |- | ||

| + | | TowerPro SG90 Micro Servo | ||

| + | | | ||

| + | | [https://www.amazon.com/KBYN-TowerPro-SG90-Micro-Servo/dp/B01608II3Q/ref=sr_1_3?ie=UTF8&qid=1537500305&sr=8-3&keywords=TowerPro+SG90+Micro+Servo&dpID=51yFqbET2aL&preST=_SY300_QL70_&dpSrc=srch Link] | ||

| + | | $7.29 | ||

| + | | 1 | ||

| + | | $0 | ||

| + | | $7.29 | ||

| + | |- | ||

| + | | TFMini- Micro LiDAR Module | ||

| + | | | ||

| + | | [https://www.sparkfun.com/products/14588 Link] | ||

| + | | $39.95 | ||

| + | | 1 | ||

| + | | $0 | ||

| + | | $39.95 | ||

| + | |- | ||

| + | | Current Sensors | ||

| + | | | ||

| + | | [https://www.amazon.com/HiLetgo-MAX471-Current-Consume-Detection/dp/B01N6AIG60/ref=sr_1_11_sspa?ie=UTF8&qid=1537500413&sr=8-11-spons&keywords=Current+Sensors&psc=1 Link] | ||

| + | | $6.39 | ||

| + | | 6 | ||

| + | | $0 | ||

| + | | $38.34 | ||

| + | |- | ||

| + | | Honk | ||

| + | | | ||

| + | | [https://www.amazon.com/Speaker-Computer-Powered-Multimedia-Notebook/dp/B075M7FHM1/ref=sr_1_3?ie=UTF8&qid=1537505355&sr=8-3&keywords=mini+honk Link] | ||

| + | | $11.99 | ||

| + | | 1 | ||

| + | | $0 | ||

| + | | $11.99 | ||

| + | |- | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | | $286.67 | ||

| + | |} | ||
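The Total cell can be checked by adding up the rows. The short Python sketch below is not part of the project code. It copies the prices and quantities from the table above, and shipping/tax is $0 on every row. By this count the line items add up to $291.36.

```python
from decimal import Decimal

# (item, unit price in USD as a string, quantity), copied from the budget table.
# Decimal avoids floating-point rounding errors in currency sums.
items = [
    ("Buggy Car", "99.99", 1),
    ("Rotary Encoder", "39.95", 1),
    ("Raspberry Pi", "0", 1),
    ("32GB MicroSD Card", "0", 1),
    ("IMU 9DoF Sensor Stick", "14.95", 1),
    ("Raspberry Pi Camera Module V2", "29.95", 1),
    ("Brushed ESC Motor Speed Controller", "8.95", 1),
    ("TowerPro SG90 Micro Servo", "7.29", 1),
    ("TFMini- Micro LiDAR Module", "39.95", 1),
    ("Current Sensors", "6.39", 6),
    ("Honk", "11.99", 1),
]

# Shipping/tax is $0 on every row, so the total is the sum of price * quantity.
total = sum((Decimal(price) * qty for _, price, qty in items), Decimal("0"))
print(f"Total: ${total}")
```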

| + | |||

| + | == Gantt Chart == | ||

| + | [[File:GanttChart1.png | frameless | 1000px | center]] | ||

| + | |||

| + | ==References== | ||

| + | [https://picar.readthedocs.io/en/latest/chapters/usage/mechanical.html Pi Car Project] | ||

| + | |||

| + | [http://shumeipai.nxez.com/ Pi Car Lab] | ||

| + | |||

| + | [http://www.ladyada.net/learn/arduino/ Arduino Tutorial] | ||

| + | |||

| + | [https://www.instructables.com/id/Raspberry-Pi-Arduino-Serial-Communication/ Serial Communication] | ||

| + | |||

| + | = Design and Solutions = | ||

| + | |||

| + | == Build the car == | ||

| + | Extra materials that we need: | ||

| + | * 3D printed layers | ||

| + | * 3D printed frame for fixing the encoder and the car | ||

| + | * Spacers for the layers | ||

| + | * Gear that fixes the encoder | ||

| + | * Spare central plastic gears as backups (they break easily) | ||

| + | The first step is to remove the original parts from the buggy car. | ||

| + | |||

| + | To get all the STL files and build the car step by step, see the Pi Car Project Setup guide. <ref>Pi Car Setup -[https://picar.readthedocs.io/en/latest/chapters/usage/mechanical.html#assembly]</ref> | ||

| + | |||

| + | == Pi Arduino communication == | ||

| + | We first used serial communication<ref>Serial Communication Graph -[https://www.instructables.com/id/Raspberry-Pi-Arduino-Serial-Communication/]</ref>, which only requires plugging a USB cable from the Pi into the Arduino<ref>Arduino Tutorial -[http://www.ladyada.net/learn/arduino/]</ref>. | ||

| + | ||

| + | Later, we found that the WASD file uses I2C communication, so we switched to I2C. | ||

| + | ||

| + | To install the Arduino software on the Pi, follow the Arduino Install page<ref>Arduino Install -[https://www.arduino.cc/en/Main/Software]</ref>. | ||

| + | |||

| + | |||

| + | |||

| + | Serial communication code (used only for testing, not in the final project): | ||

| + | * Arduino communicated with Raspberry Pi: upload the Arduino sketch, then run the Python script. | ||

| + | * The center picture shows how to find the Arduino's port. | ||

| + | [[File:AtoP.png|150px|left]] | ||

| + | [[File:AtoPi.png|500px|center]] | ||

| + | |||

| + | |||

| + | |||

| + | Pi communicated with Arduino: | ||

| + | * Python reads what the Arduino writes to it (here, the Arduino writes a sequence of numbers that increases with each iteration of its loop). | ||

| + | |||

| + | [[File:PtoA.png|150px|left]] | ||

| + | [[File:PitoA.png|500px|center]] | ||
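The Python side of the serial test can be sketched as follows. This is a minimal sketch, not the project's actual script (which appears only as a screenshot). It assumes the pyserial package, a port name of /dev/ttyACM0, and a baud rate of 9600. Match these to the port found above and to the Serial.begin() call in the Arduino sketch. The parsing function accepts any object whose readline() returns bytes, so it can be checked without an Arduino attached.

```python
import io


def read_numbers(stream, count):
    """Read up to `count` integers, one per line, from a byte stream.

    `stream` can be a pyserial Serial object or any object whose
    readline() returns bytes (b"" on timeout or end of data).
    """
    values = []
    while len(values) < count:
        raw = stream.readline()
        if not raw:  # timeout or end of stream: stop early
            break
        text = raw.decode("ascii", errors="ignore").strip()
        if text.isdigit():  # skip partial or garbled lines
            values.append(int(text))
    return values


def open_arduino(port="/dev/ttyACM0", baud=9600):
    """Open the Arduino's USB serial port (port name and baud rate are assumptions)."""
    import serial  # pyserial; imported here so read_numbers works without it

    return serial.Serial(port, baud, timeout=1)


# Offline check: an in-memory stream stands in for the Arduino
sample = read_numbers(io.BytesIO(b"0\n1\nxx\n2\n"), 3)
print(sample)
```

On the Pi, the same function can read from the board, e.g. `with open_arduino() as ser: print(read_numbers(ser, 10))`. Many Arduino boards reset when the serial port is opened, so the first numbers may arrive only after a short delay. The one-second timeout keeps readline() from blocking forever.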

| + | |||

| + | |||

| + | |||

| + | I2C communication approach<ref>I2C Communication Graph -[https://picar.readthedocs.io/en/latest/chapters/usage/electronics.html]</ref> (this is what we used in our project): | ||

| + | [[File:I2C.jpg|200px|left]] | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||
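For comparison, the Pi side of an I2C link can look like the sketch below. The WASD file's message format is not documented on this page, so the two-byte [speed, angle] command, the slave address 0x08, and bus 1 are all hypothetical. The sketch assumes the smbus2 package and an Arduino that joins the bus with Wire.begin(0x08) and reads the bytes in its Wire.onReceive handler.

```python
def pack_command(speed, angle):
    """Clamp and pack a hypothetical 2-byte command: [speed 0-255, angle 0-180]."""
    speed = max(0, min(255, int(speed)))
    angle = max(0, min(180, int(angle)))
    return [speed, angle]


def send_command(speed, angle, address=0x08, bus_number=1):
    """Send one command to the Arduino over I2C with smbus2.

    Assumptions (hypothetical): the Arduino is an I2C slave at `address`,
    and the Pi's user-facing I2C bus is bus 1.
    """
    from smbus2 import SMBus  # imported lazily so pack_command works without it

    with SMBus(bus_number) as bus:
        # The first byte on the wire is the "register" (0 here), then the data bytes.
        bus.write_i2c_block_data(address, 0, pack_command(speed, angle))


packet = pack_command(300, -5)  # out-of-range values are clamped
print(packet)
```

With this sketch, calling send_command(150, 90) on the Pi would transmit the register byte 0 followed by 150 and 90. Clamping in pack_command keeps each value within a single byte.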

| + | == ESC & Motor & Encoder & Arduino Connection == | ||

| + | Here is how we connected the ESC, motor, encoder, and Arduino (click the picture to view a clearer version). | ||

| + | |||

| + | [[File:zimonnew.png | frameless | 400px | center]] | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | == Make the car move == | ||

| + | * Run the WASD file to make the motor move. | ||

| + | * The Simpletimer library that the WASD file needs was not included in the provided libraries. | ||

| + | * We wrote Simpletimer.h ourselves. | ||

| + | * The encoder did not work well: it did not control the speed as it was supposed to. | ||

| + | * We made some changes to WASD.ino. | ||

| + | ||

| + | These files can be found on GitHub. <ref>Github - [https://github.com/Jiaqigeorgeli/PiCar/blob/master/linetrack.py/ github]</ref> | ||

| + | |||

| + | |||

| + | == Line tracking == | ||

| + | For line tracking, I used Python and OpenCV to find lines in real-time video. | ||

| + | |||

| + | The following techniques are used: | ||

| + | |||

| + | 1. Canny Edge Detection | ||

| + | |||

| + | 2. Hough Transform Line Detection | ||

| + | |||

| + | You will need a Raspberry Pi 3 with Python installed and a Pi camera (a webcam works too). | ||

| + | You also need to install OpenCV on your Raspberry Pi <ref>Installing OpenCV&Python - [https://www.pyimagesearch.com/2015/02/23/install-opencv-and-python-on-your-raspberry-pi-2-and-b/]</ref>. | ||

| + | If this is your first time accessing the Raspberry Pi camera with OpenCV and Python, we recommend these instructions <ref>Accessing OpenCV&Python - [https://www.pyimagesearch.com/2015/03/30/accessing-the-raspberry-pi-camera-with-opencv-and-python/ instruction]</ref>. The basic idea is to install the picamera module with NumPy array support, since OpenCV's Python bindings represent images as NumPy arrays. | ||

| + | ||

| + | If you are using a webcam, follow this tutorial <ref>Webcam - [https://www.hackster.io/Rjuarez7/line-tracking-with-raspberry-pi-3-python2-and-open-cv-9a9327/ link]</ref> for line detection. The code is given below: | ||

| + | |||

| + | <source lang="python"> | ||

| + | import sys | ||

| + | import time | ||

| + | import cv2 | ||

| + | import numpy as np | ||

| + | import os | ||

| + | </source> | ||

| + | We start by importing packages. | ||

| + | |||

| + | <source lang="python"> | ||

| + | Kernel_size=15 | ||

| + | low_threshold=40 | ||

| + | high_threshold=120 | ||

| + | |||

| + | rho=10 | ||

| + | threshold=15 | ||

| + | theta=np.pi/180 | ||

| + | minLineLength=10 | ||

| + | maxLineGap=1 | ||

| + | </source> | ||

| + | Next, we set the parameters for the blur, the Canny edge detector, and the Hough transform: | ||

| + | ||

| + | Kernel_size – size of the Gaussian blur kernel; it must be positive and odd. You will need to experiment to find the value that works best. | ||

| + | |||

| + | low_threshold – the first threshold for the hysteresis procedure. | ||

| + | |||

| + | high_threshold – the second threshold for the hysteresis procedure. | ||

| + | |||

| + | rho – Distance resolution of the accumulator in pixels. | ||

| + | |||

| + | theta – Angle resolution of the accumulator in radians. | ||

| + | |||

| + | threshold – Accumulator threshold parameter. Only lines that get more than threshold votes are returned. | ||

| + | |||

| + | minLineLength – The minimum line length. Line segments shorter than that are rejected. | ||

| + | |||

| + | maxLineGap – The maximum allowed gap between points on the same line to link them. | ||
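To show what rho, theta, and threshold control, the sketch below implements the classic (non-probabilistic) Hough accumulator in NumPy. It illustrates the idea and is not OpenCV's code. cv2.HoughLinesP is the probabilistic variant: it votes with a random subset of edge points and returns segment end points instead of (rho, theta) pairs. The accumulator resolution and the vote threshold play the same roles in both.

```python
from collections import Counter

import numpy as np


def hough_votes(points, rho_res, theta_res):
    """Classic Hough accumulator over (rho, theta) for a set of edge pixels.

    Every (x, y) point votes once per angle theta for the line
    rho = x*cos(theta) + y*sin(theta), with rho rounded to a multiple of rho_res.
    """
    pts = np.asarray(points, dtype=float)
    thetas = np.arange(0.0, np.pi, theta_res)
    # rhos[i, j] is the rho of point i at angle thetas[j]
    rhos = pts[:, :1] * np.cos(thetas) + pts[:, 1:2] * np.sin(thetas)
    rho_bins = np.round(rhos / rho_res).astype(int)
    votes = Counter()
    for j in range(len(thetas)):
        votes.update((int(b), j) for b in rho_bins[:, j])
    return votes, thetas


def peaks(points, rho_res, theta_res, threshold):
    """(rho, theta, votes) for every accumulator cell with more than `threshold` votes."""
    votes, thetas = hough_votes(points, rho_res, theta_res)
    return [(b * rho_res, float(thetas[j]), n)
            for (b, j), n in votes.items() if n > threshold]


# Ten edge pixels on the vertical line x = 5
vertical = [(5, y) for y in range(10)]
found = peaks(vertical, rho_res=1, theta_res=np.pi / 180, threshold=9)
print((5, 0.0, 10) in found)
```

With rho_res=1, all ten points on x = 5 land in the cell (rho=5, theta=0) and produce a peak with 10 votes. A coarser resolution, such as the rho=10 set above, can put lines that lie within roughly 10 pixels of each other into the same cell, which merges nearby parallel edges.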

| + | |||

| + | <source lang="python"> | ||

| + | # Initialize camera | ||

| + | video_capture = cv2.VideoCapture(0) | ||

| + | </source> | ||

| + | From there, we open the webcam and store a reference to it in '''video_capture'''. | ||

| + | <source lang="python"> | ||

| + | # Keep looping | ||

| + | while True: | ||

| + |     ret, frame = video_capture.read() | ||

| + | ||

| + |     time.sleep(0.1) | ||

| + | ||

| + |     gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY) | ||

| + | ||

| + |     blurred = cv2.GaussianBlur(gray, (Kernel_size, Kernel_size), 0) | ||

| + | </source> | ||

| + | Then we start a loop that continues until we press the q key. On each iteration, we call the '''read''' method of '''video_capture''', which returns a 2-tuple. The first entry, '''ret''', is a boolean indicating whether the frame was read successfully. The second, '''frame''', is the video frame itself. | ||

| + | |||

| + | |||

| + | After grabbing the current frame, we preprocess it a bit. We first convert the frame to grayscale, then blur it to reduce high-frequency noise so that we can focus on the structural objects in the frame. The bigger Kernel_size is, the blurrier the image becomes. | ||

| + | ||

| + | ||

| + | A bigger Kernel_size also takes more time to process. This is not noticeable on single test images, but it matters when processing live video, so prefer smaller values when the effect is similar. | ||
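The code passes 0 as the sigma of cv2.GaussianBlur, so OpenCV derives sigma from the kernel size. Its documentation gives the formula sigma = 0.3*((ksize-1)*0.5 - 1) + 0.8. The NumPy sketch below builds the corresponding 1-D weights. It shows why Kernel_size must be odd (the kernel needs a center pixel) and why a larger size blurs more. The sketch approximates OpenCV's kernel and does not reproduce it exactly, since OpenCV may use precomputed tables for very small sizes.

```python
import numpy as np


def gaussian_sigma(ksize):
    """Sigma that OpenCV's documentation gives for a kernel size when sigma <= 0."""
    return 0.3 * ((ksize - 1) * 0.5 - 1) + 0.8


def gaussian_kernel_1d(ksize):
    """Normalized 1-D Gaussian weights for an odd, positive kernel size."""
    if ksize <= 0 or ksize % 2 == 0:
        raise ValueError("kernel size must be positive and odd")
    sigma = gaussian_sigma(ksize)
    x = np.arange(ksize) - (ksize - 1) / 2  # offsets centered on the middle pixel
    weights = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return weights / weights.sum()  # weights sum to 1, so brightness is preserved


k15 = gaussian_kernel_1d(15)
print(round(gaussian_sigma(15), 2), len(k15))
```

For Kernel_size=15 this gives sigma = 2.6. The Gaussian blur is separable: it is applied once along rows and once along columns. Its cost per pixel therefore grows roughly linearly with the kernel size, which is one reason to prefer smaller values.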

| + | <source lang="python"> | ||

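| + |     # Canny recommended an upper:lower threshold ratio between 2:1 and 3:1 | ||

| + |     edged = cv2.Canny(blurred, low_threshold, high_threshold) | ||

| + |     # Probabilistic Hough transform. minLineLength and maxLineGap are passed by keyword | ||

| + |     # because the 5th positional parameter of cv2.HoughLinesP is the output array `lines`. | ||

| + |     lines = cv2.HoughLinesP(edged, rho, theta, threshold, minLineLength=minLineLength, maxLineGap=maxLineGap) | ||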

| + | </source> | ||

| + | Then we perform Canny edge detection. At an edge (e.g. the border of a line), the pixel intensity changes rapidly (e.g. from 0 to 255), and this change is what we want to detect. Two thresholds control the result. A pixel whose gradient is higher than high_threshold is accepted as an edge. A pixel whose gradient is lower than low_threshold is rejected. A pixel in between is kept only if it is connected to a strong edge pixel. A bigger high_threshold finds fewer edges, and a smaller one finds more. Choose low_threshold so that weak edges (noise) that are not connected to strong edges are discarded. Adjust these two parameters until the number of detected lines is acceptable. | ||
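The hysteresis rule above can be sketched in a few lines of NumPy. This is a simplified illustration, not OpenCV's implementation. It skips the gradient computation and non-maximum suppression that cv2.Canny performs, and starts from a ready-made gradient-magnitude array.

```python
from collections import deque

import numpy as np


def hysteresis(grad, low, high):
    """Boolean edge map from a gradient-magnitude array (simplified Canny step).

    Pixels above `high` are strong edges. Pixels between `low` and `high`
    are kept only if they connect (8-neighborhood, possibly through other
    kept pixels) to a strong edge. Everything below `low` is rejected.
    """
    grad = np.asarray(grad, dtype=float)
    strong = grad > high
    weak = (grad >= low) & ~strong
    edges = strong.copy()
    queue = deque(zip(*np.nonzero(strong)))  # start from every strong pixel
    rows, cols = grad.shape
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and weak[nr, nc] and not edges[nr, nc]:
                    edges[nr, nc] = True  # weak pixel touching an edge: keep it
                    queue.append((nr, nc))
    return edges


# low_threshold=40 and high_threshold=120, as in the parameters above
grad = np.array([[0, 50, 130, 0, 0, 50],
                 [0,  0,   0, 0, 0,  0]])
edges = hysteresis(grad, low=40, high=120)
print(edges.astype(int))
```

In the example, the pixel with value 50 next to the strong pixel (130) survives. The isolated 50 at the right edge is discarded, even though both lie between the two thresholds.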

| + | |||

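| + | Finally, we use '''cv2.HoughLinesP''' to detect lines in the edge image. Pass minLineLength and maxLineGap as keyword arguments. The fifth positional parameter of cv2.HoughLinesP is the optional output array lines, so passing these two values positionally shifts each one into the wrong parameter. | ||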

| + | |||

| + | <source lang="python"> | ||

| + |     # Draw circles at the center of the picture | ||

| + |     cv2.circle(frame,(320,240),20,(0,0,255),1) | ||

| + |     cv2.circle(frame,(320,240),10,(0,255,0),1) | ||

| + |     cv2.circle(frame,(320,240),2,(255,0,0),2) | ||

| + | ||

| + |     # With these loops, only a matrix of dots is painted on the picture | ||

| + |     #for y in range(0,480,20): | ||

| + |     #    for x in range(0,640,20): | ||

| + |     #        cv2.line(frame,(x,y),(x,y),(0,255,255),2) | ||

| + | ||

| + |     # With these loops, a grid is painted on the picture | ||

| + |     for y in range(0,480,40): | ||

| + |         cv2.line(frame,(0,y),(640,y),(255,0,0),1) | ||

| + |     for x in range(0,640,40): | ||

| + |         cv2.line(frame,(x,0),(x,480),(255,0,0),1) | ||

| + | </source> | ||

| + | |||

| + | This part of the code is optional, but it makes the displayed frame easier to read: we draw circles at the center of the picture and a grid over it. | ||

| + | |||

| + | <source lang="python"> | ||

| + |     # Draw lines on the input image | ||

| + |     if(lines != None): | ||

| + |         for x1,y1,x2,y2 in lines[0]: | ||

| + |             cv2.line(frame,(x1,y1),(x2,y2),(0,255,0),2) | ||

| + |         cv2.putText(frame,'lines_detected',(50,50),cv2.FONT_HERSHEY_SIMPLEX,1,(0,255,0),1) | ||

| + |         cv2.imshow("line detect test", frame) | ||

| + | ||

| + |     if cv2.waitKey(1) & 0xFF == ord('q'): | ||

| + |         break | ||

| + | ||

| + | # When everything is done, release the capture | ||

| + | ||

| + | video_capture.release() | ||

| + | cv2.destroyAllWindows() | ||

| + | </source> | ||

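| + | The last part draws the detected lines on the frame. If lines is not None, a loop draws each segment and the frame is displayed. Pressing q exits the loop, after which the capture is released and the window is closed. | ||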

| + | |||

| + | |||

| + | Our group uses the Pi camera, so the code needs to be modified. Otherwise, frames are not read properly from the video stream and the program fails with a NoneType error. Instead of cv2.VideoCapture, use the picamera Python package to access the Raspberry Pi camera module. | ||

| + | ||

| + | There are two ways to modify the code to replace the USB webcam with the Pi camera. | ||

| + | ||

| + | One is to swap out cv2.VideoCapture for the VideoStream class, which works with both the Raspberry Pi camera module and USB webcams. See this article for more details<ref>Camera More Details - [https://www.pyimagesearch.com/2016/01/04/unifying-picamera-and-cv2-videocapture-into-a-single-class-with-opencv/ more details] </ref>. | ||

| + | ||

| + | We took the other approach. First, we import the picamera classes: | ||

| + | |||

| + | <source lang="python"> | ||

| + | from picamera.array import PiRGBArray | ||

| + | from picamera import PiCamera | ||

| + | </source> | ||

| + | |||

| + | When initializing the camera, we comment out video_capture = cv2.VideoCapture(0) and use this code instead: | ||

| + | <source lang="python"> | ||

| + | #Initialize camera | ||

| + | camera = PiCamera() | ||

| + | camera.resolution = (640, 480) | ||

| + | camera.framerate = 10 | ||

| + | rawCapture = PiRGBArray(camera, size=(640, 480)) | ||

| + | </source> | ||

| + | |||

| + | We also change the "While True" loop into: | ||

| + | |||

| + | <source lang="python"> | ||

| + | for f in camera.capture_continuous(rawCapture, format="bgr", use_video_port=True): | ||

| + | </source> | ||

| + | |||

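| + | Finally, we comment out "ret, frame = video_capture.read()" and read the frame from the picamera buffer instead. The buffer must also be cleared with rawCapture.truncate(0) at the end of every iteration; otherwise picamera raises an error when it writes the next frame: | ||

| + | <source lang="python"> | ||

| + |     frame = f.array | ||

| + |     # ... process and display the frame as before ... | ||

| + |     rawCapture.truncate(0)  # clear the stream before the next frame | ||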

| + | </source> | ||

| + | |||

| + | Now you should be able to detect lines with the Pi camera. However, the result may not be satisfying. Sometimes the video window suddenly disappears and the terminal shows a NoneType error again. In addition, the lines in the window often look like sparse segments or even dots. These problems stem from how the result of HoughLinesP is handled. If you are interested in the HoughLinesP function, see the Feature Detection documentation <ref>Feature Detection - [https://docs.opencv.org/2.4/modules/imgproc/doc/feature_detection.html?highlight=houghlinesp#houghlinesp/ Feature Detection]</ref>. Basically, cv2.HoughLinesP returns an array of 4-element vectors (x1, y1, x2, y2), where (x1, y1) and (x2, y2) are the end points of each detected line segment. | ||

| + | ||

| + | ||

| + | A sample return value of cv2.HoughLinesP with one detected segment: | ||

| + | ||

| + | print(lines) prints [[[x1 y1 x2 y2]]] | ||

| + | ||

| + | ||

| + | When no line is visible to the camera, cv2.HoughLinesP returns None. The code cv2.imshow("line detect test", frame) sits inside the if (lines != None) block, so no frame is shown in that case, which is why the video window seems to disappear. To keep the window updating when no line is detected, we modified the code as below: | ||

| + | <source lang="python"> | ||

| + | if lines is not None: | ||

| + |     for x1,y1,x2,y2 in lines[0]: | ||

| + |         cv2.line(frame,(x1,y1),(x2,y2),(0,255,0),2) | ||

| + |     cv2.putText(frame,'lines_detected',(50,50),cv2.FONT_HERSHEY_SIMPLEX,1,(0,255,0),1) | ||

| + |     cv2.imshow("line detect test", frame) | ||

| + | else: | ||

| + |     cv2.putText(frame,'lines_undetected',(50,50),cv2.FONT_HERSHEY_SIMPLEX,1,(0,255,0),1) | ||

| + |     cv2.imshow("line detect test", frame) | ||

| + | </source> | ||

| + | |||

| + | Once we understood the return value of cv2.HoughLinesP, we could fix the loop inside the if statement. The old loop, "for x1,y1,x2,y2 in lines[0]:", only visits the first segment in the array, which explains the sparse line segments. The modified loop visits every segment: | ||

| + | |||

| + | <source lang="python"> | ||

| + | for line in lines: | ||

| + |     for x1,y1,x2,y2 in line: | ||

| + |         cv2.line(frame,(x1,y1),(x2,y2),(0,255,0),2) | ||

| + | </source> | ||
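The difference between the two loops is easy to see on a made-up array in the (N, 1, 4) layout that cv2.HoughLinesP returns in OpenCV 3 and later. In that layout lines[0] is only the first segment (shape (1, 4)), so the old loop draws a single segment per frame. The lines[0] pattern comes from older tutorials written for OpenCV 2.x, whose Python bindings returned the segments in a (1, N, 4) array. The coordinates below are invented for illustration.

```python
import numpy as np

# Made-up HoughLinesP result: three segments in the (N, 1, 4) layout
lines = np.array([[[10, 400, 60, 300]],
                  [[600, 400, 560, 300]],
                  [[300, 470, 310, 250]]], dtype=np.int32)

# Old loop: lines[0] has shape (1, 4), so only the first segment is visited
first = [(int(x1), int(y1), int(x2), int(y2)) for x1, y1, x2, y2 in lines[0]]

# Modified loop: iterate over every segment
everything = [(int(x1), int(y1), int(x2), int(y2))
              for line in lines
              for x1, y1, x2, y2 in line]

print(lines.shape, len(first), len(everything))
```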

| + | |||

| + | In our tests, setting both minLineLength and maxLineGap to 0 gave better results. | ||

| + | ||

| + | Now we can detect lines. The next question is how to find their center. | ||

| + | ||

| + | We declared two variables, x and y, both initialized to 0. In the innermost loop that draws the lines, we update x = x + x1 + x2 and y = y + y1 + y2. We then compute two more variables, xa = x / (2 × number of lines) and ya = y / (2 × number of lines). Each line contributes two end points, so (xa, ya) is the average of all the end points, i.e. the center of the detected lines. We can then tell whether the car is on the line by comparing (xa, ya) with the image center (320, 240). | ||
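The averaging step above can be written compactly with NumPy. The function name line_center is hypothetical, and this is a sketch rather than the code in our repository. It assumes the (N, 1, 4) array that cv2.HoughLinesP returns in OpenCV 3 and later, and a 640×480 frame whose center is (320, 240).

```python
import numpy as np


def line_center(lines):
    """Average of all segment end points (xa, ya), or None if there are no lines.

    `lines` is the cv2.HoughLinesP result: shape (N, 1, 4) with
    x1, y1, x2, y2 per segment, or None when nothing was found.
    """
    if lines is None or len(lines) == 0:
        return None
    segs = np.asarray(lines, dtype=float).reshape(-1, 4)
    n = len(segs)
    xa = (segs[:, 0] + segs[:, 2]).sum() / (2 * n)  # x = sum of x1 + x2
    ya = (segs[:, 1] + segs[:, 3]).sum() / (2 * n)  # y = sum of y1 + y2
    return float(xa), float(ya)


# Two vertical lines at x = 300 and x = 340: the center falls between them
two_lines = np.array([[[300, 0, 300, 480]], [[340, 0, 340, 480]]])
center = line_center(two_lines)
print(center)
```

The example also shows the behavior noted in the Results section: with two parallel lines, the center falls midway between them rather than on either line.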

| + | |||

| + | The final version of the code can be found on my GitHub. <ref>Github - [https://github.com/Jiaqigeorgeli/PiCar/blob/master/linetrack.py/ github]</ref> | ||

| + | |||

| + | == Combine OpenCV and WASD == | ||

| + | We combined the line-tracking code with the WASD Python file, so that the line-detection result determines the commands sent to the car. | ||
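One way to turn the line center into WASD-style commands is sketched below. The mapping and the 40-pixel dead band are assumptions for illustration and are not taken from the WASD file. The mapping is: 'a' to steer left, 'd' to steer right, 'w' to go straight, and 's' to stop when no line is visible. The sketch also assumes the camera faces forward without mirroring, so a line center left of x = 320 means the line is to the car's left.

```python
def steering_command(xa, frame_width=640, deadband=40):
    """Map the x-coordinate of the line center to a WASD-style key (hypothetical mapping).

    'a' = steer left, 'd' = steer right, 'w' = straight ahead,
    's' = stop because no line was detected (xa is None).
    """
    if xa is None:
        return "s"
    error = xa - frame_width / 2  # negative: line is left of the image center
    if error < -deadband:
        return "a"
    if error > deadband:
        return "d"
    return "w"


commands = [steering_command(x) for x in (None, 200, 330, 500)]
print(commands)
```

The dead band keeps the car from oscillating between left and right when the line is already roughly centered. Widening it trades steering precision for smoother driving.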

| + | |||

| + | == Conclusion Relationship == | ||

| + | * We first get the image from the Pi camera. | ||

| + | * Next, we use OpenCV in Pi to analyze the image. | ||

| + | * Then, we process the results and send the corresponding signals to the Arduino. | ||

| + | * Finally, the Arduino controls the car according to these signals (our commands). | ||

| + | [[File:relationship.png | frameless | 300px | center]] | ||

| + | |||

| + | = Results = | ||

| + | [[File:buildthecar.jpeg | 300px | right]] | ||

| + | * Build the car: | ||

| + | 1. Keep the original motor and servo. <br/> | ||

| + | 2. Remove the cover of Buggy. <br/> | ||

| + | 3. Remove the battery. <br/> | ||

| + | 4. Remove the ESC (Electronic Speed Controller). <br/> | ||

| + | 5. Add the encoder and the new ESC. <br/> | ||

| + | 6. Connect wires and set up the first layer. | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | * I2C communication: | ||

| + | 1. I2C communication is not very stable. Sometimes the Pi and Arduino suddenly lose connection. <br/> | ||

| + | 2. Serial communication (USB) is recommended. <br/> | ||

| + | [[File:connection.jpeg|140px|right]] | ||

| + | [[File:zimonnew.png|300px|center]] | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | [[File: 1st Move.mp4 | 300px | right]] | ||

| + | * Make the car move: | ||

| + | 1. Connect the wires correctly. <br/> | ||

| + | 2. Run the WASD code. <br/> | ||

| + | 3. Test: the car runs well, except that it is a little too fast.<br/> | ||

| + | How we solved the speed problem: <br/> | ||

| + | We changed WASD.ino to set the variable "control_speed = 150", which is the slowest speed of the motor. | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | [[File: 2nd Move.mp4 | 300px | right]] | ||

| + | * Make the servo move: | ||

| + | 1. Connect the wires correctly. <br/> | ||

| + | 2. Follow the command in the WASD code. <br/> | ||

| + | 3. Test: the servo worked pretty well. | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | [[File: Final Run.mp4 | 300px | right]] | ||

| + | * Line detection: | ||

| + | 1. Detects lines of any color, as long as they contrast with the background.<br/> | ||

| + | 2. Basic algorithm: compute the average point of the detected lines. <br/> | ||

| + | 3. Fun fact: when the camera detects two lines, it will follow the center of these two lines instead of following one of them. <br/> | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | [[File: Test Drive.mp4 | 300px | right]] | ||

| + | * Test drive: | ||

| + | 1. Test the car with a straight line ----------------------------------------- Successful <br/> | ||

| + | 2. Test the car with a curved right line ----------------------------------------- Successful<br/> | ||

| + | 3. Test the car with a curved left line ----------------------------------------- Successful<br/> | ||

| + | 4. Test the car with an S-shaped line ----------------------------------------- Successful <br/> | ||

| + | 5. Test the car with a 90 degrees corner-shaped line ----------------------------------------- Fail <br/> | ||

| + | Reason for failure: a 90-degree corner is too sharp. Once the car reaches the corner, the camera can no longer see where the line continues. Mounting the camera higher, so that it sees a wider area, should make this task achievable. | ||

| − | |||

| − | == | + | == Discussion == |

| + | Overall, the project was successful. We met most of the objectives we set at the beginning of the semester. The car moves smoothly, and it can detect shapes and follow lines easily. Although the encoder does not work well, we can still control the speed by modifying the WASD code. | ||

| + | ||

| + | Our main mistake was underestimating the difficulty of the project. In the beginning, we thought it would go smoothly since there are many materials online, and we even considered doing self-parking. However, we met many difficulties throughout the semester: the Simpletimer library needed by the WASD file was missing; the encoder did not work well; we ran into many OpenCV bugs; the camera was easily disturbed by the surroundings; and the Pi and battery died in the final week. As a result, we did not finish self-parking or add the extra features (such as the night light and horn) to our project. | ||

| + | ||

| + | The obstacles fall into two areas: our limited knowledge of and experience in computer programming, and the quality of the materials. We could further improve the OpenCV code by ignoring edges in the surroundings and focusing only on the ground. With more programming experience, we could also have fixed bugs more efficiently, finished the project faster, and moved on to harder problems. As for the materials, an extended Pi camera would give better shape detection. If the materials had been in good shape, we would not have fallen behind in the final week and could have made more progress. | ||

| + | ||

| + | Next steps: switch back to serial communication; further improve the OpenCV code so that it ignores the surroundings; and stop automatically when an obstacle appears. | ||
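The first OpenCV improvement, ignoring the surroundings, is often done with a region-of-interest mask applied to the edge image before the Hough transform. The sketch below is a simple rectangular version that keeps only the bottom part of the frame, where the floor is, assuming a forward-facing camera. The function name keep_bottom and the 0.5 fraction are hypothetical choices. A polygon mask drawn with cv2.fillPoly is a common, more flexible alternative.

```python
import numpy as np


def keep_bottom(edges, fraction=0.5):
    """Return a copy of `edges` with everything above the bottom `fraction` zeroed."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    masked = np.zeros_like(edges)
    rows = edges.shape[0]
    start = rows - int(round(rows * fraction))  # first row that is kept
    masked[start:] = edges[start:]
    return masked


# A tiny all-white "edge image": only the bottom two of four rows survive
demo = keep_bottom(np.full((4, 3), 255, dtype=np.uint8), fraction=0.5)
print(demo)
```

In the tracking loop, the mask would go between the two detection steps: edged = keep_bottom(cv2.Canny(blurred, low_threshold, high_threshold)), followed by cv2.HoughLinesP on the masked image.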

| + | |||

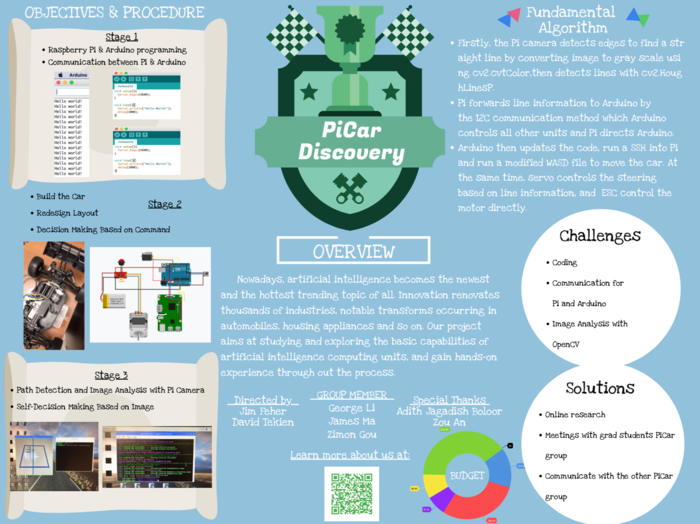

| + | == Poster == | ||

| + | [[File:picardiscovery.png | center | 700px]] | ||

| + | |||

| + | |||

| + | ==References== | ||

| + | |||

[[Category:Projects]] | [[Category:Projects]] | ||

[[Category:Fall 2018 Projects]] | [[Category:Fall 2018 Projects]] | ||

Latest revision as of 16:41, 17 December 2018

Project Proposal

Project Overview

Nowadays, with the fast development of technology, artificial intelligence becomes highly valued and popular. High-tech companies have developed incredible technology. Some notable examples are Tesla with self-driving cars, Google has invented its instant translation machine, Apple has created Siri, etc. With all the excitement and hype of A.I, our group decided to study and explore the Raspberry Pi Car. The goal of this project is to build a model car and use Raspberry Pi to navigate through certain trails. For example, our preliminary goal is for the car to navigate a path clearly marked on a white floor with black tape. We will try some easy trails first, then we will attempt harder tasks as we achieve our goals. We can either increase the complexity of road or add more functions to the car.

Team Members

- Yinghan Ma (James)

- Jiaqi Li (George)

- Zhimeng Gou (Zimon)

- David Tekien (TA)

- Jim Feher (Instructor)

Objectives

- Build a Pi Car

- Connect the car with Raspberry Pi wirelessly

- Interface the Raspberry Pi and Arduino with sensors and actuators

- Enable the car to move

- Navigate the car by following an easy, straight path clearly marked on a white floor with black tape

- Navigate the car by following a curved path

- try some harder path with complex environment

- Install Night Light into the car

- Honk the horn when the car detecting barriers at the front

Challenges

- Learn how to use Raspberry Pi and how Pi interact with each electronical component

- Code with Python.

- Understand the meaning of the code in software section

- Learn CAD and figure out how to 3D print accessories for Pi Car

Budgets

| Item | Description | Source URL | Price/unit | Quantity | Shipping/Tax | Total |

|---|---|---|---|---|---|---|

| Buggy Car | Used as our pi car | Link | $99.99 | 1 | $0 | $99.99 |

| Rotary Encoder | Link | $39.95 | 1 | $0 | 39.95 | |

| Raspberry Pi | [Provided] | $0 | 1 | $0 | $0 | |

| 32GB MicroSD Card | [Provided] | $0 | 1 | $0 | $0 | |

| IMU 9DoF Senor Stick | Link | $14.95 | 1 | $0 | $14.95 | |

| Raspberry Pi Camera Module V2 | Link | $29.95 | 1 | $0 | $29.95 | |

| Brushed ESC Motor Speed Controller | Link | $8.95 | 1 | $0 | $8.95 | |

| TowerPro SG90 Micro Servo | Link | $7.29 | 1 | $0 | $7.29 | |

| TFMini- Micro LiDAR Module | Link | $39.95 | 1 | $0 | $39.95 | |

| Current Sensors | Link | $6.39 | 6 | $0 | $38.34 | |

| Honk | Link | $11.99 | 1 | $0 | $11.99 | |

| $286.67 |

Gantt Chart

References

Design and Solutions

Build the car

Extra Materials that we need:

- 3D printed layers

- 3D printed frame for fixing the encoder and the car

- Spacers for the layers

- Gear that fixes the encoder

- Some plastic central plastic gears for back up (since they broke easily)

The first thing we have to do is to remove the original parts from the Buggy car.

To get all the STL files, and to build the car step by step, please click: Pi Car Project Setup. [1]

Pi Arduino communication

We used serial communication [2] first. It can be done easily by plugging in USB wire from Pi to Arduino [3].

Later on, we found the WASD file used I2C communication. Thus we switched to I2C communication.

To install Arduino software on the Pi, please follow this useful website: Arduino Install [4]

Serial Communication code: (This is for our test, not for the project)

- Arduino communicated with Raspberry Pi: Upload the Arduino file. Next, run the Python file.

- The central picture shows how to find the port of the Arduino.

Pi communicated with Arduino:

- Python reads what the Arduino writes to it (Here, the Arduino writes a sequence of numbers, which increases after each iteration in the loop)

I2C communication approach: [5] (This is what we used in our project)

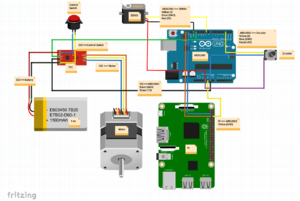

ESC & Motor & Encoder & Arduino Connection

Here is how we connect the ESC, motor, encoder, and Arduino.(Double click the picture to get a clear version)

Make the car move

- Run WASD file to make the motor move.

- The Simpletimer was not included in the library.

- The Simpletimer.h was created by ourselves.

- Encoder did not work very well. It did not control the speed as it supposed to be.

- Some changes were made in the WASD.ino.

Those files can be found in Github. [6]

Line tracking

In the line tracking process. I used Python and OpenCV to find lines in a real-time video.

The following techniques are used:

1. Canny Edge Detection

2. Hough Transform Line Detection

You will need a Raspberry Pi 3 with Python installed and a Pi camera (a webcam also works). You also need to install OpenCV on the Raspberry Pi [7]. If this is your first time accessing the Raspberry Pi camera with OpenCV and Python, we recommend reading [8]. To interface with the camera module from Python, install the picamera module with NumPy array support. OpenCV's Python bindings represent images as NumPy arrays, so this support is required.

If you are using a webcam, follow this guide [9] to implement line detection. The code is given below:

```python
import sys
import time
import cv2
import numpy as np
import os
```

We start by importing packages.

```python
Kernel_size = 15
low_threshold = 40
high_threshold = 120
rho = 10
threshold = 15
theta = np.pi / 180
minLineLength = 10
maxLineGap = 1
```

Next, we set some important parameters. They mean the following:

Kernel_size – the kernel size for GaussianBlur. It must be positive and odd. You will need to experiment to find the value that works best.

low_threshold – the first threshold for the hysteresis procedure.

high_threshold – the second threshold for the hysteresis procedure.

rho – Distance resolution of the accumulator in pixels.

theta – Angle resolution of the accumulator in radians.

threshold – Accumulator threshold parameter. Only lines that get more than threshold votes are returned.

minLineLength – The minimum line length. Line segments shorter than that are rejected.

maxLineGap – The maximum allowed gap between points on the same line to link them.
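Here is a minimal NumPy sketch of the voting step of the standard Hough transform, which makes rho, theta and threshold concrete. It is not what cv2.HoughLinesP does internally, because the probabilistic version samples points and returns segments. The parameters play the same roles, though. Each edge point votes for every (rho, theta) cell it could lie on, and only cells with more than threshold votes become lines.

```python
import numpy as np


def hough_votes(points, rho_res=1.0, theta_res=np.pi / 180):
    """Standard (non-probabilistic) Hough voting for a list of (x, y) edge points.

    Every point votes once per theta for the line rho = x*cos(theta) + y*sin(theta)
    through it; rho is quantized into bins of width rho_res.
    Returns the theta values and a dict {(rho_bin, theta_index): votes}.
    """
    thetas = np.arange(0.0, np.pi, theta_res)
    cos_t, sin_t = np.cos(thetas), np.sin(thetas)
    votes = {}
    for x, y in points:
        rho_bins = np.round((x * cos_t + y * sin_t) / rho_res).astype(int)
        for theta_index, rho_bin in enumerate(rho_bins):
            key = (int(rho_bin), theta_index)
            votes[key] = votes.get(key, 0) + 1
    return thetas, votes


def detected_lines(points, threshold, rho_res=1.0, theta_res=np.pi / 180):
    """(rho, theta) of every accumulator cell with more than `threshold` votes."""
    thetas, votes = hough_votes(points, rho_res, theta_res)
    return [(rho_bin * rho_res, float(thetas[theta_index]))
            for (rho_bin, theta_index), count in votes.items()
            if count > threshold]
```

Running it on ten points of the horizontal line y = 5 gives a cell at rho = 5, theta = pi/2 with ten votes. Neighbouring angles close to pi/2 collect the same ten votes because each rho bin is wide enough to hold all ten points. On a small scale, this shows why a coarse rho (our code uses rho=10) can report several nearly identical lines for one strip of tape.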

```python
# Initialize camera
video_capture = cv2.VideoCapture(0)
```

Next, we grab a reference to the webcam through the video_capture object.

```python
# Keep looping
while True:
    ret, frame = video_capture.read()
    time.sleep(0.1)
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    blurred = cv2.GaussianBlur(gray, (Kernel_size, Kernel_size), 0)
```

This loop continues until we press the q key. The read method of video_capture returns a 2-tuple. The first entry, ret, is a boolean indicating whether the frame was read successfully. The second entry, frame, is the video frame itself.

After grabbing the current frame, we preprocess it. We first convert the frame to grayscale. We then blur it to reduce high-frequency noise so that we can focus on the structural objects in the frame. The larger Kernel_size is, the blurrier the image becomes.

A larger Kernel_size also takes more time to process. The difference is not noticeable on test images, but it matters when processing video in real time. We should therefore prefer smaller values when the effect is similar.

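```python
    # Canny: recommended upper:lower threshold ratio is between 2:1 and 3:1
    edged = cv2.Canny(blurred, low_threshold, high_threshold)

    # Perform the probabilistic Hough line transform.
    # minLineLength and maxLineGap must be passed by keyword: the 5th positional
    # argument of cv2.HoughLinesP is the optional output array `lines`.
    lines = cv2.HoughLinesP(edged, rho, theta, threshold,
                            minLineLength=minLineLength, maxLineGap=maxLineGap)
```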

Then we perform Canny edge detection. At an edge (for example, the border of a line), pixel intensity changes rapidly (e.g. from 0 to 255), and that is what we want to detect. A pixel whose gradient is higher than high_threshold is considered an edge, and a pixel whose gradient is lower than low_threshold is rejected. Pixels in between are kept only if they are connected to a strong edge. A higher high_threshold finds fewer edges, and a lower one finds more. Choose low_threshold so that weak, noisy edges are discarded while weak pixels that continue a strong edge are kept. Adjust these two parameters until the number of detected lines is acceptable.
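The hysteresis rule can be illustrated with a small NumPy sketch. It implements only the final double-threshold step of Canny on a precomputed gradient-magnitude array. The real cv2.Canny also computes the gradients and thins edges with non-maximum suppression first. Its exact comparison operators may also differ from OpenCV's.

```python
import numpy as np


def hysteresis(gradient, low, high):
    """Double-threshold edge tracking, the last step of Canny.

    Pixels above `high` are strong edges. Pixels at or above `low` are kept only
    if they connect (8-neighbourhood) to a strong edge; everything else is dropped.
    Returns a boolean edge map with the same shape as `gradient`.
    """
    gradient = np.asarray(gradient)
    strong = gradient > high
    weak = gradient >= low
    edges = strong.copy()
    h, w = edges.shape
    while True:
        # Dilate the current edge map by one pixel in all 8 directions.
        padded = np.pad(edges, 1)
        grown = np.zeros_like(edges)
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                grown |= padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
        new_edges = grown & weak  # only pixels above low_threshold may join
        if np.array_equal(new_edges, edges):
            return edges
        edges = new_edges
```

Take a one-row gradient [0, 50, 50, 200, 0, 50] with low=40 and high=120. The two 50s next to the strong 200 are kept, while the isolated 50 at the end is discarded.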

Finally, we use cv2.HoughLinesP to detect lines in the edge image.

```python
    # Draw circles in the center of the picture
    cv2.circle(frame, (320, 240), 20, (0, 0, 255), 1)
    cv2.circle(frame, (320, 240), 10, (0, 255, 0), 1)
    cv2.circle(frame, (320, 240), 2, (255, 0, 0), 2)

    # With these for loops, only a matrix of dots is painted on the picture
    # for y in range(0, 480, 20):
    #     for x in range(0, 640, 20):
    #         cv2.line(frame, (x, y), (x, y), (0, 255, 255), 2)

    # With these for loops, a grid is painted on the picture
    for y in range(0, 480, 40):
        cv2.line(frame, (0, y), (640, y), (255, 0, 0), 1)
    for x in range(0, 640, 40):
        cv2.line(frame, (x, 0), (x, 480), (255, 0, 0), 1)
```

This part of the code is not necessary, but it makes the displayed frame easier to read. We draw circles at the center of the picture and a grid over it.

```python
    # Draw lines on the input image
    if lines != None:
        for x1, y1, x2, y2 in lines[0]:
            cv2.line(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
        cv2.putText(frame, 'lines_detected', (50, 50), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 1)
        cv2.imshow("line detect test", frame)

    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# When everything is done, release the capture
video_capture.release()
cv2.destroyAllWindows()
```

The last part draws the detected lines on the picture. If lines is not None, we loop over it and draw each line.

Our group used the Pi camera, so the code needed to be modified. Otherwise, it shows a NoneType error because no frame is read from the video stream. In our setup, cv2.VideoCapture could not read from the Raspberry Pi camera module, so the picamera Python package has to be used instead.

There are two ways to modify the code to replace the USB webcam with the Pi camera.

One is to swap cv2.VideoCapture for VideoStream (from the imutils package), which works with both the Raspberry Pi camera module and USB webcams. See [10] for more details.

We took the other approach. First, we import the picamera classes:

```python
from picamera.array import PiRGBArray
from picamera import PiCamera
```

When initializing the camera, we comment out video_capture = cv2.VideoCapture(0) and use the code below instead:

```python
# Initialize camera
camera = PiCamera()
camera.resolution = (640, 480)
camera.framerate = 10
rawCapture = PiRGBArray(camera, size=(640, 480))
```

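We also change the "while True" loop into:

```python
for f in camera.capture_continuous(rawCapture, format="bgr", use_video_port=True):
```

Finally, we comment out "ret, frame = video_capture.read()" and add:

```python
    frame = f.array
```

At the end of each loop iteration, rawCapture.truncate(0) must also be called to clear the buffer for the next frame. Without it, picamera raises an error when it tries to write the second frame.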

Now you should be able to run line detection with the Pi camera. However, the result may not be satisfying. Sometimes the video window suddenly disappears and the terminal shows a NoneType error again. The lines in the window also often appear as sparse segments or even dots. These problems come from how we handled the output of cv2.HoughLinesP (see Feature Detection [11] for details of the function). cv2.HoughLinesP returns an array of 4-element vectors (x1, y1, x2, y2), where (x1, y1) and (x2, y2) are the end points of each detected line segment.

A sample return value of cv2.HoughLinesP, printed with print(lines) when one segment is detected:

```
[[[a b c d]]]
```

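When no line is visible to the camera, cv2.HoughLinesP returns None. cv2.imshow("line detect test", frame) sits inside the if statement that checks lines != None, so no frame is shown in that case. That is why the video window suddenly disappears. To keep the window when no line is detected, we modified the code as follows. Note that `lines is not None` is also the correct test. In current NumPy versions, comparing an array with `lines != None` is evaluated element by element, and using that result in an if statement raises a ValueError.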

```python
    if lines is not None:
        for x1, y1, x2, y2 in lines[0]:
            cv2.line(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
        cv2.putText(frame, 'lines_detected', (50, 50), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 1)
        cv2.imshow("line detect test", frame)
    else:
        cv2.putText(frame, 'lines_undetected', (50, 50), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 1)
        cv2.imshow("line detect test", frame)
```

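Once we understood the format of this return value, we also modified the loop in the if statement. The old loop, "for x1,y1,x2,y2 in lines[0]:", only visits lines[0]. With the (N, 1, 4) layout returned by OpenCV 3 and later, lines[0] holds just the first detected segment, so only sparse segments were drawn. The modified loop is:

```python
        for line in lines:
            for x1, y1, x2, y2 in line:
                cv2.line(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
```

We got better results with both minLineLength and maxLineGap set to 0.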
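The difference between the two loops can be checked without a camera. The sketch below builds a stand-in for the cv2.HoughLinesP output by hand. It assumes the (N, 1, 4) layout returned by OpenCV 3.x and later, and the segment coordinates are made up.

```python
import numpy as np

# Hand-made stand-in for a cv2.HoughLinesP result with three segments,
# using the (N, 1, 4) layout: one [x1, y1, x2, y2] row per segment.
lines = np.array([[[10, 20, 30, 40]],
                  [[50, 60, 70, 80]],
                  [[90, 100, 110, 120]]], dtype=np.int32)


def first_row_only(lines):
    """What the original loop `for x1, y1, x2, y2 in lines[0]:` visits."""
    return [tuple(int(v) for v in seg) for seg in lines[0]]


def all_segments(lines):
    """What the modified nested loop visits; None means nothing was detected."""
    if lines is None:
        return []
    return [tuple(int(v) for v in seg) for line in lines for seg in line]


print(lines.shape)               # (3, 1, 4)
print(first_row_only(lines))     # [(10, 20, 30, 40)]
print(len(all_segments(lines)))  # 3
```

Only one of the three segments is reached through lines[0]. This matches the sparse drawing we saw before changing the loop.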

Now we can detect lines. The next question is how to find their center.

We declare two variables, x and y, both initialized to 0. In the innermost loop that draws the lines, we update x = x + x1 + x2 and y = y + y1 + y2. Then, in the outer loop, we compute two more variables: xa = x / (2 * number of lines) and ya = y / (2 * number of lines). The point (xa, ya) is the average of all segment end points, that is, the center of the detected lines. We can therefore tell whether the car is on the line by comparing (xa, ya) with the frame center (320, 240).
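The averaging described above can be written as a small function. This is a sketch of the same arithmetic, not our exact code. It takes a flat list of (x1, y1, x2, y2) segments, for example collected inside the nested drawing loop. With two parallel lines, the center lands halfway between them, which matches the "fun fact" in the results.

```python
def lines_center(segments):
    """Average of all segment end points: (xa, ya), or None if there are none.

    `segments` is a flat list of (x1, y1, x2, y2) tuples. Each segment adds two
    end points, hence the division by 2 * number of segments.
    """
    if not segments:
        return None
    x = y = 0
    for x1, y1, x2, y2 in segments:
        x += x1 + x2
        y += y1 + y2
    n = len(segments)
    return x / (2 * n), y / (2 * n)


def offset_from_frame_center(center, frame_center=(320, 240)):
    """Offset of (xa, ya) from the frame center; negative x means left of center."""
    xa, ya = center
    return xa - frame_center[0], ya - frame_center[1]


# Two vertical lines at x = 300 and x = 340: the center falls halfway between them.
print(lines_center([(300, 0, 300, 480), (340, 0, 340, 480)]))  # (320.0, 240.0)
```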

The final version of the code is on GitHub [12].

Combine OpenCV and WASD

We combined the line-tracking code with the WASD Python file.

Conclusion Relationship

- We first get the image from the Pi camera.

- Next, we use OpenCV in Pi to analyze the image.

- Then, we process the data and send the corresponding signals to the Arduino.

- Finally, the Arduino controls the car according to the signal (our command).
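The third step, turning the line position into a command for the Arduino, can be sketched as follows. The command letters (w = forward, a = left, d = right) and the 40-pixel dead band are hypothetical. They are not taken from the WASD file and would need to match the commands the Arduino sketch actually accepts. The sketch also assumes a forward-facing, unmirrored camera, so a line right of the image center means the car should steer right.

```python
def choose_command(center, frame_width=640, dead_band=40):
    """Map the line center (xa, ya) to a hypothetical WASD-style command.

    Returns "w" (drive straight) when the line is within `dead_band` pixels of
    the image center, "a" (steer left) or "d" (steer right) otherwise, and None
    when no line was found so the caller can decide what to do (e.g. stop).
    """
    if center is None:
        return None
    error = center[0] - frame_width / 2  # > 0: line is right of the image center
    if error < -dead_band:
        return "a"
    if error > dead_band:
        return "d"
    return "w"
```

Only xa is used because steering only corrects the left-right position. The dead band is meant to stop the car from constantly correcting around a line that is already nearly centered.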

Results

- Build the car:

1. Keep the original motor and servo.

2. Remove the cover of Buggy.

3. Remove the battery.

4. Remove the ESC (Electronic Speed Control).

5. Add the encoder and a new ESC.

6. Connect wires and set up the first layer.

- I2C communication:

1. I2C communication is not very stable. Sometimes the Pi and Arduino suddenly lose connection.

2. Serial communication (USB) is recommended.

- Make the car move:

1. Connect the wires correctly.

2. Run the WASD code.

3. Test: the car runs pretty well, except that it is a little too fast.

How to solve the speed problem:

We changed WASD.ino to set the variable "control_speed = 150", which is also the motor's slowest speed.

- Make the servo move:

1. Connect the wires correctly.

2. Follow the command in the WASD code.

3. Test: the servo worked pretty well.

- Line detection:

1. Detects lines of any color, as long as they contrast in brightness with the background (the frame is converted to grayscale before edge detection).

2. Basic algorithm: compute the average point of the detected lines.

3. Fun fact: when the camera detects two lines, it will follow the center of these two lines instead of following one of them.

- Test drive:

1. Test the car with a straight line: Successful

2. Test the car with a right curve: Successful

3. Test the car with a left curve: Successful

4. Test the car with an S-shaped line: Successful

5. Test the car with a 90-degree corner: Failed

Reason for failure: a 90-degree corner is so sharp that, once the car reaches it, the camera can no longer see where the line continues. If we mounted the camera higher, it would see a wider area and should be able to complete this task.
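The effect of camera height can be estimated with basic trigonometry. Consider a camera at height h whose optical axis is tilted forward from straight down, with vertical field of view vfov. The ground it sees along the direction of travel runs from h*tan(tilt - vfov/2) to h*tan(tilt + vfov/2). Both distances grow linearly with h, so raising the camera lets a sharp corner enter the frame sooner. The sketch assumes a flat floor and a pinhole camera. Our car's actual camera height, tilt and field of view were not measured, so the numbers in the example are arbitrary.

```python
import math


def ground_view(height, tilt_deg, vfov_deg):
    """Near and far ground distances seen by a forward-tilted camera.

    height: camera height above a flat floor (any length unit)
    tilt_deg: angle of the optical axis, measured from straight down
    vfov_deg: vertical field of view of the camera
    Returns (near, far) in the same unit as height, measured along the floor
    from the point directly below the camera. A negative near value means the
    bottom edge of the image sees behind that point; far is math.inf when the
    top edge of the image reaches the horizon.
    """
    bottom = math.radians(tilt_deg - vfov_deg / 2)
    top = math.radians(tilt_deg + vfov_deg / 2)
    near = height * math.tan(bottom)
    far = math.inf if top >= math.pi / 2 else height * math.tan(top)
    return near, far
```

For example, ground_view(20, 30, 40) returns exactly twice the distances of ground_view(10, 30, 40). Plugging in the real mounting height, tilt and the camera's specified field of view would show how far ahead the car can see a corner.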

Discussion

Overall, the project was successful. We met most of the objectives we set at the beginning of the semester. The car moves smoothly, and it can detect shapes and follow lines easily. Although the encoder does not work well, we can still control the speed by modifying the WASD code.

Where we fell short was in underestimating the difficulty of the project. At the beginning, we expected it to go smoothly because there are many resources online, and we even considered self-parking. However, we met many difficulties throughout the semester:

- The Simpletimer library needed by the WASD file was missing.
- The encoder did not work well.
- We ran into many bugs with OpenCV.
- The camera was easily disturbed by its surroundings.
- The Pi and the battery died in the final week.

As a result, we did not finish self-parking or add extra features (such as a nightlight and a honk) to the project.

The obstacles fall into two areas: our limited knowledge and experience in computer programming, and the quality of the materials. We could further improve the OpenCV processing by ignoring edges in the surroundings and focusing only on the ground. With more programming experience, we could also have fixed bugs more efficiently, finished the project faster, and moved on to harder problems. As for the materials, a longer Pi camera cable would have given better results in shape detection. If the materials had held up, we would not have been delayed during the final week and could have made more progress.

Next steps:

- Switch back to serial communication.
- Improve the OpenCV processing so that it ignores the surroundings.
- Stop automatically when an obstacle appears.
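One simple way to ignore the surroundings is to blank out the upper part of the edge image before line detection. This is sketched below as an assumption, not something we implemented. It relies on a forward-and-down camera, where the floor occupies the lower rows of the frame. The keep_fraction value is a hypothetical tuning parameter.

```python
import numpy as np


def mask_to_ground(edges, keep_fraction=0.5):
    """Zero out everything above the bottom `keep_fraction` of an edge image.

    Assumes the lower rows show the floor and the upper rows show the
    surroundings. Rows are blanked, not cropped, so pixel coordinates
    (and the comparison with the frame center) are unchanged.
    """
    if not 0 < keep_fraction <= 1:
        raise ValueError("keep_fraction must be in (0, 1]")
    height = edges.shape[0]
    first_kept_row = int(round(height * (1 - keep_fraction)))
    masked = np.zeros_like(edges)
    masked[first_kept_row:] = edges[first_kept_row:]
    return masked
```

In the frame loop, this would be applied to the Canny output, for example `lines = cv2.HoughLinesP(mask_to_ground(edged), rho, theta, threshold, minLineLength=minLineLength, maxLineGap=maxLineGap)`.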

Poster

References

1. Pi Car Setup

2. Serial Communication Graph

3. Arduino Tutorial

4. Arduino Install

5. I2C Communication Graph

6. GitHub

7. Installing OpenCV & Python

8. Accessing OpenCV & Python

9. Webcam

10. Camera: More Details

11. Feature Detection

12. GitHub