FingerSpark

Revision as of 18:46, 19 February 2016

A project by David Battel and Connor Goggins.

Overview

FingerSpark, our product, will be a program that allows a camera to track a user’s hand and individual fingers. The user will wear a glove with each finger painted a different color, making it easier for the camera to pinpoint the two-dimensional location of each finger. To use our product, the user will hold his hand 2-3 feet in front of the camera, with a white backdrop behind it. The camera will be connected to a Raspberry Pi B+, which will run our program and gather the data from the camera. Together they form an integrated system that will be mounted on a tripod for the user’s convenience. The Raspberry Pi Camera Module supports 1080p video at 30 frames/second and 720p video at 60 frames/second. Either mode should be more than sufficient for the purpose of our project: detecting the movement of brightly colored points approximately 2-3 feet from the camera.
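As a rough illustration of why the colored glove, black base color, and white backdrop help, here is a minimal sketch of one way to locate each fingertip in a single frame. It is not our final implementation. It assumes a frame arrives as rows of (r, g, b) tuples with values 0-255. It classifies each pixel by hue using Python's standard `colorsys` module and skips low-saturation pixels (the white backdrop) and dark pixels (the black glove). It then averages the coordinates of the pixels that match each color. The names `HUE_RANGES`, `MIN_SATURATION`, `MIN_VALUE`, `classify`, and `fingertip_centroids`, along with all threshold values, are hypothetical placeholders that would need tuning against real camera frames. A pure-Python loop like this would also likely be too slow for real-time use on a Raspberry Pi, where a vectorized library such as OpenCV or NumPy would probably be needed.

```python
import colorsys
from typing import Dict, List, Optional, Tuple

RGB = Tuple[int, int, int]
Frame = List[List[RGB]]  # frame[y][x] = (r, g, b), each channel 0-255

# Hypothetical hue ranges in degrees for the painted fingertips.
# A range whose start is greater than its end wraps around 0/360 degrees.
HUE_RANGES: Dict[str, Tuple[float, float]] = {
    "red": (345.0, 15.0),
    "yellow": (45.0, 70.0),
    "green": (90.0, 150.0),
    "blue": (200.0, 250.0),
    "purple": (260.0, 300.0),
}

MIN_SATURATION = 0.5  # hypothetical: the white backdrop has near-zero saturation
MIN_VALUE = 0.3       # hypothetical: the black glove has low brightness


def classify(pixel: RGB) -> Optional[str]:
    """Return the fingertip color name for a pixel, or None if it matches none."""
    r, g, b = (c / 255.0 for c in pixel)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    if s < MIN_SATURATION or v < MIN_VALUE:
        return None  # backdrop, glove, or shadow
    hue = h * 360.0
    for name, (lo, hi) in HUE_RANGES.items():
        if lo <= hi:
            if lo <= hue <= hi:
                return name
        elif hue >= lo or hue <= hi:  # range wraps past 360 degrees
            return name
    return None


def fingertip_centroids(frame: Frame) -> Dict[str, Tuple[float, float]]:
    """Average the (x, y) coordinates of all pixels matching each fingertip color."""
    sums: Dict[str, List[float]] = {}
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            name = classify(pixel)
            if name is not None:
                acc = sums.setdefault(name, [0.0, 0.0, 0.0])  # [sum_x, sum_y, count]
                acc[0] += x
                acc[1] += y
                acc[2] += 1
    return {name: (sx / n, sy / n) for name, (sx, sy, n) in sums.items()}
```

Calling `fingertip_centroids(frame)` once per frame would yield one (x, y) point per visible fingertip color. Averaging many pixels per fingertip also smooths out some per-pixel noise.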

To achieve this, we plan to use an image processing program to analyze individual frames from the camera’s video feed and determine the coordinates of each of the user’s fingers. If we achieve this goal well before the deadline, we hope to begin work on a program that interprets human gestures from this data. Transforming the finger coordinates into gestures will require real-time extrapolation from changes in the x- and y-locations of each finger, while allowing for slight inaccuracies in the movement of the user's hand.
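To illustrate how changes in a finger's x- and y-locations might be turned into a gesture, here is a minimal sketch that detects a horizontal swipe of a single fingertip. It measures net displacement over a short sliding window rather than performing true extrapolation. The vertical tolerance `max_dy` is one simple way to allow for an unsteady hand. The class name `SwipeDetector` and the parameters `window`, `min_dx`, and `max_dy` (in pixels) are hypothetical, and their default values are untuned guesses.

```python
from collections import deque
from typing import Deque, Optional, Tuple

Point = Tuple[float, float]


class SwipeDetector:
    """Hypothetical left/right swipe detector for one fingertip.

    Keeps the most recent `window` positions and reports a swipe when the
    finger has moved at least `min_dx` pixels horizontally across that window
    while drifting no more than `max_dy` pixels vertically.
    """

    def __init__(self, window: int = 10, min_dx: float = 100.0, max_dy: float = 30.0) -> None:
        # deque(maxlen=...) drops the oldest position automatically, so slow
        # drift over a long period does not accumulate into a false swipe.
        self.history: Deque[Point] = deque(maxlen=window)
        self.min_dx = min_dx
        self.max_dy = max_dy

    def update(self, point: Optional[Point]) -> Optional[str]:
        """Feed one frame's fingertip position; return "left", "right", or None."""
        if point is None:  # fingertip not found in this frame
            return None
        self.history.append(point)
        if len(self.history) < 2:
            return None
        (x0, y0), (x1, y1) = self.history[0], self.history[-1]
        dx, dy = x1 - x0, y1 - y0
        if abs(dy) <= self.max_dy and abs(dx) >= self.min_dx:
            self.history.clear()  # avoid reporting the same swipe twice
            return "right" if dx > 0 else "left"
        return None
```

One detector per fingertip would be fed the per-frame coordinates. Larger windows tolerate slower swipes but react more slowly.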

Objective

Our goal in creating FingerSpark is to work towards eliminating the barriers to perfectly natural user control of electronics. We believe that our product will be an essential next step in developing three-dimensional operating systems, creating robots that can flawlessly mimic the fine motor skills of humans, and producing interactive augmented reality technologies.

At our end-of-semester demonstration, we will move a hand wearing the glove with colored fingertips in front of the camera while a monitor shows the location of each fingertip in two-dimensional space. Each fingertip will appear as a point on a uniformly colored background, updating in real time. For the demo, we will also develop a simple coloring app that lets the user “paint” different colors onscreen using our product.
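A coloring app of this kind could be as simple as stamping a small brush at each fingertip's position every frame. The sketch below assumes fingertip coordinates have already been scaled to canvas coordinates, and that each fingertip paints in a color chosen from a lookup table. `PaintCanvas`, `stamp`, `apply_frame`, `palette`, and `brush_radius` are all hypothetical names, and the canvas is a plain list of RGB rows rather than any particular GUI toolkit.

```python
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]


class PaintCanvas:
    """Hypothetical in-memory canvas for the demo's coloring app."""

    def __init__(self, width: int, height: int,
                 background: RGB = (255, 255, 255), brush_radius: int = 2) -> None:
        self.width = width
        self.height = height
        self.brush_radius = brush_radius
        # pixels[y][x] holds the color at that canvas position
        self.pixels: List[List[RGB]] = [[background] * width for _ in range(height)]

    def stamp(self, x: float, y: float, color: RGB) -> None:
        """Paint a filled circle of radius brush_radius centered on (x, y)."""
        cx, cy = round(x), round(y)
        r = self.brush_radius
        # Clamp the bounding box to the canvas so off-screen points are ignored.
        for py in range(max(0, cy - r), min(self.height, cy + r + 1)):
            for px in range(max(0, cx - r), min(self.width, cx + r + 1)):
                if (px - cx) ** 2 + (py - cy) ** 2 <= r * r:
                    self.pixels[py][px] = color

    def apply_frame(self, fingertips: Dict[str, Tuple[float, float]],
                    palette: Dict[str, RGB]) -> None:
        """Stamp every detected fingertip that has a color in the palette."""
        for name, (x, y) in fingertips.items():
            if name in palette:
                self.stamp(x, y, palette[name])
```

Because strokes are built only from per-frame stamps, fast hand movements would leave gaps between dots. Drawing a line segment between consecutive positions would be a natural refinement.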

Budget

- Raspberry Pi B+: $29.95 (Will likely use classroom kit)

- Raspberry Pi Camera Module: $24.99 (Need to purchase)

- Comfortable black gloves, 2 pairs: $7.89 x 2 = $15.78 (Need to purchase)

- Red Spray Paint: $3.98 (Need to purchase)

- Blue Spray Paint: $5.93 + $5.05 [shipping] = $10.98 (Need to purchase)

- Green Spray Paint: $3.98 (Need to purchase)

- Yellow Spray Paint: $3.98 (Need to purchase)

- Orange Spray Paint: $8.86 (Need to purchase)

- Purple Spray Paint: $3.98 (Need to purchase)

- Brown Spray Paint: $11.00 (Need to purchase)

- Tripod: $19.99 (Need to purchase)

- White Backdrop: $9.50 (Need to purchase)

TOTAL (items to purchase, excluding the Raspberry Pi B+): $117.02

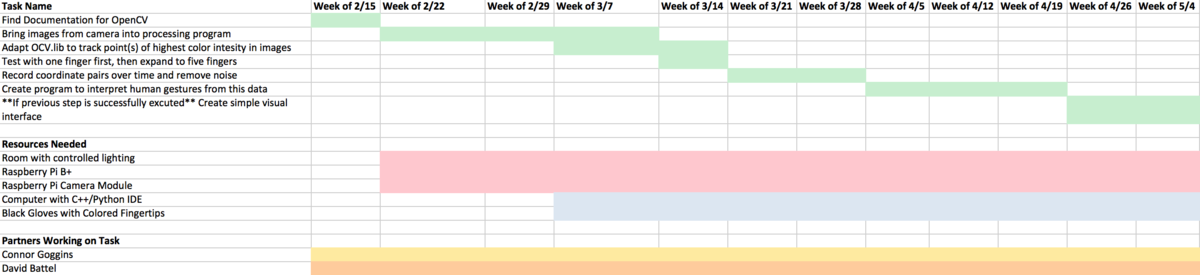

Gantt Chart