Difference between revisions of "Duckling"

m (Protected "Duckling" ([Edit=Allow only administrators] (indefinite) [Move=Allow only administrators] (indefinite))) |

|||

| (68 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

== Link to Log == | == Link to Log == | ||

| − | |||

| + | [[Duckling_log|Weekly log]] | ||

| + | |||

| + | = Project Proposal = | ||

== Overview == | == Overview == | ||

| − | + | There once was a time when children's toy cars would be bland and boring. A child might push the car and chase it, or use her own remote controller to move the car around, but no real fun was to had when the car couldn't move freely! | |

| + | We wish to fix this lack of fun by designing an entirely new kind of car-the kind of car that chases you! By integrating remote-controlled car, raspberry pi, and arduino, we plan to design a pi car so that its sensors (through the Pi camera) may evaluate a person's movement and follow them. The arduino will direct the car by communicating with the motor, servo and ESC . All components will be mounted on top of the car to create one autonomous system that needs no outside influence, besides a person's movements, to direct its path. | ||

== Team Members == | == Team Members == | ||

| − | |||

| − | |||

Tushar Menon | Tushar Menon | ||

| Line 21: | Line 22: | ||

2. The pi car is able to sense a red dot (or other comparable reference point) and move towards it | 2. The pi car is able to sense a red dot (or other comparable reference point) and move towards it | ||

| − | 3. The raspberry pi successfully connects to | + | 3. The raspberry pi successfully connects to a pi camera |

4. The sensors can differentiate between the movements of a target person or object and other movements. | 4. The sensors can differentiate between the movements of a target person or object and other movements. | ||

| − | 5. The duckling pi car will move according to information from the | + | 5. The duckling pi car will move according to information from the camera |

| + | |||

| + | 6. The duckling pi car will use the camera to follow a person | ||

| + | |||

| + | == Presentation == | ||

| − | + | [https://docs.google.com/presentation/d/1CCfXd_s2eQlEuH7pxQ5VXRTi_YC4t6u7IGGgBqWmDRQ/edit?usp=sharing click here for slides] | |

== Challenges == | == Challenges == | ||

| − | We expect our challenges to include: | + | We expect our challenges to include: |

| + | |||

| + | 1. Completing our project under budget | ||

| + | |||

| + | 2. Learning how to use the raspberry pi and arduino | ||

| + | |||

| + | 3. Interfacing the pi and arduino with one another | ||

| + | |||

| + | 4. 3-D printing. No members have experience. | ||

| + | |||

| + | 5. Getting the camera to communicate with the raspberry pi | ||

| + | |||

| + | 6. Working with the PI camera image processing to sense a person | ||

| − | + | 7. Difficulties with hardware assembly | |

| − | + | ||

| − | user safety | + | We also expect that we may have difficulty working on the project independently if we do not all have access to the pi car with the arduino and raspberry pi mounted on top. We plan to leave it in the lab in order to protect our project and promote inclusivity in access. We have no concerns regarding privacy, user safety, or security from malicious attacks. |

| − | security from malicious attacks | ||

| − | |||

| − | |||

== Gantt Chart == | == Gantt Chart == | ||

| − | + | [[File:Ganttduckling.png]] | |

| + | |||

== Budget == | == Budget == | ||

| − | |||

| − | |||

| − | + | Dromida 1/18 Buggy($99) | |

| + | |||

| + | ISC25 Rotary Encoder($40) | ||

| + | |||

| + | 32GB MicroSD Card($13) | ||

| + | |||

| + | IMU 9DoF Sensor Stick($15) | ||

| + | |||

| + | Raspberry Pi Camera Module V2($30) | ||

| + | |||

| + | Brushed ESC Motor Speed Controller($6) | ||

| + | |||

| + | TowerPro SG90 Micro Servo($4) | ||

| + | |||

| + | TFMini - Micro LiDAR Module($40) | ||

| + | |||

| + | Arduino (free from lab) | ||

| + | |||

| + | Raspberry Pi (free) | ||

| + | |||

| + | |||

| + | Total: $247 | ||

| + | |||

| + | Supplies purchased according to Pi Car documentation <ref>Pi Car documentation - [https://picar.readthedocs.io/en/latest/chapters/usage/mechanical.html#design Bulletin Link]</ref> | ||

| + | |||

| + | = Design and Solutions = | ||

| + | ==Building the car== | ||

| + | We bought a Dromida buggy and dismantled it to create our chassis for the car. We took out its batteries and motor, and replaced them with our own battery and motor. We connected the motor to an electronic speed controller, and also mounted a rotary encoder onto the chassis by drilling and dremeling through it. This forms Layer 0 of the car. | ||

| + | We created a GND and 5V channel using breadboard and used spacers to mount the raspberry Pi and Arduino onto the first printed layer and then mounted that layer onto the car. | ||

| + | We would have mounted the Lidar module onto the second layer, but we decided against its functionality to meet our objectives. | ||

| + | A more detailed overview of the mechanical assembly can be found at [https://picar.readthedocs.io/en/latest/chapters/usage/mechanical.html the Pi car documentation] | ||

| + | We faced several difficulties with gear meshing for both the rotary encoder and the motor gear. We Macgyvered 'quick fix' solutions to both issues but the problem actually turned out to be with the cheap plastic spur gear that came with the buggy. A future build of the car could potentially use a metal spur gear. Furthermore, we had issues with the steering wherein we couldn't fix pins onto our chassis to hold parts that connected to the wheels. We improvised a solution to that by using Alan wrenches as pins instead. Our turnigy 1A battery also dipped below 30% and it turns out they cannot be recharged if they cross that threshold. This was on the day before demo so we were panicking because we couldn't find another 1A battery at a store nearby, but we tried using a 1.5 A battery instead and it worked. There were also several instances where we tried testing and the entire car just fell apart, so we had to rebuild it a few times. | ||

| + | |||

| + | == ESC, motor, encoder and servo connection== | ||

| + | This is how the components were connected. (Credit to Zimon from the other Pi car group for the picture) | ||

| + | |||

| + | [[File:zimon.png | frameless | 300px | center]] | ||

| + | |||

| + | == Pi and Arduino connection== | ||

| + | |||

| + | We initially planned to implement a serial port between the Pi and Arduino, but decided to use I2C communication instead, using the code on this website <ref>I2C connection for Pi and Arduino - [https://oscarliang.com/raspberry-pi-arduino-connected-i2c/ Bulletin Link]</ref>, wiring them as follows | ||

| + | [[File:I2C.jpg|200px|left]] | ||

| + | |||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | |||

| + | ==Code== | ||

| + | |||

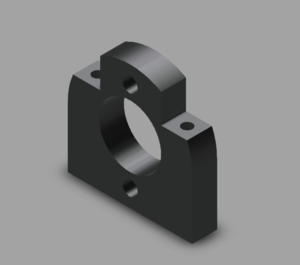

| + | [[File:Encoder.png|300px|thumb|left|Encoder mount]] | ||

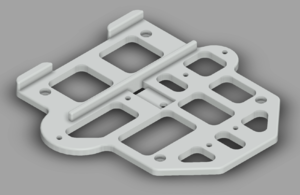

| + | [[File:Firstlayer.png|300px|thumb|left|Printed first layer]] | ||

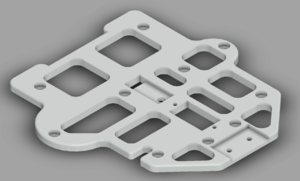

| + | [[File:Secondlayer.png|300px|thumb|left|Printed second layer]] | ||

| + | |||

| + | |||

| + | https://github.com/Matheus-camacho/ese205 | ||

| + | |||

| + | How the code works (pseudo code): | ||

| + | 1. Initialize video stream and PiCamera | ||

| + | 2. Read if the Arduino sends something | ||

| + | 3. Initialize openCV tracker with the desired properties | ||

| + | 4. Draw the tracking circle | ||

| + | While there is something to track | ||

| + | Update tracker position | ||

| + | Save tracker position | ||

| + | Convert position to angle | ||

| + | Send angle and speed to Arduino | ||

| + | |||

| + | 3D printed parts (Credit to Adith Boloor): | ||

| + | Encoder Mount: https://a360.co/2DUNNK6 | ||

| + | |||

| + | First Layer: https://a360.co/2RquoDs | ||

| + | |||

| + | Second Layer: https://a360.co/2OBMGmP | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | |||

| + | =Results= | ||

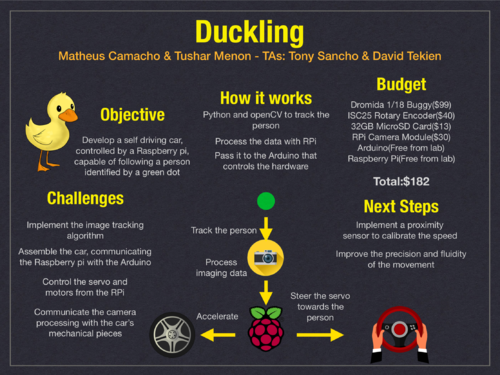

| + | ==Poster== | ||

| + | [[File:Ducklingposter.png|500px|thumb|left|Our poster for the demo session]] | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/><br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | |||

| + | <br/> | ||

| + | |||

| + | <br/> | ||

| + | |||

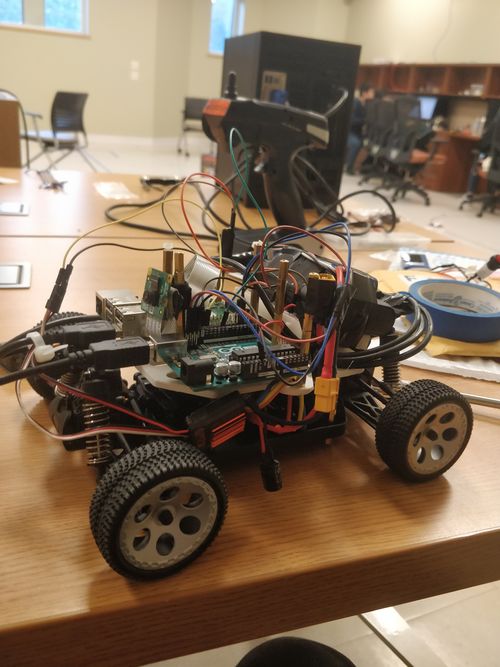

| + | ==Final build== | ||

| + | Here is what our final build of the car looks like | ||

| + | The motor and rotary encoder are on the chassis, and the the 3D printed first layer covers them. The Pi and Arduino and breadboard rest on the 3D printed first layer, as well as the powerbank for the Pi and the battery for the car. The wires are ziptied together and the ESC just dangles amidst the wires. | ||

| + | [[File:Tushar2.jpg|500px|thumb|left|Duckling]] | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | |||

| + | <br/> | ||

| + | |||

| + | <br/> | ||

| + | |||

| + | <br/> | ||

| + | |||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | |||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | <br/> | ||

| + | |||

| + | ==Demonstration== | ||

| − | + | [[File:Carr.mp4]] | |

| + | <br/> | ||

| + | A demonstration of the car following a red object | ||

| + | <br/> | ||

| + | As is evident, the car can successfully track the colours we teach it to recognise. The camera works well under different lighting conditions as the range of HSV values we taught it to recognise is wide. The speed of the car is constant and is controlled from a rpm variable in the source code itself. The motor gear doesn't mesh with the spur gear at lower speeds so we had to set the rpms constant at a relatively high value. | ||

| + | <br/> | ||

| + | <br/> | ||

| − | + | ==Discussion== | |

| + | Our car had a successful demo, as outlined by our initial objectives. It moves smoothly and follows objects as intended. The only difference is that we chose not to use the kinect but that didn't change the functionality of the car. The reason for that is that a kinect is expensive and its hard to obtain a second hand one. Furthermore it's hard to interface the kinect with the Raspberry Pi, so we chose to use to use a Pi camera instead. We also could not add depth mapping functionality (we intended to use a lidar module in place of the kinect) because getting the motor and encoder to work was a lot harder than we foresaw. | ||

| + | Next steps: Implementing depth mapping in order to calibrate the speed of the car. It currently moves at a constant speed but if it had the lidar module on it to feed the RPi distances then we could control the speed of the car. We could make that functionality open to the user as well instead of having the car "smart drive" and decide for itself how fast it should go. | ||

| + | == References== | ||

[[Category:Projects]] | [[Category:Projects]] | ||

[[Category:Fall 2018 Projects]] | [[Category:Fall 2018 Projects]] | ||

Latest revision as of 15:55, 17 December 2018

Link to Log

Project Proposal

Overview

There once was a time when children's toy cars were bland and boring. A child might push the car and chase it, or use her own remote controller to move the car around, but no real fun was to be had when the car couldn't move freely!

We wish to fix this lack of fun by designing an entirely new kind of car: the kind of car that chases you! By integrating a remote-controlled car, a Raspberry Pi, and an Arduino, we plan to design a Pi car whose sensor (the Pi camera) can evaluate a person's movement and follow them. The Arduino will direct the car by communicating with the motor, servo and ESC. All components will be mounted on top of the car to create one autonomous system that needs no outside influence, besides a person's movements, to direct its path.

Team Members

Tushar Menon

Matheus Camacho

Tony Sancho (TA)

Objectives

The Duckling project will be deemed a success if:

1. The Duckling Pi car is constructed successfully according to the reference guide

2. The Pi car is able to sense a red dot (or other comparable reference point) and move towards it

3. The Raspberry Pi successfully connects to a Pi camera

4. The sensors can differentiate between the movements of a target person or object and other movements

5. The Duckling Pi car moves according to information from the camera

6. The Duckling Pi car uses the camera to follow a person

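Presentation

Slides: https://docs.google.com/presentation/d/1CCfXd_s2eQlEuH7pxQ5VXRTi_YC4t6u7IGGgBqWmDRQ/edit?usp=sharing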

Challenges

We expect our challenges to include:

1. Completing our project under budget

2. Learning how to use the Raspberry Pi and Arduino

3. Interfacing the Pi and Arduino with one another

4. 3-D printing, in which no team member has experience

5. Getting the camera to communicate with the Raspberry Pi

6. Using image processing on the Pi camera feed to sense a person

7. Difficulties with hardware assembly

We also expect that we may have difficulty working on the project independently if we do not all have access to the Pi car with the Arduino and Raspberry Pi mounted on top. We plan to leave it in the lab in order to protect our project and promote inclusivity in access. We have no concerns regarding privacy, user safety, or security from malicious attacks.

Gantt Chart

Budget

Dromida 1/18 Buggy ($99)

ISC25 Rotary Encoder ($40)

32GB MicroSD Card ($13)

IMU 9DoF Sensor Stick ($15)

Raspberry Pi Camera Module V2 ($30)

Brushed ESC Motor Speed Controller ($6)

TowerPro SG90 Micro Servo ($4)

TFMini - Micro LiDAR Module ($40)

Arduino (free from lab)

Raspberry Pi (free)

Total: $247

Supplies were purchased according to the Pi Car documentation [1].

Design and Solutions

Building the car

We bought a Dromida buggy and dismantled it to create the chassis for our car. We took out its batteries and motor and replaced them with our own battery and motor. We connected the motor to an electronic speed controller (ESC), and mounted a rotary encoder onto the chassis by drilling and dremeling through it. This forms Layer 0 of the car.

We created a GND and 5V channel using a breadboard, used spacers to mount the Raspberry Pi and Arduino onto the first printed layer, and then mounted that layer onto the car.

We would have mounted the Lidar module onto the second layer, but we decided that we did not need its functionality to meet our objectives.

A more detailed overview of the mechanical assembly can be found in the Pi car documentation (https://picar.readthedocs.io/en/latest/chapters/usage/mechanical.html).

We faced several difficulties with gear meshing for both the rotary encoder and the motor gear. We MacGyvered 'quick fix' solutions to both issues, but the problem actually turned out to be the cheap plastic spur gear that came with the buggy. A future build of the car could potentially use a metal spur gear. Furthermore, we had issues with the steering: we couldn't fix pins onto our chassis to hold the parts that connect to the wheels, so we improvised by using Allen wrenches as pins instead. Our Turnigy 1 A battery also dipped below 30% charge, and it turns out these batteries cannot be recharged once they cross that threshold. This happened the day before the demo, and we panicked because we couldn't find another 1 A battery at a nearby store, but a 1.5 A battery worked in its place. There were also several instances where the entire car fell apart during testing, so we had to rebuild it a few times.

ESC, motor, encoder and servo connection

This is how the components were connected. (Credit to Zimon from the other Pi car group for the picture)

Pi and Arduino connection

We initially planned to connect the Pi and Arduino over a serial port, but decided to use I2C communication instead, using the code on this website [2]. The wiring is shown in the accompanying figure (I2C.jpg).
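To make the Pi-to-Arduino link more concrete, here is a minimal sketch of how a steering angle and a speed could be packed into bytes and written over I2C from the Pi. It is not the project's actual code: the address `0x04`, the register `0x00`, the two-byte message layout, and the function names are all assumptions chosen for illustration, and the `smbus2` package is assumed to be installed on the Pi.

```python
from typing import List

ARDUINO_ADDRESS = 0x04   # assumed I2C address configured in the Arduino sketch
COMMAND_REGISTER = 0x00  # hypothetical register byte; the Arduino may ignore it


def encode_command(angle: int, speed: int) -> List[int]:
    """Pack a steering angle (0-180) and a speed (0-255) into two bytes."""
    if not 0 <= angle <= 180:
        raise ValueError("angle must be between 0 and 180 degrees")
    if not 0 <= speed <= 255:
        raise ValueError("speed must fit in one byte (0-255)")
    return [angle, speed]


def send_command(angle: int, speed: int, bus_number: int = 1) -> None:
    """Write the encoded command to the Arduino over I2C (needs real hardware)."""
    # Imported here so encode_command can be tried on a machine without smbus2.
    from smbus2 import SMBus

    with SMBus(bus_number) as bus:
        bus.write_i2c_block_data(
            ARDUINO_ADDRESS, COMMAND_REGISTER, encode_command(angle, speed)
        )
```

Keeping the encoding separate from the bus write means the message format can be checked on a laptop before the Pi and Arduino are wired together.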

Code

https://github.com/Matheus-camacho/ese205

How the code works (pseudocode):

1. Initialize the video stream and PiCamera

2. Check whether the Arduino has sent anything

3. Initialize the OpenCV tracker with the desired properties

4. Draw the tracking circle

5. While there is something to track:

    a. Update the tracker position

    b. Save the tracker position

    c. Convert the position to an angle

    d. Send the angle and speed to the Arduino
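The "convert position to angle" and "send" steps of the loop above can be sketched as follows. This is an illustrative approximation rather than the code in the repository: the frame width, field of view, servo centre, steering limit, default speed, and the `send` callback are all assumed values and names.

```python
FRAME_WIDTH = 640          # assumed frame width in pixels
HORIZONTAL_FOV_DEG = 62.2  # assumed horizontal field of view of the camera
SERVO_CENTER_DEG = 90      # assumed servo angle for driving straight ahead
SERVO_LIMIT_DEG = 30       # assumed maximum steering deflection either way


def position_to_angle(x_pixel: float) -> int:
    """Map the tracked object's x coordinate to a servo angle in degrees."""
    # Offset from the image centre, normalised to the range [-1, 1].
    offset = (x_pixel - FRAME_WIDTH / 2) / (FRAME_WIDTH / 2)
    # Linear approximation: a full offset corresponds to half the field of view.
    bearing = offset * HORIZONTAL_FOV_DEG / 2
    # Clamp so the servo is never asked to turn past its mechanical limit.
    bearing = max(-SERVO_LIMIT_DEG, min(SERVO_LIMIT_DEG, bearing))
    return round(SERVO_CENTER_DEG + bearing)


def follow(positions, send, speed=150):
    """Send one (angle, speed) command per tracked position until the target is lost."""
    for x_pixel in positions:
        if x_pixel is None:  # the tracker reported nothing to follow
            break
        send(position_to_angle(x_pixel), speed)
```

In a real run, `positions` would come from the tracker on each frame and `send` would write to the Arduino; passing a plain list and a function that records its arguments is enough to try the logic without hardware.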

3D printed parts (Credit to Adith Boloor):

Encoder Mount: https://a360.co/2DUNNK6

First Layer: https://a360.co/2RquoDs

Second Layer: https://a360.co/2OBMGmP

Results

Poster

Final build

Here is what our final build of the car looks like. The motor and rotary encoder are on the chassis, and the 3D printed first layer covers them. The Pi, Arduino and breadboard rest on the 3D printed first layer, along with the power bank for the Pi and the battery for the car. The wires are zip-tied together and the ESC simply hangs among the wires.

Demonstration

A demonstration of the car following a red object

As is evident, the car can successfully track the colours we teach it to recognise. The camera works well under different lighting conditions because the range of HSV values we taught it to recognise is wide. The speed of the car is constant and is set by an rpm variable in the source code itself. The motor gear doesn't mesh with the spur gear at lower speeds, so we had to fix the rpm at a relatively high value.
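As a rough illustration of why a wide HSV range tolerates lighting changes, the sketch below classifies pixels as "red" by hue, saturation and value, then averages the positions of the matching pixels. It uses only the Python standard library rather than OpenCV, and the thresholds and function names are assumptions, not the values used in the project.

```python
import colorsys

HUE_TOLERANCE_DEG = 20  # assumed: accept hues within 20 degrees of pure red
MIN_SATURATION = 0.5    # assumed: reject washed-out (greyish) pixels
MIN_VALUE = 0.3         # assumed: a low floor keeps dimly lit red pixels


def is_red(r: int, g: int, b: int) -> bool:
    """Return True if an 8-bit RGB pixel falls inside the assumed red HSV range."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    hue_deg = h * 360
    # Red sits at hue 0, so the accepted range wraps around 360 degrees.
    near_red = hue_deg <= HUE_TOLERANCE_DEG or hue_deg >= 360 - HUE_TOLERANCE_DEG
    return near_red and s >= MIN_SATURATION and v >= MIN_VALUE


def red_centroid(image):
    """Return the mean (x, y) of red pixels in rows of (r, g, b) tuples, or None."""
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            if is_red(*pixel):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return sum(xs) / len(xs), sum(ys) / len(ys)
```

Because the value threshold is low, a bright red pixel and a much darker one are both accepted, which is the effect a wide HSV range has under changing lighting.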

Discussion

Our car had a successful demo, as measured against our initial objectives. It moves smoothly and follows objects as intended. The only difference is that we chose not to use the Kinect, but that didn't change the functionality of the car. A Kinect is expensive, it's hard to obtain a second-hand one, and it's hard to interface with the Raspberry Pi, so we chose to use a Pi camera instead. We also could not add depth mapping functionality (we intended to use a lidar module in place of the Kinect) because getting the motor and encoder to work was a lot harder than we foresaw.

Next steps: Implementing depth mapping in order to calibrate the speed of the car. It currently moves at a constant speed, but if it had the lidar module on it to feed distances to the RPi, we could control the speed of the car. We could also open that functionality to the user instead of having the car "smart drive" and decide for itself how fast it should go.
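One possible shape for that speed control is a simple proportional mapping from measured distance to rpm, sketched below. Nothing here was built or tested on the car: the distances, rpm limits and function name are hypothetical, and the lower rpm bound only stands in for the point below which the gears stopped meshing.

```python
MIN_RPM = 120                 # hypothetical lowest rpm at which the gears still mesh
MAX_RPM = 300                 # hypothetical top speed
STOP_DISTANCE_CM = 50         # hypothetical: closer than this, the car stops
FULL_SPEED_DISTANCE_CM = 300  # hypothetical: beyond this, the car runs at MAX_RPM


def speed_from_distance(distance_cm: float) -> int:
    """Map a lidar distance reading to a target rpm."""
    if distance_cm <= STOP_DISTANCE_CM:
        return 0  # target is close enough, so stop rather than crawl
    # Fraction of the way from the stop distance to the full-speed distance.
    fraction = (distance_cm - STOP_DISTANCE_CM) / (
        FULL_SPEED_DISTANCE_CM - STOP_DISTANCE_CM
    )
    fraction = min(1.0, fraction)
    return round(MIN_RPM + fraction * (MAX_RPM - MIN_RPM))
```

Jumping straight from 0 to `MIN_RPM` avoids commanding the low speeds at which the motor gear failed to mesh with the spur gear.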

References

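1. Pi Car documentation: https://picar.readthedocs.io/en/latest/chapters/usage/mechanical.html#design

2. I2C connection for Pi and Arduino: https://oscarliang.com/raspberry-pi-arduino-connected-i2c/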