A Survey of Robotics Systems and Performance Analysis

Abstract

The purpose of this paper is to present a survey of general robotic systems and performance analysis. This paper will also present ideas and research from three related fields within robotics: assistive robotics, human robot interaction, and autonomous robotics. In each of these cases the information provided is intended to help with future research by providing the taxonomy and concepts of that field. The most important idea this paper tries to convey is to be aware of all aspects of a system before studying it, and to avoid analysis errors resulting from tunnel vision.

Keywords:

Robotics, Human Robot Interaction, Assistive Robotics, Real-time robotic systems, multi-robot systems, Unmanned Aerial Vehicle, Autonomous Robotics, Performance Analysis, Metrics, Modeling, Simulation, Benchmarking

1. Introduction

Robotics is a diverse area of study with applications in numerous fields and aspects of society. Properly designed robotic systems that take into account how they benefit human users make use of multiple methods of evaluation. For the purpose of this paper, a robotic system will be defined as a mechanical system controlled by embedded or other computer systems with the purpose of simplifying human tasks. As this is a survey paper, topics will be discussed at a high level to provide insight for further research. This paper will also discuss the distinctions between several fields within robotics. The first section will cover assistive robotics, followed by human robot interaction, autonomous robotics, real-time robotic systems, and evaluation pitfalls.

2. Assistive Robotics

Assistive robotics is an area of robotics that deals with utilizing robots as tools rather than as task-based autonomous systems. The use of robotics as an assistive tool requires a special set of metrics rather than the sole use of the conventional task completion time metric. While assistive robotics covers multiple areas where robots are utilized as tools, here the focus will be on medical tasks. In the case of rehabilitation, friendliness, ease of operation, and effectiveness of the input device are more suitable metrics for producing useful results [Tsui08]. The remainder of this section will touch on the development of assistive robotics, discuss clinical applications, and finally review the progress in establishing standard benchmarks.

2.1 Technology Development Challenges

This section will focus on the creation of new devices and their usability. One of the difficulties of testing systems in assistive robotics is the low number of trials: each trial provides good insight, but the number of trials is limited by human fatigue. Running thousands of trials is neither practical nor cost effective. The time involved would also prevent the data collected up to that point from being used to analyze the system and improve performance, and thus quality of life. For example, in the development of a prosthetic arm, typical metrics were taken into account, such as time to complete a task and accuracy of the task. Beyond these metrics, researchers also monitored blood oxygen levels and carbon dioxide production. This allows an early stage device to be analyzed and compared to its biological counterpart. Since the goal of assistive robots is to make tasks easier, a failure to perform at least on par with natural systems means the design must be revisited. The time frame involved is also a critical reason to choose the observed factors carefully. In the case of prosthetic limbs and stroke rehabilitation, testing periods are on the order of six months. With such long time frames, including useless factors wastes both time and money while providing little future benefit to patients [Tsui09].

2.2 Clinical Application

This section discusses how assistive technology is applied to a given end-user population. At this stage, assistive robotics are being used on a daily basis, and analysis can be conducted to gauge where future development efforts are needed. When evaluating the mental effort used to operate an assistive robotic system, the Functional Independence Measure (FIM), Rating Scale for Mental Efforts (RSME), and Standardized Mini-Mental State Examination are commonly used, but other measures do exist [Tsui08]. These scales help provide some level of quantitative data regarding the performance of assistive robotic systems as perceived by users. The reliance on such user-centered scales demonstrates the difficulty of generalizing robotics to a single evaluation and analysis technique, which will be discussed later.

2.3 Benchmarks and Evaluation

Evaluating the performance of assistive robotics can be difficult with the human element present. As proposed by Feil-Seifer et al. [Feil07] and shown in Table 1, a set of benchmark criteria does exist. While it may not be conclusive, it does help future researchers in the field by making them aware of the human element of the system. The derivation of the table stems from a Stanford University experiment, which included questionnaires for patients to gather non-quantitative data such as usefulness and ease of use of the ProVAR system.

Table 1. Proposed Benchmarks for the Field of Assistive Robotics

| Robotic Technology | Social Interaction | Assistive Technology |
| --- | --- | --- |
| Safety | Autonomy | Impact on User's Care |
| Scalability | Imitation | Impact on Care Givers |
| | Privacy | Impact on User's Life |
| | Understanding of Domain | |
| | Social Success | |

3. Human Robotic Interaction

The interaction between human and robotic systems permeates all robotic systems to some level. The three main levels discussed in this section include: full human, partial human, and humans as observers. Assistive robotics, in the previous section, ties into full and partial human interaction, while autonomous robotics in section four primarily focuses on humans as observers. A key point to keep in mind for this section is how each element affects trust of the system [Freedy07]. While the robotic system on its own may be capable of high throughput rates, if the system is not utilized the throughput falls to zero. The remainder of this section will discuss data acquisition and how this applies to single and multi robot systems.

3.1 Data Acquisition via Sensors

Data acquisition is a vital part of human robot interaction. Since the robot is not assistive in the sense of Section 2, but rather an extension of the user, sensory input allows the user to make decisions based on remote data. For this section, two types of perception will be discussed: passive perception and active perception, as described in [Freedy07]. Passive perception refers to the interpretation of received sensor data from individual sensors acting separately. Active perception, on the other hand, refers to the use of multiple sensors to remove ambiguity from a single sensor reading. This would come into play if, for example, a robot became inverted while traversing rough terrain. Using multiple sensors adds complexity to the system, and researchers should be mindful of this when laying out the metrics and goals of the system. Metrics used to evaluate a single sensor as its own system may no longer be as relevant once that sensor interacts with other sensors. More general objective metrics may need to be used, such as the number of false positives the robot generates when reporting that it is in trouble and needs intervention.
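As a rough illustration of this distinction, the sketch below contrasts a passive check that interprets one sensor on its own with an active check that uses a second sensor to disambiguate the first, and then counts false positives against ground truth. The sensor fields (`accel_z`, `wheel_contact`), the sign convention, and the sample log are hypothetical and are not taken from [Freedy07].

```python
from dataclasses import dataclass


@dataclass
class Reading:
    accel_z: float       # vertical acceleration in g; about +1.0 when upright (assumed convention)
    wheel_contact: bool  # True when the wheels report ground contact (hypothetical sensor)
    inverted: bool       # ground truth, known only to the experimenter


def passive_inverted(r):
    """Passive perception: interpret a single sensor on its own."""
    return r.accel_z < 0.0


def active_inverted(r):
    """Active perception: use a second sensor to disambiguate the first."""
    return r.accel_z < 0.0 and not r.wheel_contact


def false_positives(readings, detector):
    """Count alarms raised while the robot was not actually inverted."""
    return sum(1 for r in readings if detector(r) and not r.inverted)


# Hypothetical log: the second reading is a bump that briefly drives accel_z negative.
log = [
    Reading(accel_z=1.0, wheel_contact=True, inverted=False),
    Reading(accel_z=-0.3, wheel_contact=True, inverted=False),
    Reading(accel_z=-1.0, wheel_contact=False, inverted=True),
]

print(false_positives(log, passive_inverted))  # 1
print(false_positives(log, active_inverted))   # 0
```

In this made-up log the single-sensor check raises one false alarm on the bump, while the cross-checked version raises none. The price is the added complexity noted above: the metric now describes the sensor combination rather than either sensor alone.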

3.2 Multi-operator single-robot

In this section the focus will rest on robotic systems requiring more than one human operator. Typical examples are unmanned aerial vehicles and other unmanned craft. A distinction should be made here from autonomous systems, which would only require human input for the initial tasks.

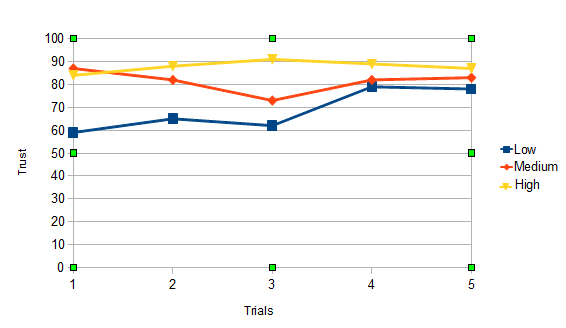

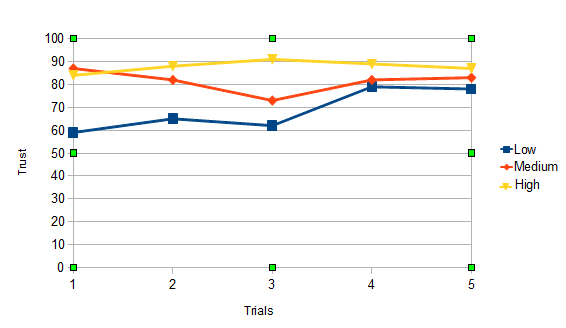

Experimental results from a system involving an unmanned aerial vehicle and an unmanned ground vehicle display the correlation between robotic system competency and trust [Freedy07]. Over a series of trials, user trust generally increases as users become familiar with the system. Figure 3.1 also implies a psychological connection to the robotic side of the system, in that trust diminishes as the system becomes a hindrance. The experimenters also found that bias was present in the results, which shows thought was put into a thorough analysis. The bias they came across stemmed from the first experience with the system. If the experience was a positive one, the user would be more tolerant of faults for a greater number of trials, and less tolerant if it was a negative one. This further illustrates the importance of documenting how analysis is carried out, as experienced users of the system have higher trust than new users, and failing to acknowledge this leads to skewed results.

Figure 3.1: Trust vs Trials with respect to low, medium, and high competency

3.3 Single operator multi-robot

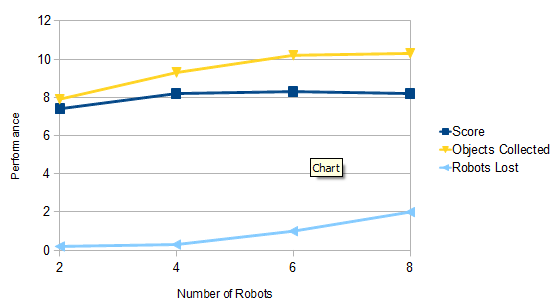

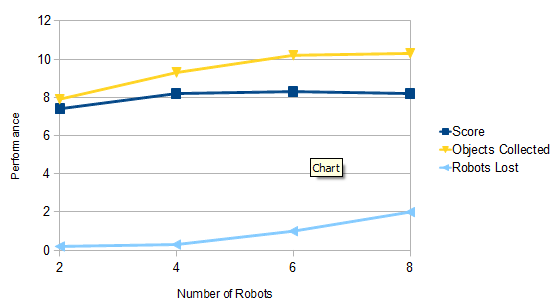

Since 2006, work has been done to develop a set of metric classes for single operator multi-robot systems. The complexity of human robot interaction prevents a single ubiquitous class. As presented by Crandall and Cummings [Crandall07], three criteria are important when choosing metrics. The first is the ability to identify the limits of both the human and robot aspects of the system. Neglecting either would give an incomplete picture of performance. The second criterion is predictive power. It is too costly to evaluate a full factorial design, in which every combination of environment and number of robots in the system is considered. Thus, the ability to predict the performance of a system with some level of confidence is very valuable. The third criterion ties this section together, as it focuses on listing key performance factors. Within all areas of human robot interaction, knowing what is being analyzed is critical to obtaining a clear picture of the effectiveness of the system. Figure 3.2 also indicates that increasing the number of robots in the system, or fanning out, increases the number of robots lost. Here, lost is defined as robots left in the maze when time expired for the experiment. Some level of communication between robots can be implemented to marginally increase performance; however, the real bottleneck is the human operator and their ability to attend to each robot in a manner that maximizes performance for the task under analysis.

Figure 3.2: Performance as robot team size increases
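To make the cost of a full factorial design concrete, the following sketch enumerates every combination of a few experimental factors. The factor names and levels are hypothetical and are not the conditions used in [Crandall07]; the point is only that the number of runs is the product of the number of levels of each factor.

```python
from itertools import product


def full_factorial(factors):
    """Return every combination of factor levels as a list of dicts."""
    names = list(factors)
    return [
        dict(zip(names, levels))
        for levels in product(*(factors[name] for name in names))
    ]


# Hypothetical factors and levels, chosen only for illustration.
factors = {
    "team_size": [2, 4, 6, 8],
    "environment": ["maze_a", "maze_b", "maze_c"],
    "operator": ["novice", "expert"],
}

runs = full_factorial(factors)
print(len(runs))  # 24, i.e. 4 * 3 * 2
print(runs[0])    # {'team_size': 2, 'environment': 'maze_a', 'operator': 'novice'}
```

Each additional factor multiplies the run count, and every run involves a human operator, which is why a metric with predictive power is preferable to exhaustively testing each condition.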

Looking at the levels of interaction with robots presented in this section, a key point is accurately defining the level of human involvement in the system to prevent overwhelming the user. While this section focused on the interaction between humans and robots, the next section will shift focus to removing much of the human element from the system, creating a more autonomous robotic system.

4. Autonomous Robotics

The focus of this section is to illustrate the development of experimental design in autonomous robotics, and its use in current systems. Comparatively speaking, the standards of experimental methodologies have not made the same advancements as other fields, but recent efforts have been made to use simulation to rectify this [Amigoni10]. For the purpose of this paper, autonomous robotics will be defined as the use of robots in unpredictable environments without continual human intervention.

4.1 Autonomous Robotics Methodologies

Simulation, according to Amigoni and Schiaffonati, has become an acceptable alternative to experimenting with actual robots, and is more cost efficient in early stage development. While autonomous robotics is not held to the current rigid standard of commercial robotics, the effort to create stringent methodologies is a step in that direction. The success of simulation in the early stages of development also depends on careful consideration of where errors could potentially enter the analysis. For example, failing to incorporate adequate knowledge of real world characteristics ultimately leads to difficulties when the system moves beyond simulation into the real world. Accounting for the attributes of the real world arena the system will perform in still falls short of the whole picture, as noise present in the real world must also be dealt with during modeling and simulation. With this in mind, the simulation results should be validated against real world performance to isolate any discrepancies in the data [Amigoni10].
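One simple way to carry out the validation step described above is to compare paired simulated and measured values and flag the trials where they disagree. The sketch below is only an illustration under assumed data: the task completion times and the one-second tolerance are hypothetical, and root-mean-square error is one possible summary among many rather than a method prescribed by [Amigoni10].

```python
import math


def rmse(simulated, measured):
    """Root-mean-square error between paired simulated and measured values."""
    if len(simulated) != len(measured) or not simulated:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(s - m) ** 2 for s, m in zip(simulated, measured)]
    return math.sqrt(sum(squared) / len(squared))


def flag_discrepancies(simulated, measured, tolerance):
    """Return the indices of trials where simulation and reality differ by more than tolerance."""
    return [
        i
        for i, (s, m) in enumerate(zip(simulated, measured))
        if abs(s - m) > tolerance
    ]


# Hypothetical task completion times in seconds for four trials.
sim_times = [10.0, 12.0, 15.0, 20.0]
real_times = [10.5, 12.5, 19.0, 20.5]

print(f"RMSE: {rmse(sim_times, real_times):.2f} s")
print(flag_discrepancies(sim_times, real_times, tolerance=1.0))  # [2]
```

A flagged trial such as the third one here would be the place to look for a real world characteristic or noise source that the simulation does not model.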

4.2 Current Simulation Systems

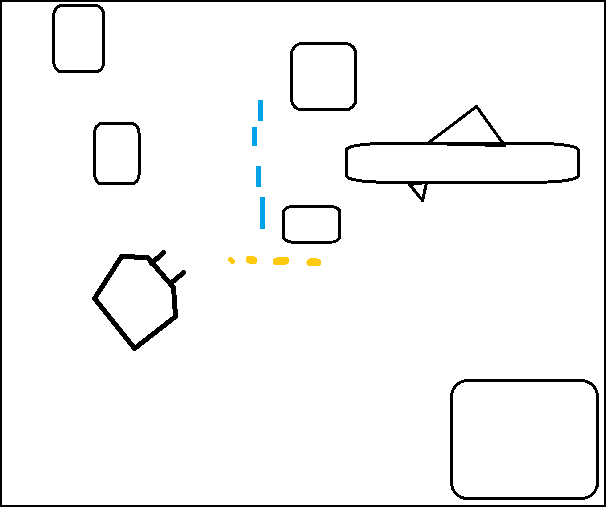

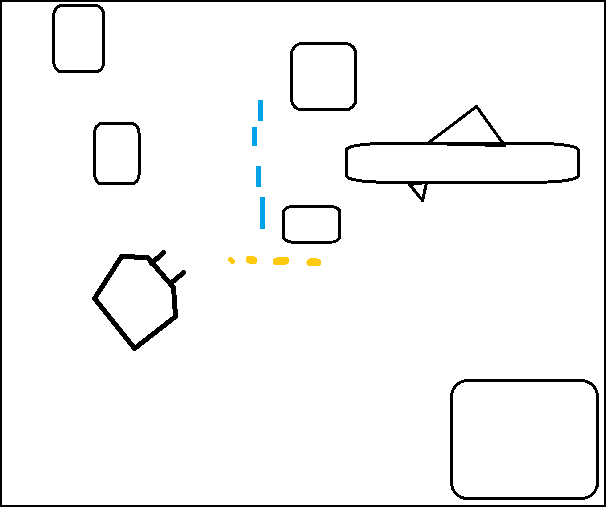

Two popular simulators mentioned in [Amigoni10], Player/Stage and USARSim, will be discussed in this section. The first, Player/Stage, which can be obtained from http://playerstage.sourceforge.net/, is composed of two programs working in conjunction. The Player program allows any computer with a network connection to communicate with sensors on the robot, while the Stage program simulates the robots and sensors in a way that is not resource intensive. The combination of these programs allows for quick simulation. As indicated in [Amigoni10] and shown in Figure 4.1, the black shapes represent obstacles in the environment, while the blue and yellow lines represent objects the robot is to collect.

Figure 4.1 Mock Player/Stage Screen

The simulation software USARSim implements a client-server architecture. Here the server is in charge of maintaining the states of the robots in the simulation. When a client issues a command, the server simply changes the state of the robot. The acceptance of USARSim as a valid simulation solution is shown through its use in the RoboCup rescue virtual robot competition and the IEEE Virtual Manufacturing Automation Competition. The two simulation programs mentioned here, while not an exhaustive list, do represent a common starting point.
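The division of responsibility in such a client-server design can be sketched in a few lines. The example below is a generic, in-process illustration with no networking; the class names, command names, and state fields are hypothetical and do not reflect USARSim's actual protocol or API.

```python
from dataclasses import dataclass


@dataclass
class RobotState:
    x: float = 0.0
    y: float = 0.0


class SimulationServer:
    """Owns the authoritative state of every robot in the simulation."""

    def __init__(self):
        self._robots = {}

    def spawn(self, name):
        self._robots[name] = RobotState()

    def handle(self, name, command, amount):
        """Apply a client command by updating the stored robot state."""
        robot = self._robots[name]
        if command == "move_x":
            robot.x += amount
        elif command == "move_y":
            robot.y += amount
        else:
            raise ValueError(f"unknown command: {command}")
        return robot


class Client:
    """Holds no robot state; it only forwards commands to the server."""

    def __init__(self, server, robot_name):
        self._server = server
        self._robot_name = robot_name

    def send(self, command, amount):
        return self._server.handle(self._robot_name, command, amount)


server = SimulationServer()
server.spawn("robot1")
client = Client(server, "robot1")
client.send("move_x", 2.0)
state = client.send("move_y", -1.0)
print(state)  # RobotState(x=2.0, y=-1.0)
```

Keeping all state on the server side means several clients can command robots in the same world without having to agree among themselves on what the world currently looks like.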

4.3 Real-Time

As touched on in the previous section, metrics are tightly coupled with the domain of the robotic system. Elements of real-time components may exist in other systems; however, those systems as a whole do not focus on maintaining the characteristics of a real-time system. For instance, while observing the effect of a worst case performance scenario is valuable, to maintain real-time performance the system load should remain under 50% of its capacity [Yoon09]. Real-time systems do share some characteristics with general robotic systems, including focusing tuning efforts on bottleneck components. Humanoid robots in particular possess component bottlenecks, but require the overall system to adhere to real-time requirements. Bipedal movement depends on center of gravity and zero moment point calculations in real time in order to stay upright and mimic the human gait [Harada05]. As Harada and others demonstrate, simulation is useful in real-time robotic systems, but should be coupled with experimentation to verify the results. In the case of bipedal robots, failure of the system can result in physical damage to the robot itself, and thus real-time constraints must be maintained. While real-time robotic systems are only touched on briefly in this paper, they are an area of robotics worth further research and reading.
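As a minimal sketch of the 50% guideline, the code below treats system load as the CPU utilization of a set of periodic tasks, that is, the sum of each task's execution time divided by its period. That interpretation, the task set, and the function names are assumptions made for illustration and are not taken from [Yoon09].

```python
def utilization(tasks):
    """Total CPU utilization: sum of execution_time / period over all tasks."""
    return sum(execution_time / period for execution_time, period in tasks)


def within_budget(tasks, limit=0.5):
    """True if the task set keeps the load under the given fraction of capacity."""
    return utilization(tasks) < limit


# Hypothetical periodic tasks as (worst-case execution time in ms, period in ms).
control_tasks = [(1.0, 4.0), (2.0, 16.0)]
print(utilization(control_tasks))    # 0.375
print(within_budget(control_tasks))  # True

# Adding one more hypothetical task pushes the load past the 50% guideline.
overloaded = control_tasks + [(10.0, 40.0)]
print(utilization(overloaded))    # 0.625
print(within_budget(overloaded))  # False
```

A check of this kind only covers average load; worst case latency for a specific task would still need to be measured on the target system.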

5. Evaluation Pitfalls

As the field of Robotics covers many different domains, there exists a greater chance for evaluation error and bias. Extra care must be used when defining the domain the system will operate in, and what metrics are truly important. Experimentation will help remove some unneeded metrics; however, the development time of a system can be increased beyond an acceptable time if too many variables are under observation.

5.1 Metrics and Data Plotting

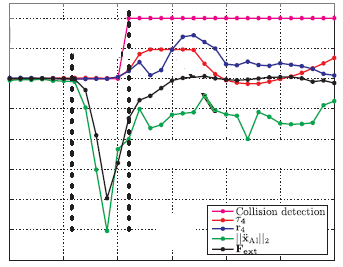

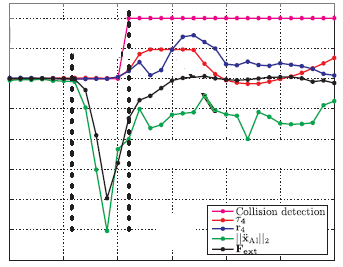

While some research papers present solid cases for properly defining metrics, clear presentation of data is sometimes overlooked, as in Figure 5.1 [Haddadin09]. In this case lines are labeled by variable names rather than by keywords, which would increase the readability of the chart. This is particularly important as the number of lines presented falls in the 5 to 7 range, where the ability to quickly read and comprehend a line chart wanes.

Figure 5.1: A line chart at the upper limit of the acceptable number of lines

5.2 Modeling

In the case of modeling robotic systems, one of the most common pitfalls is failure to account for real world anomalies and noise. While the robot is generally thought of as the system under study, the environment in which it operates is heavily correlated with its performance. Whether performance is measured in time to completion or slanted toward human criteria like ease of use, the assumption of a perfect world will introduce errors into the model that will propagate into production systems if not caught.
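The effect of the perfect world assumption can be seen in even a very small model. The sketch below compares an idealized range sensor with one that adds zero-mean Gaussian noise; the noise level, the distance, and the function names are hypothetical, and real sensors may exhibit bias, dropouts, or other anomalies that this simple model leaves out.

```python
import random
import statistics


def ideal_range(true_distance):
    """Perfect-world sensor model: reports the exact distance."""
    return true_distance


def noisy_range(true_distance, sigma, rng):
    """Sensor model with zero-mean Gaussian noise (an assumed noise model)."""
    return true_distance + rng.gauss(0.0, sigma)


def mean_abs_error(sensor, true_distance, samples):
    """Average absolute error of a sensor model over repeated readings."""
    return statistics.fmean(
        abs(sensor(true_distance) - true_distance) for _ in range(samples)
    )


rng = random.Random(42)  # fixed seed so the run is repeatable
ideal_err = mean_abs_error(ideal_range, 2.0, 1000)
noisy_err = mean_abs_error(lambda d: noisy_range(d, 0.05, rng), 2.0, 1000)

print(ideal_err)              # 0.0
print(noisy_err > ideal_err)  # True
```

Any controller tuned only against `ideal_range` never sees a nonzero error, so its behavior under realistic readings is untested until the noisy model, or the real robot, is used.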

5.3 Simulation and Benchmarking

As indicated in [Helmer09], benchmarking becomes increasingly useful when applied to a well defined system in a specific domain, as opposed to the field of robotics in general. The use of benchmarks as a one-size-fits-all solution across all aspects of robotics can actually have an adverse effect on system design, due to a mismatch in scope and complexity between the benchmark and the system.

6. Conclusion

In conclusion, the concepts presented in this paper with regard to robotics can be applied to many other fields as well. The idea that a plan should be well thought out before testing is part of all areas of robotics mentioned, and in general is a best practice. For robotics in particular, it is critical that the proper level of autonomy be determined before analysis in order to properly evaluate system performance. The goal of this paper was to show three levels of metric selection and analysis. These levels included metrics focused on the human side of the system, metrics with a balance of human and robot factors, and metrics focused on the robotic side of the system. The pitfalls mentioned are closely tied to the area of robotics a system is part of, and vary from metric selection to absence of the human element in the design process. It is also important to be mindful of the goals of the system, and to avoid erroneous metrics that will not provide useful analysis.

7. Acronyms

HRI- Human Robot Interaction

UAV- Unmanned Aerial Vehicle

FIM- Functional Independence Measure

RSME- Rating Scale for Mental Efforts

8. References

(In the order of importance.)

[Helmer09] Scott Helmer, David Meger et al., "Semantic Robot Vision Challenge: Current State and Future Directions", 2009, http://arxiv.org/PS_cache/arxiv/pdf/0908/0908.2656v1.pdf

"This paper speaks to the need for well defined systems before choosing metrics."

[Yoon09] Hobin Yoon, Jungmoo Song et al., "Real-Time Performance Analysis in Linux-Based Robotic Systems", 2009, http://www.kernel.org/doc/ols/2009/ols2009-pages-331-339.pdf

"Discusses metrics needed to analyze real-time systems."

[Haddadin09] Sami Haddadin et al., "Requirements for Safe Robots: Measurements, Analysis and New Insights", 2009, The International Journal of Robotics Research, http://www.phriends.eu/ijrr_09.pdf

"This article shows how the labels of charts can make a difference in readability."

[Tsui08] Katherine M. Tsui, Holly A. et al., "Survey of Domain-Specific Performance Measures in Assistive Robotic Technology", 2008, http://cres.usc.edu/pubdb_html/files_upload/591.pdf

"This paper discusses the various aspects of assistive robotics and how to gauge effectiveness."

[Feil07] David Feil-Seifer, Kristine Skinner and Maja J. Mataric, "Benchmarks for evaluating socially assistive robotics", Interaction Studies: Psychological Benchmarks of Human-Robot Interaction, Vol. 8, No. 3, pages 423-429, 2007

"This paper talks about the social aspect of assistive robotics."

[Freedy07] Amos Freedy et al., "Measurement of Trust in Human-Robot Collaboration", 2007, http://www.percsolutions.com/papers/human-robot-interaction/MITPAS_Trust_CTS_2007.pdf

"This paper talks about how trust is key in a system involving human robot interaction."

[Tsui09] Katherine Tsui, David Feil-Seifer, et al., "Performance Evaluation Methods for Assistive Robotic Technology", 2009, http://robotics.cs.uml.edu/fileadmin/content/publications/2009/SpringerChapter-tsui-fseifer-mataric-yanco.pdf

"This paper discusses methods for evaluating effectiveness of assistive robotics."

[Amigoni10] Francesco Amigoni, Viola Schiaffonati, "Good Experimental Methodologies and Simulation in Autonomous Mobile Robotics", 2010, ftp://ftp.elet.polimi.it/users/Francesco.Amigoni/pib7.pdf

"This paper talks about how to evaluate the design of autonomous systems."

[Crandall07] Jacob W. Crandall and M. L. Cummings, "Identifying Predictive Metrics for Supervisory Control of Multiple Robots", 2007, http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.85.8478&rep=rep1&type=pdf

"This paper discusses human interaction with multiple robots and metrics for analyzing the system."

[Harada05] Kensuke Harada, Shuuji Kajita, et al., "An Analytical Method on Real-time Gait Planning for a Humanoid Robot", 2005, http://www.lira.dist.unige.it/teaching/SINA_08-09/SINA_PREV/library/HUMANOIDS2004/paper/60_paper.pdf

"This paper talks about real-time metrics as they pertain to humanoid robotics."

Related Material:

The following articles are related but not discussed in this paper.

- C. R. Burghart & A. Steinfeld, "Metrics for Human-Robot Interaction", 2008, http://www.hri-metrics.org/metrics08/index.html

- Terrence W. Fong et al, "Common Metrics for Human-Robot Interaction", 2006, http://www.ri.cmu.edu/publication_view.html?pub_id=5299

- Jason R. Schenk/Robert L. Wade, "Robotic systems technical and operational metrics correlation", 2008, http://portal.acm.org/citation.cfm?id=1774692

- Demiris, Y. and Meltzoff A., "The robot in the crib: a developmental analysis of imitation skills in infants and robots", 2008, http://onlinelibrary.wiley.com/doi/10.1002/icd.543/abstract

- Bicho, E., "Dynamic approach to behavior-based robotics : design, specification, analysis, simulation and implementation", 2008, http://lakh.unm.edu/handle/10229/104991

- Cengiz Kahraman et al, "Fuzzy multi-criteria evaluation of industrial robotic systems", 2007, http://www.sciencedirect.com/science?_ob=ArticleURL&_udi=B6V0C-4BVC8XW-9&_user=10&_coverDate=02/25/2005&_alid=1666501407&_rdoc=14&_fmt=high&_orig=search&_origin=search&_zone=rslt_list_item&_cdi=5643&_sort=r&_st=13&_docanchor=&view=c&_ct=54&_acct=C000050221&_version=1&_urlVersion=0&_userid=10&md5=c6d05cfc111a777170dd6e33b69ff9fd&searchtype=a

- Gang Feng, "A Survey on Analysis and Design of Model-Based Fuzzy Control Systems", 2006, http://ieeexplore.ieee.org/document/1707754/

- Edward Tunstel/Mark Maimone, "Mars Exploration Rover Mobility and Robotic Arm Operational Performance", 2005, http://www-robotics.jpl.nasa.gov/publications/Mark_Maimone/MobIDDPerf90sols.pdf

- ARC Advisory Group, "Siemens PLM Software's Robotics Simulation: Validating & Commissioning the Virtual Workcell", 2008, http://www.plm.automation.siemens.com/en_us/Images/Siemens%20PLM%20Robotics%20WP-%20Final_tcm1023-58463.pdf

- Madhavan, Raj Editor, "Performance Evaluation and Benchmarking of Intelligent Systems", 2009, http://libcat-ind.wustl.edu/?itemid=|iii|b4309454

- Thomas Thueer and Roland Siegwart, "Kinematic Analysis and Comparison of Wheeled Locomotion Performance", 2008, http://robotics.estec.esa.int/ASTRA/Astra2008/S08/08_04_Thueer.pdf

- Roman Neruda, Stanislav Slusný, "Performance Comparison of Two Reinforcement Learning Algorithms for Small Mobile Robots", International Journal of Control and Automation, Vol. 2, No. 1, March, 2009, http://www.sersc.org/journals/IJCA/vol2_no1/7.pdf

Last modified on April 24, 2011

This and other papers on latest advances in performance analysis are available on line at http://www.cse.wustl.edu/~jain/cse567-11/index.html
