Alexander Benjamin - Study Abroad SU10

Part I: Looking back on the Israel Trip

I recently traveled to Israel to visit the Technion, Israel's top technical school and an institution respected around the world. As a rising junior studying electrical engineering, computer science, and business, I traveled with other students from the Electrical and Systems Engineering department at Washington University in St. Louis.

The trip to Israel held particular importance for me for both cultural and academic reasons. All of my family comes from a Jewish background, but before this trip I had never been to Israel. The opportunity to visit the cultural center of my religion therefore meant a great deal to me.

From an academic perspective, it was a unique chance to learn from, and exchange ideas with, one of the top departments in my field in the world. I was very impressed by how much the group accomplished in the allotted time. We toured and conducted experiments in many labs at the Technion while still finding time to visit many of the most important and historically significant sites in Israel.

The most striking aspect of the Technion was the scale of its research. Its electrical engineering department is many times the size of ours at Wash U. Research also seemed to be integrated into the undergraduate curriculum in different ways, which gave us ideas to pursue at Wash U. Because of mandatory military service in Israel, most college students there are several years older than their U.S. counterparts. It therefore isn’t uncommon for students to be married while in college, which I found to be an interesting contrast. It certainly makes for a different, more mature social atmosphere.

The time we spent touring sites around Israel was an unforgettable experience. I have been hearing about some of the places we visited from family and friends my entire life. It was edifying to see Israeli customs and culture firsthand, since I had always held certain notions based on what I had heard from others. Walking around the Old City of Jerusalem was especially meaningful. Standing before the Western Wall was an awe-inspiring experience, and one I will always carry with me.

This experience will aid me immensely in my growth as a person and as a student. It was a great opportunity to learn about a country and culture with an ancient and very rich history, and also to encourage the exchange of ideas between our institutions. I would also like to thank the donor who made the trip possible. We are all grateful for his support, and grateful that there are people like him who take it upon themselves to broaden the horizons of young scholars.

Part II: Design Considerations for Audio Compression

The world of music is very different from what it was ten years ago, and the music world of ten years ago was itself markedly different from that of a decade earlier. The ways people listen to music have evolved steadily over the last few decades, and listeners’ demands have evolved along with them. People want music with the best possible sound quality that also takes up as little space as possible, for example so that they can carry it on an MP3 player. This paper discusses the considerations and tradeoffs in audio compression, which determines both a file's audio quality and the amount of storage it occupies.

Music files are compressed to reduce the amount of data needed to play a song while minimizing any loss of perceptible sound quality. A decade ago, hard drive storage was much more expensive, so compressing music was the norm. Digital audio players (MP3 players) make compression desirable because it lets people fit more songs onto a device. Compression is also very useful for transferring music over the internet or between devices. Download and transfer speeds have increased notably over the years, but an uncompressed music file still takes much longer to download than a compressed one.
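Some simple arithmetic shows the size difference concretely. The sketch below assumes CD-quality PCM audio (44.1 kHz sample rate, 16 bits per sample, two channels), which the text does not specify. It also assumes a hypothetical four-minute song. It is an illustration rather than a measurement.

```python
# Assumption: CD-quality PCM audio (44,100 samples/s, 16 bits/sample, 2 channels).
SAMPLE_RATE_HZ = 44_100
BITS_PER_SAMPLE = 16
CHANNELS = 2


def pcm_bitrate_kbps(sample_rate=SAMPLE_RATE_HZ, bits=BITS_PER_SAMPLE, channels=CHANNELS):
    """Bit rate of uncompressed PCM audio in kilobits per second."""
    return sample_rate * bits * channels / 1000


def size_megabytes(bitrate_kbps, seconds):
    """File size in megabytes (10**6 bytes) of a stream at the given bit rate."""
    return bitrate_kbps * 1000 * seconds / 8 / 1_000_000


song_seconds = 4 * 60  # hypothetical four-minute song
uncompressed_mb = size_megabytes(pcm_bitrate_kbps(), song_seconds)
compressed_mb = size_megabytes(128, song_seconds)  # e.g. a 128 Kbits/s lossy file

print(f"uncompressed: {uncompressed_mb:.1f} MB")  # 42.3 MB
print(f"128 Kbits/s:  {compressed_mb:.1f} MB")    # 3.8 MB
```

Under these assumptions, the uncompressed file is about eleven times larger than the 128 Kbits/s version. Its download time is longer by the same factor.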

Various compression algorithms have been developed that reduce or alter digital audio data in order to reduce the number of bits needed for playback. The compression process is called encoding and the reverse is called decoding, so the programs that implement these algorithms are commonly called codecs (a contraction of coder-decoder).

There are two general types of data compression: lossless and lossy. As the name suggests, lossless compression algorithms neither permanently discard any of the original data nor transform it irreversibly. Decoding a losslessly compressed file therefore reproduces an exact duplicate of the original digital data. Lossy compression algorithms, by contrast, alter or remove data, which makes the process impossible to reverse. As one might expect, lossy algorithms compress more than lossless ones. A lossless algorithm generally reduces file size to about 50-60% of the original. A lossy algorithm can typically reach 5-20% of the original size while maintaining reasonable sound quality.
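A few lines of Python can demonstrate the distinction. In this sketch, Python's general-purpose `zlib` compressor stands in for a lossless codec. A crude requantization step, which zeroes the 8 least significant bits of each 16-bit sample, stands in for lossy processing. Neither is how a real audio codec works, and the test signal is synthetic.

```python
import math
import struct
import zlib

# Synthetic test signal (an assumption): one second of a 440 Hz tone,
# sampled at 8 kHz and stored as signed 16-bit integers.
RATE = 8000
samples = [int(10000 * math.sin(2 * math.pi * 440 * n / RATE)) for n in range(RATE)]
raw = struct.pack(f"<{len(samples)}h", *samples)

# Lossless stand-in: zlib's decompressed output is byte-for-byte identical.
# (A pure tone is far more repetitive than real music, so the size here
# says little about how well real audio would compress.)
packed = zlib.compress(raw, 9)
restored = zlib.decompress(packed)
print(f"zlib: {len(raw)} -> {len(packed)} bytes, identical after decoding: {restored == raw}")

# Lossy stand-in: zero the 8 least significant bits of every sample.
# Nothing in `lossy` records what those bits were, so they cannot be restored.
lossy = [(s >> 8) << 8 for s in samples]
changed = sum(1 for a, b in zip(samples, lossy) if a != b)
print(f"requantization changed {changed} of {len(samples)} samples")
```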

All non-trivial compression algorithms reduce information redundancy. Redundancy is the use of more bits than necessary to represent a sequence that could be represented with fewer. The most common techniques include coding, linear prediction, and pattern recognition. Coding alters the format in which data is represented. For example, fax machines use a simple form of coding called run-length encoding. It would represent “WWWWBBWWWWWW”, where the letters denote white or black pixels on a page, as simply “4W2B6W”. Note that a coding method is only useful when applied to appropriate data. Run-length encoding, for instance, would not help with data that changes from each sample to the next, such as a photograph. If the sequence were instead “WBRO”, it would encode to “1W1B1R1O”, doubling the original size.

Linear prediction is a mathematical technique that estimates future values of a discrete-time signal as a linear function of previous samples. Linear predictive coding (LPC) uses a linear predictive model to represent the spectral envelope of the digital data. It describes the intensity and frequency content of a block of samples with a set of numbers. Together with the prediction error, these numbers allow the original data to be reproduced.
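The fax-machine example can be reproduced with a short Python sketch. The function names are illustrative. The decoder assumes that the symbols themselves are never digits.

```python
from itertools import groupby


def rle_encode(text: str) -> str:
    """Run-length encode a string, e.g. 'WWWWBBWWWWWW' -> '4W2B6W'."""
    return "".join(f"{len(list(run))}{symbol}" for symbol, run in groupby(text))


def rle_decode(code: str) -> str:
    """Invert rle_encode; a count may span several digits (e.g. '12W')."""
    out, count = [], ""
    for ch in code:
        if ch.isdigit():
            count += ch
        else:
            out.append(ch * int(count))
            count = ""
    return "".join(out)


for line in ["WWWWBBWWWWWW", "WBRO"]:
    encoded = rle_encode(line)
    assert rle_decode(encoded) == line  # run-length encoding is lossless
    print(f"{line!r} ({len(line)} chars) -> {encoded!r} ({len(encoded)} chars)")
# 'WWWWBBWWWWWW' (12 chars) -> '4W2B6W' (6 chars)
# 'WBRO' (4 chars) -> '1W1B1R1O' (8 chars)
```

The second case shows the point made in the text: run-length encoding doubles the size of data that has no runs.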

Pattern recognition uses a statistical model to analyze a set of data and find the parts that repeat. Instead of storing each instance of a repeated sequence, the encoder computes a unique symbolic description for each pattern. It then substitutes that description for every occurrence, saving space.

Music is especially difficult to compress losslessly. Its waveforms are often much more complex than other audio waveforms, such as human speech, and do not lend themselves as well to pattern detection or predictive models. The variation is also often chaotic even between individual samples, so long runs of identical values (as in the “WWWWBBWWWWWW” example) rarely occur. However, signal processing techniques can manipulate the data to make the techniques described above more applicable. FLAC and Shorten, two well-known lossless codecs, use linear prediction to model the signal. To make this work well, they convolve the signal with the filter [-1 1], which helps to whiten it (i.e., flatten its power spectrum). Reducing the variability in the spectrum makes the linear predictive models more effective. The decoder can easily reverse the whitening by applying the inverse filter, which for [-1 1] is a running sum. Other codecs use similar filtering techniques to whiten the spectrum for linear predictive coding.
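Filtering with [-1 1] amounts to predicting that each sample equals the previous one and keeping only the prediction error (the residual). The sketch below applies this to a hypothetical list of integer samples. The residuals are much smaller in magnitude than the samples, and a running sum restores the original exactly. Real encoders such as FLAC and Shorten choose among several predictors and then entropy-code the residuals. That stage is omitted here.

```python
from itertools import accumulate


def first_difference(samples):
    """Residual of the predictor x_hat[n] = x[n-1], taking x[-1] as 0."""
    return [cur - prev for prev, cur in zip([0] + samples[:-1], samples)]


def undo_first_difference(residual):
    """Inverse filter: a running sum rebuilds the samples exactly."""
    return list(accumulate(residual))


# Hypothetical slowly varying 16-bit samples (a smooth waveform).
samples = [1000, 1040, 1075, 1100, 1118, 1125, 1120, 1104, 1080, 1049]
residual = first_difference(samples)

print(residual)  # [1000, 40, 35, 25, 18, 7, -5, -16, -24, -31]
assert undo_first_difference(residual) == samples  # the step is lossless
```

After the first value, the residuals lie between -31 and 40 and fit in 7 signed bits. The raw samples need 12. The entropy coder in a real codec turns this into smaller files.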

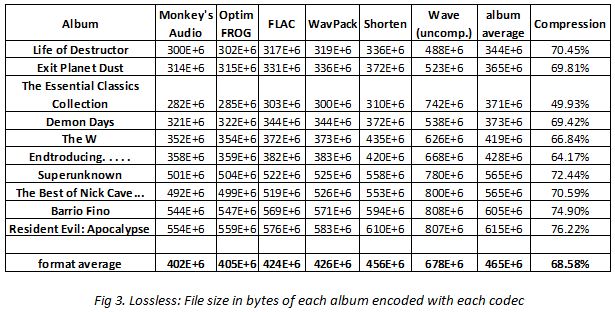

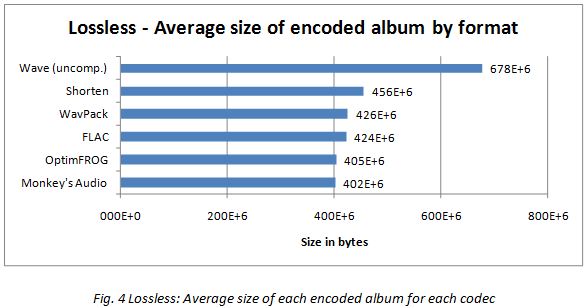

Lossless music compression algorithms retain all of the high-fidelity audio data and reproduce the original digital data exactly on decompression. They are therefore judged not on the quality of the result but mainly on how much they compress and how fast they run. Some algorithms lean slightly toward a higher degree of compression (smaller files). Others lean slightly toward faster compression and decompression, which is especially useful for real-time applications.

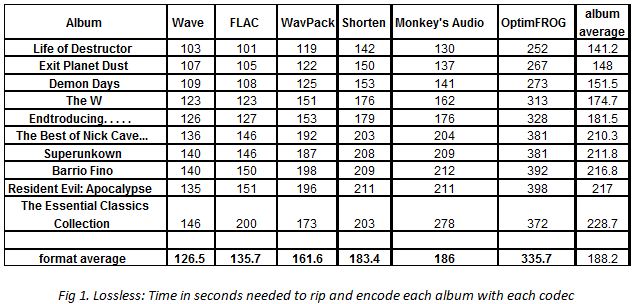

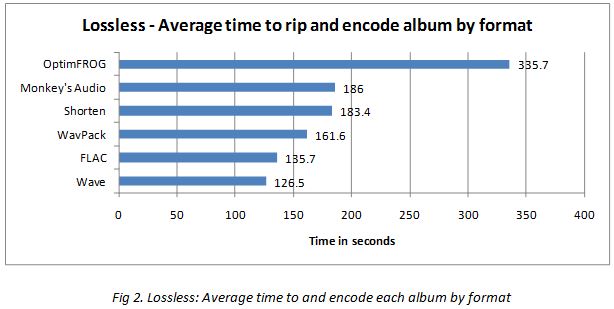

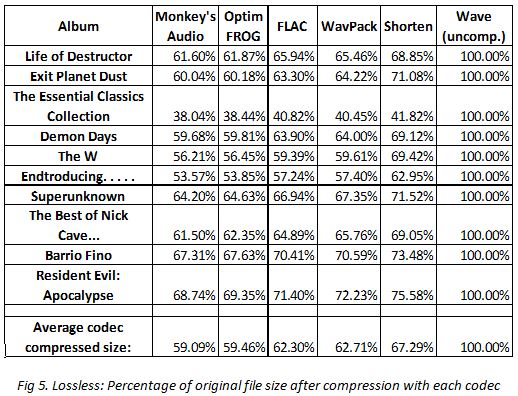

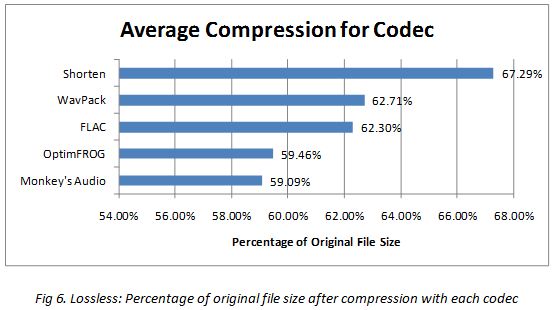

The test results in the figures compare how mainstream lossless music codecs perform relative to one another on these criteria. The albums used in the test were chosen to represent different musical styles, in order to show how style affects the effectiveness and speed of the codecs. Because the WAV format is uncompressed, it serves as the baseline for judging the degree of compression.

As can be seen, the time needed to rip and encode an album varies significantly. It depends both on the complexity of the album's digital data and on the performance of each codec. Speed trades off against the degree of compression each codec achieves. The tradeoff is not linear, however, and some codecs are better than others at encoding, decoding, and compressing particular styles of music.

The first point that becomes immediately obvious is that no encoder ‘wins’ in more than one category. This reinforces the point that compression must be traded for speed. The results do suggest that some codecs have optimized this tradeoff better than others; in other words, they sacrifice less time to achieve good compression.

These results show that OptimFROG compresses very well relative to the other codecs, but it takes almost twice as long to compress as any of them. Anyone familiar with the codec would expect this result, because its makers optimized almost entirely for the degree of compression.

Based on these results, Monkey’s Audio and FLAC appear to strike the best balance. The former achieves notably greater compression, while the latter compresses notably faster. Depending on whether a user values smaller files or faster encoding, one of these two codecs seems the best choice for general use.

To verify that the compression was indeed lossless, MD5, SHA-1, and CRC-32 hash values were generated for the uncompressed WAV file. The file was then put through a compression/decompression cycle with each codec, and hash values were generated for each resulting file. The hashes of the original file matched those of every round-tripped file, and all of the files were the same size.
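The verification step can be scripted with Python's standard library. `hashlib` provides MD5 and SHA-1, and `zlib.crc32` provides CRC-32. The helper names and file paths below are hypothetical. This sketches the procedure described above and is not the tool that was actually used.

```python
import hashlib
import zlib


def fingerprints(data: bytes) -> dict:
    """MD5, SHA-1 and CRC-32 of a byte string, as hex strings."""
    return {
        "md5": hashlib.md5(data).hexdigest(),
        "sha1": hashlib.sha1(data).hexdigest(),
        # zlib.crc32 already returns an unsigned value in Python 3; the mask is defensive.
        "crc32": f"{zlib.crc32(data) & 0xFFFFFFFF:08x}",
    }


def is_bit_identical(original: bytes, round_tripped: bytes) -> bool:
    """True only if the sizes and all three hashes match."""
    return len(original) == len(round_tripped) and fingerprints(original) == fingerprints(round_tripped)


# Usage with files on disk (hypothetical paths):
# with open("album.wav", "rb") as a, open("album_decoded.wav", "rb") as b:
#     print(is_bit_identical(a.read(), b.read()))
```

A decoder may write different WAV header metadata even when the audio samples are identical. A mismatch on whole files is therefore worth investigating rather than treated as proof of loss.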

The other type of compression is termed ‘lossy’. Lossy compression is actually much more widely used than lossless, which might seem surprising at first. However, lossy compression can reduce a file to about 5-20% of its original size while keeping quality good enough that the average listener cannot distinguish it from the original. Its appeal for mainstream applications lies primarily in the transfer rate of the data. Digital television, radio broadcasts, DVDs, and satellite transmissions all use lossy compression to speed up transfer and make the data more accessible to end users.

Lossy compression discards or de-emphasizes pieces of the digital data that are either imperceptible to the human ear or less critical. Unlike lossless compression, lossy compression proceeds until the data reaches a target size. That target is specified as a bit rate, i.e., the number of bits that may be used to describe each second of music. A color palette for images offers a useful comparison. If a photograph is redrawn using only 16 colors, details will be missing and the image will look drastically different from the original. The more colors used to draw the image, the more detail is visible. Although there could theoretically be an infinite number of color shades, beyond a certain point the human eye can no longer detect further detail. This analogy translates very well to lossy music compression and bit rates. A high-fidelity music file contains far more information, or detail, than the human ear can detect. A significant amount of data can therefore be removed without noticeably changing how a person perceives the music. The average bit rate at which a listener can notice a difference in sound is regularly debated and is of interest to both music listeners and music producers.
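In signal-processing terms, the palette analogy corresponds to quantization. Describing each value with fewer bits leaves fewer available levels and produces a larger error. The sketch below quantizes a synthetic sine wave at several bit depths and reports the worst-case error. It illustrates the analogy only: lossy codecs such as MP3 do not simply lower the bit depth. Instead, they use a psychoacoustic model to decide where to spend bits.

```python
import math


def quantize(x: float, bits: int) -> float:
    """Round x in [-1, 1] to the nearest of 2**bits evenly spaced levels."""
    step = 2 / (2 ** bits - 1)
    return round((x + 1) / step) * step - 1


# Synthetic signal (an assumption): one cycle of a sine wave, 100 samples.
signal = [math.sin(2 * math.pi * n / 100) for n in range(100)]

for bits in (2, 4, 8, 16):
    worst = max(abs(x - quantize(x, bits)) for x in signal)
    print(f"{bits:2d} bits ({2 ** bits:5d} levels): max error {worst:.6f}")
```

Each extra bit roughly halves the worst-case error, much as adding colors to a palette reveals finer detail.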

It is important to understand how lossy codecs decide which digital data is less critical than other data. Lossy compression still applies the same techniques as lossless compression. However, lossy codecs have the added responsibility of deciding which sounds to remove, not just how to compress them. They also need to decide how many bits (i.e., how much detail) to devote to each sound.

The guiding theory in lossy compression comes primarily from psychoacoustics, the study of how humans perceive sound. Psychoacoustics recognizes that the human ear cannot perceive every sound. People can detect sounds only within a finite frequency range and above a minimum sound level, called the absolute threshold of hearing. Under certain circumstances humans can hear sounds from 20 Hz to 20,000 Hz, but the range is generally narrower for most people and shrinks with age. The ear can also perceive changes in pitch only so finely. Between 1 and 2 kHz the frequency resolution is about 3.6 Hz, so smaller pitch changes go unnoticed. Finally, auditory masking describes how the presence of one sound can affect the perception of another, depending on their relative volumes and frequencies. Depending on the attributes of simultaneous sounds, some may be completely masked, or made less prominent, to a listener. Lossy compression algorithms exploit these psychoacoustic effects. They determine which sounds can be removed entirely and which can be described less completely (with fewer bits).
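The absolute threshold of hearing is often approximated with a curve fit attributed to Terhardt, which gives the threshold in dB SPL as a function of frequency. The sketch below uses that approximation to decide whether a lone tone would be audible. The formula does not come from this paper, and individual thresholds vary. A real encoder also combines the threshold with masking models rather than using it alone.

```python
import math


def absolute_threshold_db(freq_hz: float) -> float:
    """Approximate absolute threshold of hearing in dB SPL (Terhardt's curve fit)."""
    f = freq_hz / 1000.0  # frequency in kHz
    return 3.64 * f ** -0.8 - 6.5 * math.exp(-0.6 * (f - 3.3) ** 2) + 1e-3 * f ** 4


def is_audible(freq_hz: float, level_db_spl: float) -> bool:
    """Roughly: a lone tone is audible only if it exceeds the threshold."""
    return level_db_spl > absolute_threshold_db(freq_hz)


for f in (50, 1000, 3300, 10000, 16000):
    print(f"{f:>6} Hz: threshold {absolute_threshold_db(f):6.1f} dB SPL")

# A 20 dB tone is audible near 3.3 kHz, where the ear is most sensitive,
# but falls below the threshold at 16 kHz.
print(is_audible(3300, 20), is_audible(16000, 20))  # True False
```

An encoder could skip any component that falls below this curve, because spending bits on it would be wasted.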

Lossy compression algorithms generally aim for a target bit rate, so the data they classify as less critical changes with the degree of compression. High-fidelity music contains much more digital data than is critical. These algorithms can therefore achieve very high levels of compression without perceptibly changing what a listener hears. At very low bit rates, however, lossy algorithms are forced to sacrifice noticeable sound quality.

Modern codecs can encode music at either a constant bit rate (CBR) or a variable bit rate (VBR). With a constant bit rate, every frame (a short block of samples) is described using the same number of bits. At a target of 128 Kbits/s, for example, every second of the song receives 128 kilobits. A variable bit rate lets the encoder use more or fewer bits for each frame while keeping the specified average across the song. With VBR, an encoder can use fewer bits to describe a simple passage and spend the savings on a more complex one. VBR is therefore generally preferable, because it allows music to be represented more accurately at lower bit rates.
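The following toy model illustrates the difference. It starts from a fixed total budget: the average rate times the song length. CBR gives every segment the same share, while VBR divides the budget in proportion to each segment's complexity. The complexity scores and one-second segments are hypothetical. Real encoders decide allocation with psychoacoustic models rather than a simple proportional rule.

```python
def allocate_cbr(total_bits: int, n_segments: int) -> list[int]:
    """Constant bit rate: every segment receives the same number of bits."""
    return [total_bits // n_segments] * n_segments


def allocate_vbr(total_bits: int, complexity: list[float]) -> list[int]:
    """Variable bit rate (toy rule): bits proportional to each segment's complexity."""
    total = sum(complexity)
    return [int(total_bits * c / total) for c in complexity]


# Hypothetical complexity scores: a quiet intro, a dense chorus, a quiet outro.
complexity = [1, 1, 4, 4, 1, 1]
budget = 6 * 128_000  # six one-second segments at an average of 128 Kbits/s

cbr = allocate_cbr(budget, len(complexity))
vbr = allocate_vbr(budget, complexity)
print("CBR:", cbr)  # [128000, 128000, 128000, 128000, 128000, 128000]
print("VBR:", vbr)  # [64000, 64000, 256000, 256000, 64000, 64000]
```

Both schemes spend the same total. VBR simply moves bits from the quiet passages to the dense chorus.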

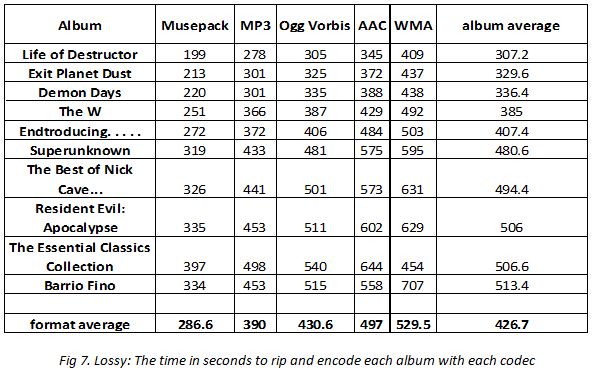

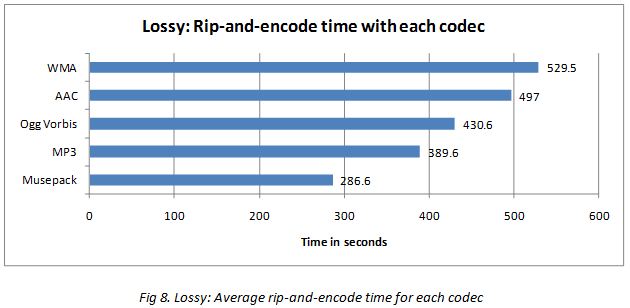

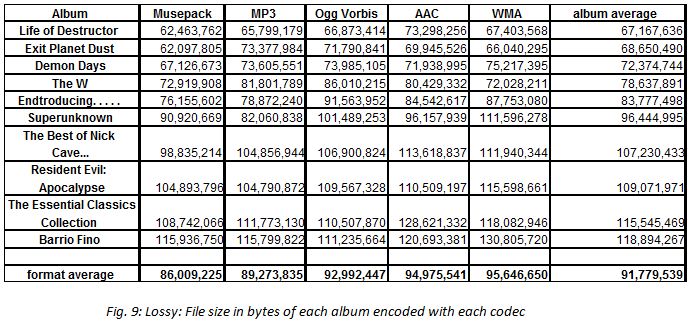

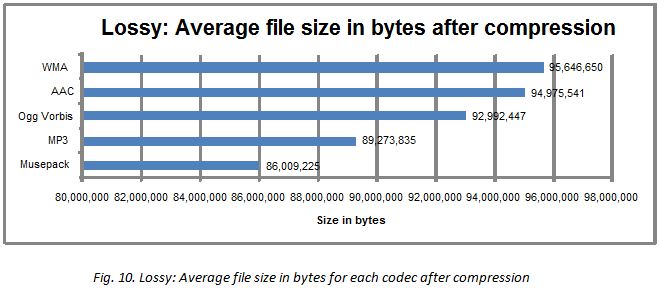

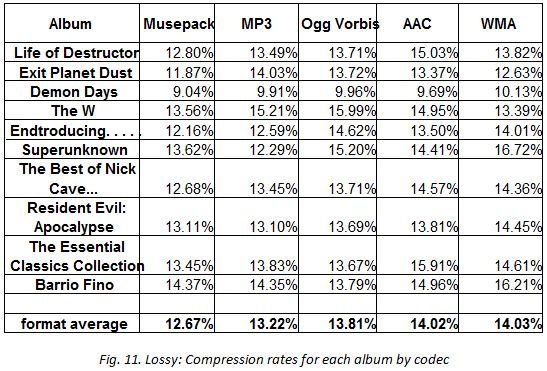

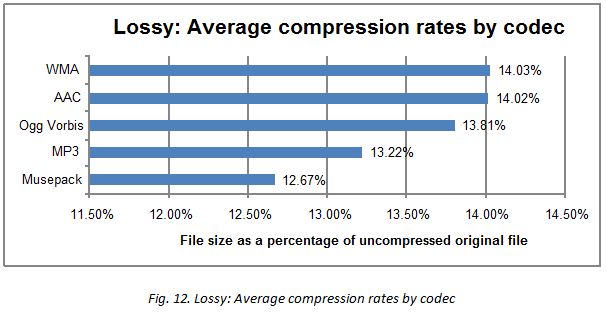

The test results in the figures compare how mainstream lossy music codecs perform relative to one another. Note that this analysis does not address the quality of the compressed music, which is clearly a vital criterion for judging a lossy compression algorithm. Reliable results would require polling and blind testing with a wide audience, which is beyond the scope of this analysis. Instead, other studies in this area are discussed to address perceptible quality differences as a function of bit rate. The music was encoded at 192 Kbits/s, except for AAC (MP4) at 150 Kbits/s and Musepack at 210 Kbits/s. These rates are generally accepted to cause no obvious quality problems for their respective codecs. All files were encoded with VBR except for WMA, which used CBR. The albums used in the test were chosen to represent different musical styles, in order to show how style affects the effectiveness and speed of the codecs.

Several points are worth noting. First, encoding times were widely spread across the codecs; no two results were within 30 seconds of each other. Also, lossy encoding took uniformly much longer than lossless encoding. This suggests that the psychoacoustic calculations and models used to decide which sounds to remove or de-emphasize add significant run time.

The file sizes produced by the different codecs varied considerably for each album. The most successful codec also differed from album to album. This suggests that each codec's effectiveness may vary significantly with musical style.

The degree of compression achieved by lossy codecs is remarkable, given that quality at these levels is generally accepted to be almost, if not entirely, indistinguishable from an uncompressed file. These files were compressed to roughly 192 Kbits/s and came to around 13.5% of the original file size. This suggests that lossy compression is probably the better choice for applications other than archiving music.
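The reported ratio agrees with simple arithmetic if the source albums are CD-quality PCM. This is an assumption, since the text does not state the source format. A lossy file's size relative to the uncompressed WAV is roughly its bit rate divided by 1,411.2 Kbits/s. This estimate ignores container overhead and the fact that a VBR target is only an average.

```python
# Assumption: 16-bit stereo PCM at 44.1 kHz, i.e. 1411.2 Kbits/s uncompressed.
CD_BITRATE_KBPS = 44_100 * 16 * 2 / 1000


def size_fraction(bitrate_kbps: float) -> float:
    """Approximate compressed size as a fraction of the uncompressed audio."""
    return bitrate_kbps / CD_BITRATE_KBPS


# The bit rates used in the lossy test, plus the common 128 Kbits/s.
for rate in (128, 150, 192, 210):
    print(f"{rate} Kbits/s -> {size_fraction(rate):.1%} of the WAV size")
```

At 192 Kbits/s this gives about 13.6%, close to the roughly 13.5% observed.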

As mentioned previously, this still does not address the question of which codec produces the highest quality recording when compressed to a given bit rate. When music files are compressed, the compression can leave artifacts if the data is represented with too few bits to preserve all of the perceivable sound. Whether or not a listener will be able to hear the difference between music files at various rates of compression depends on a number of factors, including familiarity with the music, quality of the sound source, and sensitivity of the listener.

A reliable experiment to find the bit rate at which music becomes distinguishably different is outside the scope of this analysis. The pursuit itself seems somewhat futile, because the answer will always depend on subjective factors. These include the quality of the listener’s sound system and how attuned his or her ear is to music. Most studies conclude that 128 Kbits/s provides good quality for the average listener on a typical sound system. Any lower bit rate will probably produce obvious compression artifacts, which bother some listeners more than others. The obvious recommendation is therefore to encode music at no less than 128 Kbits/s. Listeners can then experiment with their own setup to find the bit rate above which they no longer notice a difference. It is generally accepted that non-experts cannot tell the difference between music encoded at 192 Kbits/s and music encoded at higher bit rates.

Audio compression is a field in constant development as its models, optimizations, and algorithms become more advanced. Different people will likely always have very individual preferences for music format and quality, but the topic matters to people on both ends of the spectrum. Music producers are especially interested, because compression can affect their design decisions. A song mixed to sound best at 320 Kbits/s may not sound as good at lower bit rates. Mainstream listeners, however, use iPods and other portable devices that rarely hold files above 128 Kbits/s. Recognizing this, some music producers have started designing songs to sound their best when compressed at these levels. So many individual variables factor into a particular user’s music preferences that there is likely no single best answer. However, the above analysis should give some indication of the considerations and tradeoffs involved.