Robotic Movement

| Line 14: | Line 14: | ||

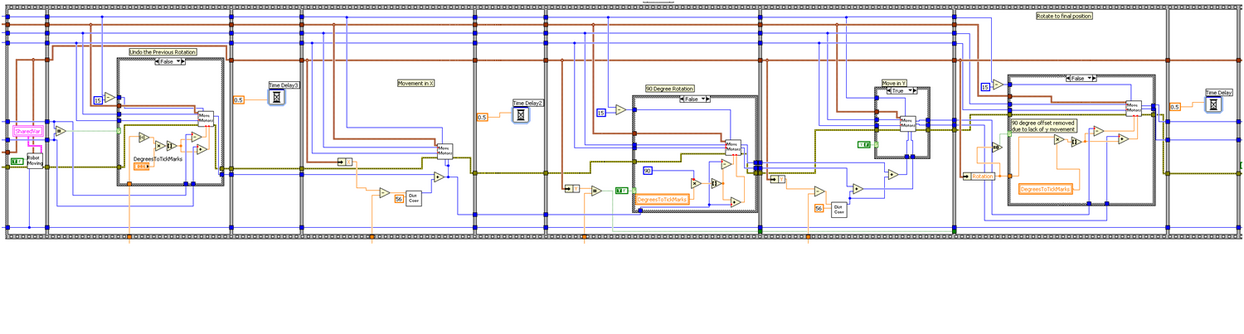

An image of the sequence structure LabVIEW VI is shown below. | An image of the sequence structure LabVIEW VI is shown below. | ||

| + | |||

| + | |||

| + | |||


Latest revision as of 06:54, 6 May 2010

<sidebar>Robotic Sensing: Adaptive Robotic Control for Improved Acoustic Source Localization in 2D Nav</sidebar>

The commands for microphone placement are determined by the Matlab adaptive controller, but the algorithm that carries out the actual robotic movement was written in LabVIEW. When the system was extended to two-dimensional movement, this LabVIEW code had to be updated, because the robots no longer moved along a straight track. To move in two dimensions, the robots needed an algorithm that commands the order in which they perform their movements along the x axis, their movements along the y axis, and their rotations. This algorithm was implemented as a sequence of movement steps in a LabVIEW VI called “robot2D”. The sequence had to handle every possible movement: forward and back, left and right, and rotation to any angle up to 180 degrees in either direction. Further, the absolute position coordinates passed to LabVIEW by the controlling algorithm had to be converted into relative distances between each robot's current position and its new, intended position.
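The conversion from absolute coordinates to relative moves can be sketched as follows. This is a minimal Python illustration, not the LabVIEW robot2D VI itself: the `Pose` type and the `relative_move` and `wrap_angle` functions are hypothetical names, and headings are assumed to be in degrees measured from the +x axis. Wrapping the angle difference keeps every commanded rotation within 180 degrees in either direction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    """Hypothetical robot pose: position along x and y, heading in degrees."""
    x: float
    y: float
    heading_deg: float


def wrap_angle(deg: float) -> float:
    """Wrap an angle into (-180, 180] so no rotation exceeds 180 degrees."""
    wrapped = deg % 360.0  # now in [0, 360)
    if wrapped > 180.0:
        wrapped -= 360.0
    return wrapped


def relative_move(current: Pose, target: Pose) -> tuple[float, float, float]:
    """Convert an absolute target pose into relative (dx, dy, dtheta) commands."""
    dx = target.x - current.x
    dy = target.y - current.y
    dtheta = wrap_angle(target.heading_deg - current.heading_deg)
    return dx, dy, dtheta


if __name__ == "__main__":
    here = Pose(x=10.0, y=0.0, heading_deg=30.0)
    there = Pose(x=25.0, y=0.0, heading_deg=-170.0)
    print(relative_move(here, there))  # (15.0, 0.0, 160.0)
```

In the example, a naive difference of -200 degrees is replaced by the equivalent 160-degree turn in the other direction.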

Left and right movements, along the x axis, were handled as before: both wheels on each robot are turned by the difference between the robot's current position and its new position. Wheel rotation is tracked as an absolute tick count maintained by each Lego Mindstorms NXT.
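For an x-axis move, the wheel command is the position difference expressed in encoder ticks, added to each wheel's absolute count. The sketch below assumes a hypothetical calibration constant `TICKS_PER_CM`; the page does not give the real value, so it would have to be measured on the hardware. The function names are likewise illustrative.

```python
# Hypothetical calibration: encoder ticks per centimetre of travel.
# The real value depends on the wheel diameter and must be measured.
TICKS_PER_CM = 20.0


def x_move_ticks(current_x_cm: float, target_x_cm: float) -> int:
    """Ticks to turn BOTH wheels (same sign) to travel along the x axis.

    Positive values move toward +x, negative values toward -x.
    """
    return round((target_x_cm - current_x_cm) * TICKS_PER_CM)


def wheel_targets(left_abs: int, right_abs: int, delta_ticks: int) -> tuple[int, int]:
    """New absolute tick targets for each wheel, given the NXT's current absolute counts."""
    return left_abs + delta_ticks, right_abs + delta_ticks


if __name__ == "__main__":
    delta = x_move_ticks(10.0, 25.0)
    print(delta, wheel_targets(1000, 980, delta))  # 300 (1300, 1280)
```

Because both wheels receive the same delta, the robot drives straight without changing its heading.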

Rotations were accomplished by turning the two wheels in opposite directions by equal amounts; calibration showed that about 2 tick marks produce a rotation of 1 degree. After a rotation, a robot is no longer aligned with the x axis. Each time a new command is issued, the previous rotation must therefore be undone, by reversing its rotational tick changes, before the robot can move along the x axis again. These rotational tick changes are carried from one movement to the next by LabVIEW shift registers in the robot2D VI.
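The rotate-then-undo bookkeeping can be mimicked in a few lines of Python. The `RotationTracker` class below is a hypothetical stand-in: its `pending_ticks` attribute plays the role of the LabVIEW shift register by carrying the accumulated rotational ticks from one command to the next, and `TICKS_PER_DEGREE = 2` is the approximate calibration quoted above. The sign convention (positive degrees drive the right wheel forward and the left wheel backward) is an assumption.

```python
TICKS_PER_DEGREE = 2  # approximate calibration from the text


class RotationTracker:
    """Remembers how far the robot has rotated off the x axis between commands."""

    def __init__(self) -> None:
        # Net rotational ticks applied so far (right wheel forward = positive).
        # This persistent state is what the shift register provides in LabVIEW.
        self.pending_ticks = 0

    def rotate(self, degrees: float) -> tuple[int, int]:
        """Return (left, right) tick deltas for an in-place rotation and record it."""
        ticks = round(degrees * TICKS_PER_DEGREE)
        self.pending_ticks += ticks
        # Equal and opposite wheel motion spins the robot in place.
        return -ticks, ticks

    def undo(self) -> tuple[int, int]:
        """Return the (left, right) tick deltas that bring the robot back onto the x axis."""
        ticks = self.pending_ticks
        self.pending_ticks = 0
        return ticks, -ticks
```

For example, rotating by 45 degrees and then by -30 degrees leaves `pending_ticks` at 30, so `undo()` returns `(30, -30)` and the robot is back on the x axis.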

In principle, Y-axis motion uses the rotation sequence to turn the robot 90 degrees and then drive straight forward, so that it moves in Y instead of X. The final-angle rotation is then done by only partially undoing this 90-degree turn rather than returning all the way to the x axis. Although this works in simulation, Y-axis movement is not currently enabled, because the current hardware is wired to the amplifier and is not capable of y-axis motion. The robot2D code will therefore need modification when the new wireless hardware is ready.

The movements (including Y-direction movement) take place in this sequence: 1) any rotation off the x axis is undone; 2) the robots move along the x axis; 3) the robots rotate by 90 degrees to face along the y axis; 4) the robots move along the y axis; and 5) the robots rotate to the final angle. The current version of the sequence, which omits Y movement, includes only steps 1, 2, and 5.
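The five-step ordering can be expressed as a small planner that emits movement steps in order. This is an illustrative sketch only: the `plan_steps` function, the step names, and the `y_enabled` flag are hypothetical, distances are in generic units, and headings are assumed to be in degrees from the +x axis. With `y_enabled=False` it reproduces the current behaviour of steps 1, 2, and 5.

```python
def wrap_angle(deg: float) -> float:
    """Wrap an angle into (-180, 180] so no single rotation exceeds 180 degrees."""
    wrapped = deg % 360.0
    return wrapped - 360.0 if wrapped > 180.0 else wrapped


def plan_steps(current_heading: float, dx: float, dy: float,
               final_heading: float, y_enabled: bool = False) -> list[tuple[str, float]]:
    """Return the ordered (step, amount) pairs for one movement command.

    dy is ignored unless y_enabled is True, matching the current hardware.
    """
    steps: list[tuple[str, float]] = []
    # Step 1: undo any rotation off the x axis.
    if current_heading != 0:
        steps.append(("rotate", -current_heading))
    # Step 2: move along the x axis.
    if dx != 0:
        steps.append(("move", dx))
    heading = 0.0
    if y_enabled and dy != 0:
        # Step 3: rotate 90 degrees to face along the y axis.
        steps.append(("rotate", 90.0))
        heading = 90.0
        # Step 4: drive straight; a negative dy means driving backward.
        steps.append(("move", dy))
    # Step 5: rotate to the final angle relative to the current heading,
    # instead of first returning to the x axis.
    final_turn = wrap_angle(final_heading - heading)
    if final_turn != 0:
        steps.append(("rotate", final_turn))
    return steps


if __name__ == "__main__":
    print(plan_steps(30.0, 15.0, 0.0, -170.0))
    print(plan_steps(0.0, 5.0, 8.0, 45.0, y_enabled=True))
```

With Y movement enabled, a final angle of 45 degrees becomes a -45-degree turn from the 90-degree heading, which is the partial undo of the 90-degree rotation described earlier.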

The only other effect to account for is the power applied by the motors during movement. Rotation uses less motor power than lateral motion, because rotations tended to make the wheels slip. This reduced rotation power was built into the movement sequence.
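Selecting a lower power for rotation steps could look like the sketch below. The power values are hypothetical placeholders expressed as a percentage of full motor power (the page gives no numbers), and the `motor_power` function is an illustrative name; real values would need tuning on the robots to avoid slip.

```python
# Hypothetical power levels, as a percentage of full motor power.
# Rotation uses less power than straight-line motion to reduce wheel slip.
POWER_BY_STEP = {
    "move": 75,
    "rotate": 40,
}


def motor_power(step: str) -> int:
    """Return the motor power to use for a given step type."""
    try:
        return POWER_BY_STEP[step]
    except KeyError:
        raise ValueError(f"unknown step type: {step!r}") from None


if __name__ == "__main__":
    for step in ("move", "rotate"):
        print(step, motor_power(step))
```

Keeping the levels in one table makes the rotation/translation trade-off easy to retune without touching the movement logic.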

An image of the sequence structure LabVIEW VI is shown below.

[[File:Robot2d.PNG|1244px|center]]