Pupil tracking

Latest revision as of 20:30, 18 December 2012


Background

This research was conducted by Tommy Powers and Cameron Fleming during the spring and fall semesters of 2012 at Washington University in Saint Louis, as part of the Undergraduate Research Program in the Preston M. Green Department of Electrical and Systems Engineering. The project was overseen by Dr. Arye Nehorai, Ed Richter, and Phani Chavali.

Project Overview

The goal of this pupil tracking project is to provide a method of communication using eye movement for people with Amyotrophic Lateral Sclerosis (ALS) who are unable to control voluntary muscle movement in their limbs. The project will be developed in two main phases:

- Develop an algorithm to track the movement of the pupils.

- Develop hardware to capture the movement of the eyes so that it can then be processed.

Our work focused on the first phase: constructing a robust pupil-finding algorithm. We tried several ways of tracking the pupils, and this report focuses on the most robust of them.

Eye Tracking Steps

The eye tracking algorithm consists of the following steps:

- Eye Region Extraction

- Homomorphic Filtering

- Eye Tracking

- Kalman Filtering

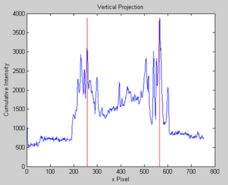

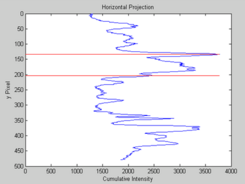

1. Eye Region Extraction

This stage uses the horizontal and vertical projections of the image gradient (sums of the gradient along each row and along each column, respectively) to locate the largest changes in intensity. These changes correspond to edges in the image.
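The sketch below computes both projections in pure Python to illustrate the idea. It is hypothetical in several ways that do not come from the original implementation: the input is a grayscale image stored as a list of rows, the gradient uses simple forward differences, and the function name `gradient_projections` is invented. The horizontal projection has one value per row and serves to find the top and bottom of the region. The vertical projection has one value per column and serves to find the left and right.

```python
def gradient_projections(image):
    """Return (horizontal, vertical) gradient projections of a grayscale image.

    image: list of rows of pixel intensities (assumed input format).
    horizontal[y]: sum over the row of |I(x, y+1) - I(x, y)| (responds to horizontal edges)
    vertical[x]:   sum over the column of |I(x+1, y) - I(x, y)| (responds to vertical edges)
    """
    rows, cols = len(image), len(image[0])

    # Vertical intensity change, summed along each row: one value per row
    horizontal = [0.0] * rows
    for y in range(rows - 1):
        horizontal[y] = sum(abs(image[y + 1][x] - image[y][x]) for x in range(cols))

    # Horizontal intensity change, summed down each column: one value per column
    vertical = [0.0] * cols
    for x in range(cols - 1):
        vertical[x] = sum(abs(image[y][x + 1] - image[y][x]) for y in range(rows))

    return horizontal, vertical
```

Consider an image whose top half is dark and whose bottom half is bright. Its horizontal projection has a single peak at the row where the brightness jumps, and its vertical projection is all zeros.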

Horizontal Projection

- Goal: Use peaks near the eyebrows and the bottom of the eye to define the top and bottom of the region.

- Issues: The hairline and the lips often produce large peaks as well, so they must be excluded when choosing the top and bottom of the region.

Vertical Projection

- Goal: Find the peaks near the edges of the face to define the left and right boundaries of the region.

- Issues: This projection is more consistent, but requiring the two peaks to be at least a minimum distance apart makes it more reliable still.
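Each projection ultimately reduces to picking two well-separated peaks. The greedy sketch below is one way to do that. It assumes a search window `[lo, hi)` that keeps the hairline and lip rows out of consideration, plus a minimum index gap `min_separation`. Both parameters and the name `two_peaks` are hypothetical. The function takes the largest value, then the largest remaining value that lies far enough away from it.

```python
def two_peaks(projection, min_separation, lo=0, hi=None):
    """Greedily pick two large, well-separated entries of a projection.

    Returns (i, j) with i < j, or None if no pair satisfies the separation.
    lo, hi: index window to search (e.g. to skip hairline or lip rows).
    """
    hi = len(projection) if hi is None else hi
    # Candidate indices in the window, strongest first
    order = sorted(range(lo, hi), key=lambda i: projection[i], reverse=True)
    if not order:
        return None
    first = order[0]
    # The second boundary is the strongest index at least min_separation away
    for second in order[1:]:
        if abs(second - first) >= min_separation:
            return tuple(sorted((first, second)))
    return None
```

For example, `two_peaks([0, 9, 8, 1, 0, 7, 0], 3)` returns `(1, 5)`. Index 2 is rejected even though it holds the second-largest value, because it sits right next to the strongest one.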

2. Homomorphic Filtering

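Homomorphic filtering simultaneously normalizes the brightness of an image and increases its contrast. It does so by applying a high-pass filter to the natural logarithm of the image. If the image is modeled as the product of a slowly varying illumination component and a reflectance component, the logarithm turns that product into a sum. The low-frequency illumination can then be attenuated in the frequency domain while the high-frequency detail is kept.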

$$\text{Image}(x,y) \rightarrow \ln \rightarrow \text{FFT} \rightarrow H(u,v) \rightarrow \text{FFT}^{-1} \rightarrow \exp \rightarrow \text{ImageOut}(x,y)$$
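The source does not say which filter H(u,v) was used. A common choice, assumed here, is the Gaussian high-frequency-emphasis filter applied to the log image. D(u,v) is the distance from the zero-frequency point, D_0 is a cutoff, and c controls how sharply the filter rises. Setting γ_L < 1 attenuates the low-frequency (illumination) content, and γ_H > 1 boosts the high-frequency (reflectance) detail:

$$
f(x,y) = i(x,y)\,r(x,y) \;\Rightarrow\; \ln f(x,y) = \ln i(x,y) + \ln r(x,y)
$$

$$
H(u,v) = (\gamma_H - \gamma_L)\left[1 - e^{-c\,D^2(u,v)/D_0^2}\right] + \gamma_L, \qquad \gamma_L < 1 < \gamma_H
$$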

In eye tracking, the homomorphic filter separates the useful content of the image from artifacts caused by non-uniform lighting. Suppressing these artifacts leaves a clearer picture of the eye region.
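The pure-Python sketch below follows the ln, FFT, H(u,v), inverse FFT, exp pipeline. It illustrates the technique under stated assumptions and is not the project's code. Three choices are assumptions:

- It uses a naive separable DFT so that it runs on the standard library alone.
- It adds 1 before the logarithm (`log1p`, undone by `expm1`) to avoid `ln 0` on black pixels.
- It uses a Gaussian high-emphasis filter whose parameters (`gamma_low`, `gamma_high`, `c`, `d0`) are hypothetical defaults.

```python
import cmath
import math


def dft2(grid, inverse=False):
    """Naive separable 2-D DFT (O(N^3)); adequate only for small illustrative images."""
    sign = 1 if inverse else -1
    rows, cols = len(grid), len(grid[0])

    def dft1(seq):
        n = len(seq)
        return [sum(seq[k] * cmath.exp(sign * 2j * math.pi * k * m / n) for k in range(n))
                for m in range(n)]

    # Transform every row, then every column of the result
    tmp = [dft1(row) for row in grid]
    col_t = [dft1([tmp[r][c] for r in range(rows)]) for c in range(cols)]
    out = [[col_t[c][r] for c in range(cols)] for r in range(rows)]
    if inverse:
        out = [[z / (rows * cols) for z in row] for row in out]
    return out


def homomorphic_filter(image, gamma_low=0.5, gamma_high=2.0, c=1.0, d0=2.0):
    """Homomorphic filtering of a grayscale image given as a list of rows."""
    rows, cols = len(image), len(image[0])
    # ln stage (log1p avoids log(0) for black pixels)
    log_img = [[math.log1p(p) for p in row] for row in image]
    spectrum = dft2(log_img)
    for u in range(rows):
        for v in range(cols):
            # Distance from zero frequency, accounting for wrap-around of the unshifted DFT
            du, dv = min(u, rows - u), min(v, cols - v)
            d2 = du * du + dv * dv
            h = (gamma_high - gamma_low) * (1 - math.exp(-c * d2 / d0 ** 2)) + gamma_low
            spectrum[u][v] *= h
    filtered = dft2(spectrum, inverse=True)
    # exp stage; H is real and symmetric, so the imaginary part is only rounding noise
    return [[math.expm1(z.real) for z in row] for row in filtered]
```

On a constant image only the zero-frequency term survives, and it is scaled by `gamma_low`. Every output pixel therefore equals `expm1(gamma_low * log1p(value))`. A practical version would replace `dft2` with an FFT routine such as `numpy.fft.fft2` for speed.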

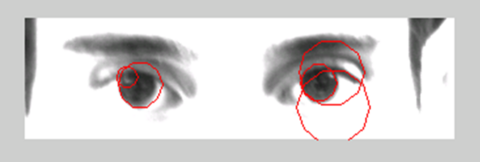

3. Eye Tracking

The eye tracking stage has two steps: the Hough transform and the eye verifier. These steps are performed every three frames so that the Kalman filtering that follows continues to track the eye movement correctly.
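The text does not spell out what happens on the frames between detections. One plausible reading, assumed here, is that the Kalman filter predicts on every frame and is corrected only on frames where the Hough transform and eye verifier run. The hypothetical driver below makes that schedule explicit. The callables `detect`, `predict`, and `update` are placeholders, not functions from the original project.

```python
def run_tracker(frames, detect, predict, update, period=3):
    """Predict every frame; run detection (and the Kalman update) every `period` frames.

    detect(frame) -> measurement or None   (Hough transform + eye verifier)
    predict()     -> predicted state       (Kalman prediction step)
    update(z)     -> corrected state       (Kalman update step)
    """
    estimates = []
    for index, frame in enumerate(frames):
        state = predict()
        if index % period == 0:
            measurement = detect(frame)
            # The verifier may reject every candidate; then the prediction stands
            if measurement is not None:
                state = update(measurement)
        estimates.append(state)
    return estimates
```

With `period=3`, detection runs on frames 0, 3, and 6 of a seven-frame clip. The remaining frames keep the predicted state.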

Hough Transform

- The Hough transform detects simple parametric shapes in an image, typically lines or circles. Each edge point votes for the shape parameters that could have produced it.

- In the eye detection problem, the Hough transform searches the extracted and filtered region for circles. These circles are the candidate pupils.
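A minimal circular Hough transform sketch in pure Python follows. It assumes that an edge detector has already produced a set of `(x, y)` edge pixels and that a small range of pupil radii is known. The function name, the radius range, and the angular resolution are hypothetical. Each edge point votes once for every centre lying at distance `r` from it, and the most-voted `(a, b, r)` triples are the circle candidates.

```python
import math
from collections import Counter


def hough_circles(edge_points, radii, angle_steps=360, top_k=5):
    """Return the top_k circle candidates as (votes, (a, b, r)), most votes first.

    edge_points: iterable of (x, y) edge-pixel coordinates.
    radii: candidate pupil radii in pixels.
    """
    points = set(edge_points)
    trig = [(math.cos(2 * math.pi * k / angle_steps),
             math.sin(2 * math.pi * k / angle_steps)) for k in range(angle_steps)]
    accumulator = Counter()
    for x, y in points:
        for r in radii:
            # Centres at distance r from this point; the set gives one vote per centre
            centres = {(round(x - r * c), round(y - r * s)) for c, s in trig}
            for a, b in centres:
                accumulator[(a, b, r)] += 1
    return [(votes, circle) for circle, votes in accumulator.most_common(top_k)]
```

Suppose the edge points lie on a circle of radius 5 centred at (20, 15) and radii 4 to 6 are searched. The best candidate should then have radius 5 and a centre at, or within a pixel of, (20, 15). Libraries such as OpenCV provide optimized versions (`cv2.HoughCircles`).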

Eye Verifier

- After the circles are located, an eye verifier checks every detected circle. It selects the two whose sizes and distance from one another match the characteristics of a pair of eyes.

- Once the eyes are verified, we use their locations as the measurements for the Kalman filtering stage.
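The source names the verifier's criteria (size and mutual distance) but not its thresholds or scoring. The sketch below is therefore a hypothetical scheme. It keeps pairs whose radii are within a ratio `max_radius_ratio` of each other and whose centres lie between `min_gap` and `max_gap` pixels apart. Among those pairs it chooses the one with the most combined Hough votes. Candidates use the `(votes, (x, y, r))` format of the Hough sketch.

```python
import math


def verify_eyes(circles, min_gap, max_gap, max_radius_ratio=1.3):
    """Choose the pair of circles most likely to be the two pupils.

    circles: list of (votes, (x, y, r)) candidates.
    min_gap, max_gap: allowed centre-to-centre distance in pixels (hypothetical bounds).
    max_radius_ratio: largest allowed ratio between the two radii (hypothetical).
    Returns ((x1, y1), (x2, y2)) sorted left to right, or None.
    """
    best_pair, best_score = None, -1
    for i in range(len(circles)):
        for j in range(i + 1, len(circles)):
            (v1, (x1, y1, r1)), (v2, (x2, y2, r2)) = circles[i], circles[j]
            gap = math.hypot(x2 - x1, y2 - y1)
            similar_size = max(r1, r2) <= max_radius_ratio * min(r1, r2)
            # Keep the plausible pair with the strongest combined evidence
            if similar_size and min_gap <= gap <= max_gap and v1 + v2 > best_score:
                best_pair, best_score = ((x1, y1), (x2, y2)), v1 + v2
    return None if best_pair is None else tuple(sorted(best_pair))
```

Consider four candidates with `min_gap=20` and `max_gap=60`: two equal-sized circles 40 pixels apart, a large circle overlapping one of them, and a distant small circle. The function returns the two equal-sized circles, because every other pairing fails the size or distance test or scores fewer votes.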

4. Kalman Filtering

Kalman filtering estimates the state of a dynamical system at each frame from the measurements obtained up to that time. Each estimate is computed in two stages:

- Prediction: We predict the locations of the eyes from the previous estimate and the state transition model.

- Update: We use the current measurement to refine the predicted state.
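These two stages correspond to the standard linear Kalman filter equations below. Here z_k is the verified eye location at frame k. The source does not give F, H, Q, or R. A common assumption is a constant-velocity model with state (p_x, p_y, v_x, v_y), in which only the position is measured:

$$
\begin{aligned}
&\text{Prediction:} && \hat{\mathbf{x}}_{k|k-1} = F\,\hat{\mathbf{x}}_{k-1|k-1}, \qquad P_{k|k-1} = F\,P_{k-1|k-1}F^{\top} + Q \\
&\text{Update:} && K_k = P_{k|k-1}H^{\top}\left(H\,P_{k|k-1}H^{\top} + R\right)^{-1} \\
& && \hat{\mathbf{x}}_{k|k} = \hat{\mathbf{x}}_{k|k-1} + K_k\left(\mathbf{z}_k - H\,\hat{\mathbf{x}}_{k|k-1}\right), \qquad P_{k|k} = \left(I - K_k H\right)P_{k|k-1}
\end{aligned}
$$

$$
F = \begin{bmatrix} I_2 & \Delta t\, I_2 \\ 0 & I_2 \end{bmatrix}, \qquad H = \begin{bmatrix} I_2 & 0 \end{bmatrix}
$$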

The Kalman filter's estimates are generally more precise than the individual measurements. Through the previous estimate, they combine the current measurement with all past measurements.
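The following minimal pure-Python sketch implements both stages for a single image coordinate. It assumes a constant-velocity model, so each eye would use one filter for x and one for y; that decoupling is itself an assumption. The process-noise intensity `q`, the measurement variance `r`, the initial covariance `p0`, and the class name are hypothetical choices. On frames without a verified measurement, calling `predict()` alone lets the filter coast.

```python
class ConstantVelocityKalman1D:
    """Kalman filter for one coordinate with state [position, velocity]."""

    def __init__(self, position=0.0, dt=1.0, q=1e-2, r=1.0, p0=1e3):
        self.x = [position, 0.0]                # state estimate
        self.P = [[p0, 0.0], [0.0, p0]]         # state covariance
        self.dt, self.q, self.r = dt, q, r

    def predict(self):
        dt, (p, v), P, q = self.dt, self.x, self.P, self.q
        # x <- F x with F = [[1, dt], [0, 1]]
        self.x = [p + dt * v, v]
        # P <- F P F^T + Q, with Q from a white-noise-acceleration model
        p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1]
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1]
        self.P = [[p00 + q * dt ** 3 / 3, p01 + q * dt ** 2 / 2],
                  [p10 + q * dt ** 2 / 2, p11 + q * dt]]
        return self.x[0]

    def update(self, z):
        P = self.P
        # Measurement model H = [1, 0]: innovation and its variance
        innovation = z - self.x[0]
        s = P[0][0] + self.r
        k0, k1 = P[0][0] / s, P[1][0] / s       # Kalman gain K = P H^T / s
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
        return self.x[0]
```

As a check, feed the filter the positions of a point moving 2 pixels per frame. The position estimate converges to the true track and the velocity estimate converges to about 2. A following `predict()` call then extrapolates one frame ahead.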

Results

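Original Image

![Original image](Eye1.png)

Projections

![Vertical projection](vert_proj.png) ![Horizontal projection](horix_proj.png)

Eye Region Extraction

![Eye region extraction](Eye2.png)

Homomorphic Filtering

![Homomorphic filtering](Eye3.png)

Hough Transform

![Hough transform](Eye4.png)

Eye Verifier

![Eye verifier](Eye5.png)

Kalman Filter

![Kalman filter](Eye6.png)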

Conclusions and Future Work

We developed a robust pupil tracking algorithm that can be used in a variety of applications. It runs in real time at 15 frames per second. In future work, we plan to speed it up to 30 frames per second, improve its accuracy, and build the necessary hardware.

Acknowledgements

We would like to thank Arye Nehorai and Ed Richter for their efforts in making the Undergraduate Research Program accessible to so many students at Washington University in Saint Louis.

We would also like to thank Phani Chavali, our mentor, for guiding us and helping us complete this project.