Difference between revisions of "EyeTracking ImageProcessing"

Latest revision as of 17:33, 19 December 2011

In this part we used several methods that helped us overcome the challenges we faced during the project. The techniques we used are:

Discarding Color information: We convert every frame to its corresponding grayscale image. To do this, we average the pixel values across the three color channels.
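The channel averaging described above can be sketched in a few lines. This is a minimal illustration, not the project's actual code: it assumes each frame is a NumPy array of shape height x width x 3, and the function name `to_grayscale` is hypothetical.

```python
import numpy as np

def to_grayscale(frame):
    """Average the three color channels of an H x W x 3 frame.

    The array layout and the function name are assumptions made
    for this sketch; they do not come from the original project.
    """
    # Convert to float first so the mean is not truncated to integers.
    return frame.astype(np.float64).mean(axis=2)

# Tiny example frame: one colored pixel, the rest black.
frame = np.zeros((2, 2, 3), dtype=np.uint8)
frame[0, 0] = (30, 60, 90)
gray = to_grayscale(frame)
print(gray.shape)   # (2, 2)
print(gray[0, 0])   # 60.0
```

A plain average weights all three channels equally, which matches the description above; perceptually weighted conversions are a different technique.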

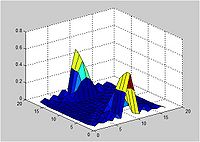

Low pass filtering:

We use low-pass filtering to smooth the sharp edges in each image. This also helps to suppress the undesired background light in the image.
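The text does not say which low-pass filter was used. As one possible illustration, the sketch below applies a simple box (mean) filter; the filter type, the 5 x 5 kernel size, the edge-replicating padding, and the name `box_filter` are all assumptions made for this example.

```python
import numpy as np

def box_filter(gray, size=5):
    """Smooth a 2-D grayscale image with a size x size mean filter.

    Assumes `size` is odd. The box kernel is an illustrative choice;
    the original text does not name the filter that was used.
    """
    pad = size // 2
    # Replicate border pixels so the output keeps the input shape.
    padded = np.pad(gray.astype(np.float64), pad, mode="edge")
    h, w = gray.shape
    out = np.zeros((h, w), dtype=np.float64)
    # Sum the shifted copies of the image, one per kernel tap.
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (size * size)

# A single bright pixel is spread over its 5 x 5 neighborhood.
impulse = np.zeros((7, 7))
impulse[3, 3] = 25.0
smoothed = box_filter(impulse, size=5)
print(smoothed[3, 3])  # 1.0
```

A Gaussian kernel would be an equally plausible choice; the box filter is used here only because it is the shortest to write out.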

Scaling: We scale down the filtered images to obtain lower-resolution images. This serves two purposes. First, since the image dimensions decrease, scaling reduces the processing time. Second, the averaging effect suppresses the undesired background light.
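One way to scale down with an averaging effect is to replace each block of pixels by its mean. The sketch below does this with NumPy; the scale factor of 2 and the name `downscale` are assumptions, since the text does not state the factor that was used.

```python
import numpy as np

def downscale(gray, factor=2):
    """Shrink a 2-D image by averaging non-overlapping factor x factor blocks.

    The factor is a hypothetical parameter for this sketch. Rows and
    columns that do not fill a complete block are cropped away.
    """
    h, w = gray.shape
    h2, w2 = h // factor, w // factor
    cropped = gray[:h2 * factor, :w2 * factor].astype(np.float64)
    # Split each axis into (block index, offset inside block) and
    # average over the two offset axes.
    return cropped.reshape(h2, factor, w2, factor).mean(axis=(1, 3))

image = np.arange(16).reshape(4, 4)
small = downscale(image, factor=2)
print(small)
# [[ 2.5  4.5]
#  [10.5 12.5]]
```

Halving each dimension leaves a quarter of the pixels, so any later per-pixel search has roughly a quarter of the positions to visit.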

Template Matching: We used a template matching algorithm to segment the darkest region of the image. After the color information is discarded and the image is low-pass filtered, the pupil corresponds to the darkest spot in the eye, so we used a small patch of dark pixels as the template. The matching is done by exhaustive search over the entire image. Once a match is found, the centroid of the matched block is taken as the pupil location. For the experiments, we used a block size of 5 x 5 pixels.
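The exhaustive search can be sketched as follows. The text does not specify the similarity measure, so this example assumes the sum of squared differences (SSD) and an all-zero 5 x 5 template standing in for the "patch of dark pixels"; the name `find_pupil` is hypothetical.

```python
import numpy as np

def find_pupil(gray, template):
    """Exhaustive template search; returns the (row, col) block centroid.

    Uses the sum of squared differences as the match score, which is
    an assumption: the original text does not name the measure.
    """
    img = gray.astype(np.float64)
    tpl = template.astype(np.float64)
    th, tw = tpl.shape
    h, w = img.shape
    best_score, best_pos = np.inf, None
    # Slide the template over every position where it fits entirely.
    for y in range(h - th + 1):
        for x in range(w - tw + 1):
            score = np.sum((img[y:y + th, x:x + tw] - tpl) ** 2)
            if score < best_score:
                best_score, best_pos = score, (y, x)
    y, x = best_pos
    # Centroid of the best-matching block.
    return (y + th // 2, x + tw // 2)

# Synthetic frame: bright background with one dark 5 x 5 "pupil".
frame = np.full((20, 30), 200.0)
frame[8:13, 12:17] = 10.0
template = np.zeros((5, 5))   # assumed dark template
print(find_pupil(frame, template))  # (10, 14)
```

The double loop visits every valid position, which is what makes the search expensive on full-size frames.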

Determining the search space: Because an exhaustive search over the entire image is computationally intensive, we propose an adaptive search method. With this method, we choose the search space based on the pupil location in the previous frame. Using this past information, we were able to greatly reduce the complexity of the search. We used a search space of 75 x 75 pixels around the pupil location from the last frame.
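A sketch of the adaptive search, under stated assumptions: the match score is again the sum of squared differences, the window is clipped at the image borders, and the names `match_in_region` and `track_pupil` are hypothetical. None of these details are given in the text beyond the 75 x 75 window and the 5 x 5 block.

```python
import numpy as np

def match_in_region(gray, template, y0, y1, x0, x1):
    """Exhaustive SSD search restricted to rows y0:y1 and columns x0:x1."""
    region = gray[y0:y1, x0:x1].astype(np.float64)
    tpl = template.astype(np.float64)
    th, tw = tpl.shape
    best_score, best_pos = np.inf, None
    for y in range(region.shape[0] - th + 1):
        for x in range(region.shape[1] - tw + 1):
            score = np.sum((region[y:y + th, x:x + tw] - tpl) ** 2)
            if score < best_score:
                best_score, best_pos = score, (y, x)
    if best_pos is None:
        return None  # region smaller than the template
    # Convert back to full-image coordinates and take the block centroid.
    return (y0 + best_pos[0] + th // 2, x0 + best_pos[1] + tw // 2)

def track_pupil(gray, template, last_pos, window=75):
    """Search only a window x window area centered on the last pupil location."""
    h, w = gray.shape
    half = window // 2
    cy, cx = last_pos
    # Clip the window to the image borders (an assumed policy).
    y0, y1 = max(0, cy - half), min(h, cy + half + 1)
    x0, x1 = max(0, cx - half), min(w, cx + half + 1)
    return match_in_region(gray, template, y0, y1, x0, x1)

frame = np.full((200, 200), 200.0)
frame[100:105, 110:115] = 10.0            # pupil in the current frame
template = np.zeros((5, 5))               # assumed dark template
print(track_pupil(frame, template, last_pos=(95, 105)))  # (102, 112)
```

With a 5 x 5 template, a 75 x 75 window contains 71 x 71 = 5041 candidate positions regardless of the frame size, whereas the full search grows with the number of pixels in the frame.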