| (40 intermediate revisions by the same user not shown) |

| Line 1: |

Line 1: |

| | [[File:IMG_1248.jpg|400px]] | | [[File:IMG_1248.jpg|400px]] |

| | | | |

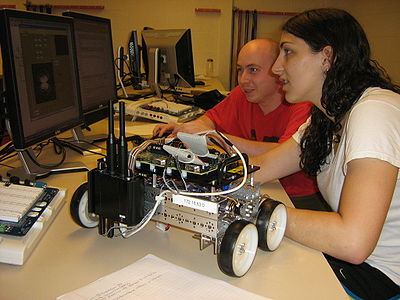

| − | ESE297 - Introduction to Undergraduate Research was created for students who wish to do Undergraduate Research projects in [[media:Robotic_Sensing_V4.pdf|Robotic Sensing]] under [http://ese.wustl.edu/people/Pages/faculty-bio.aspx?faculty=11 Professor Nehorai], the ESE Department Chair. This course is offered as ESE297 for 2 credits and is typically offered in the spring and summer. Students will learn how to implement sensor array signal processing algorithms on the [[LabVIEW for Robotics|LabVIEW for Robotics Starter Kit robots]] shown above using both Matlab and LabVIEW and develop Brain Computer Interface (BCI) algorithms using EEG signals. Students can then apply this knowledge to individual research projects in Robotic Sensing in subsequent semesters. ESE297 does not qualify as an EE electvie. | + | ESE297 - Introduction to Undergraduate Research was created for students who wish to do Undergraduate Research projects in [[media:Robotic_Sensing_V4.pdf|Robotic Sensing]] under [http://ese.wustl.edu/people/Pages/faculty-bio.aspx?faculty=11 Professor Nehorai], the ESE Department Chair. This course is offered as ESE297 for 2 credits and is typically offered in the spring and summer. Students will learn how to implement sensor array signal processing algorithms on the [[LabVIEW for Robotics|LabVIEW for Robotics Starter Kit robots]] shown above using both Matlab and LabVIEW and develop Brain Computer Interface (BCI) algorithms using EEG signals. Students can then apply this knowledge to individual research projects in Robotic Sensing in subsequent semesters. ESE297 does not qualify as an EE elective. |

| | == Logistics == | | == Logistics == |

| − | * '''Meeting Time''': Tues-Thursday, 1-4 pm in Bryan 316 from 5/24 - 7/16 | + | * '''Meeting Time''': Fri, 1:30-5:30 in Bryan 316 |

| − | * '''Holidays''': 5/28, 7/4 | + | * '''Holidays''': Fall Break, Thanksgiving |

| − | * '''Instructor''': Ed Richter | + | * '''Instructor''': Ed Richter, Bryan 201E |

| − | * '''[[media:Syllabus-sp12.pdf|Syllabus]]''' | + | * '''T/A''': Stephen Gower (sgower@wustl.edu) |

| − | * '''Expectations''': The work load is estimated to be 10 hours/week. In the summer, the expectation is 20 hours/week. That is, students who earn an A will spend many unsupervised hours outside of the class meeting times. Grading is based on your Homework and your Projects. Late work will be accepted with a penalty. Please see the syllabus for due dates. | + | * '''Office Hours''': Mon,Tues 2:30-4 (Ed), Thurs 8-10pm (Steve) |

| | + | * '''[[media:Syllabus-FL15.pdf |Syllabus]]''' |

| | + | * '''Expectations''': The work load is estimated to be 10 hours/week if you take it during the Fall or Spring semesters (20 hours/week for a summer semester). That is, students who earn an A will spend many unsupervised hours outside of the class meeting times. Grading is based on your Homework and your Projects. Late work will be accepted with a penalty of 3 points per day. Please see the syllabus for due dates. |

| | | | |

| | + | = Announcements = |

| | + | * Matlab available for Students now! Send email to support@seas.wustl.edu |

| | == Lecture Notes == | | == Lecture Notes == |

| − | * Topic 1: [[media:Presentation_Robotic_Microphone_Array.pdf|Acoustic Source Location Background and Theory]] (Slides 1-19) | + | * [[Accostic Source Location|Acoustic Source Location]] |

| − | ** Additional references:

| + | * [[Data Acquisition Basics]] |

| − | ***[http://ese.wustl.edu/ContentFiles/Research/UndergraduateResearch/CompletedProjects/WebPages/fl08/JoshuaYork/index.html Joshua York, Acoustic Source Localization, ESE497, Fall 2008]

| + | * [[Signal Processing Basics]] |

| − | ***[http://ese.wustl.edu/ContentFiles/Research/UndergraduateResearch/CompletedProjects/WebPages/fl09/rms3/index.htm Raphael Schwartz and Zachary Knudsen, Robotic Microphone Sensing: Data Processing Architectures for Real-Time, Acoustic Source Position Estimation, ESE497, Fall 2009]

| + | * [[Brain Computer Interface (BCI)]], [[media:BCI2000.zip|BCI2000.zip]] |

| − | ** Homework 1: Read the material that we discussed in our meeting today and the additional references listed above.

| |

| − | ** Homework 2: Using this [[media:MicrophoneArrayWithRotation.JPG|figure]], derive the general equations for the source location (x*,y*), i.e., the intersection of the 2 lines, including the rotation of both microphone pairs. Verify that the formula on slide 10 of the lecture notes is correct for the special case where

| |

| − | ***y1 = y2 = 0

| |

| − | ***Rotation1 and Rotation2 = 0

| |

| − | ***X1=P/2

| |

| − | ***X2 = -P/2

| |

| − | * Topic 2: Data Acquisition Basics

| |

| − | ** [[media:LabVIEW_Introduction.pdf|LabVIEW Tutorial]]

| |

| − | *** Code up examples in LabVIEW for slides 11, 14, 27, 31, 36, 38, 41. Put each one in a separate VI and demo to me or T/A.

| |

| − | *** Configure LabVIEW options as shown in slides 15-17

| |

| − | *** Exercises 1,2,3

| |

| − | ** Homework 3 - Finish Exercises

| |

| − | ** Assign Project1 - Simulation

| |

| − | ** Homework 4 - Finish ComputeAngle.vi (in Project1 -> RoboticSensing.zip -> micSourceLocator.lvproj -> My Computer -> ComputeAngle.vi) and ComputeIntersection.vi

| |

| − | ** Additional Resources

| |

| − | *** Conditionally append values to an array in a loop

| |

| − | *** [http://cnx.org/content/m12220/latest/ How to Create an Array on the Front Panel]

| |

| − | *** [http://zone.ni.com/devzone/cda/tut/p/id/7521 LabVIEW tutorial], [http://www.ni.com/academic/students/learnlabview LabVIEW 101]

| |

| − | ** [[media:Data_Acquisition_Basics.pdf|Data Acquisition Basics]]

| |

| − | *** Homework 5 - Finish exercise | |

| − | *** Homework 6 - Connect wires from AO0 and AO1 to AI0+ and AI1+ (remove the wire from Banana A to AI0+). Make sure that the Prototyping Power is on. Modify your vi from Homework 5 to collect samples from both AI0 and AI1. Then open [[media:DelayedChirp2DAC.zip|DelayedChirp2DAC.vi]] and run this vi. You shouldn't modify DelayedChirp2DAC.vi. Run your modified Homework 5 vi and zoom in on the time and frequency domains to examine the waveforms in detail. Describe in detail what you see. Measure the difference in time between the two channels. Hint: Start and stop your Data Acquisition vi until the entire signal is in the middle of the buffer.

| |

| − | **[[media:CrossCorrelation.pdf| Cross Correlation]]

| |

| − | *** Homework 7

| |

| − | ****Plot the Cross Correlation of the 2 channels from Homework 6 and check whether the peak is shifted from the middle by the number of samples you measured in the previous step.

| |

| − | ***** Hints:

| |

| − | ****** Functions -> Express -> Signal Analysis -> Conv & Corr -> Cross Correlation

| |

| − | ****** This function requires that you extract the 2 channels from the DDT. To do this, use Functions -> Express -> Sig Manip -> From DDT -> Single Waveform -> Channel 0 and then again for Channel 1. Connect the outputs of these to the X and Y inputs.

| |

| − | ****** Before you plot the Cross Correlation, extract the 1D array of scalars using the From DDT so that the X-Axis is in samples.

| |

| − | ****** Look at the help on the Cross Correlation for details.

| |

| − | **** Plot the Spectrogram of Channel 0.

| |

| − | ***** Hint: There is a good Spectrogram example that ships with LabVIEW. Go to Help -> Find Examples... and search for STFT -> STFT Spectrogram Demo.vi. You can copy from this example and paste it into your code.

| |

| − | * Topic 3: Signal Processing Basics

| |

| − | **[[Media:SS_Tutorial_New.pdf|Tutorial]]

| |

| − | ***Homework 8- Finish exercise from tutorial.

| |

| − | ***Homework 9- Use the Signal Processing Palette in LabVIEW to generate 2 sinusoid waveforms (Signal Processing -> Waveform Generation -> Sine Waveform) with two different frequencies. Add these together and implement 2 separate filters for this signal (Functions -> Express -> Signal Analysis -> Filter) to extract the original sinusoids. Plot these outputs in the time domain. Also, plot them in the frequency domain (Express -> Signal Analysis -> Spectral). Make sure you can identify the frequencies corresponding to the input sinusoids in the frequency domain. Next, add (as in addition) Gaussian White Noise to the sum of the 2 sinusoids (Signal Processing -> Waveform Generation -> Gaussian White Noise). Plot the spectrum of the unfiltered signal and identify the frequencies corresponding to signal and noise again. Increase the standard deviation of the WGN and modify your filter to improve the quality of the filtered signal. Also, looking at the sum of the 2 sinusoids and the noise, what is the relationship between the standard deviation of the WGN and the amplitude of the noise? Plotting the histogram of the noise (Express -> Signal Analysis -> Histogram) might help. Note: If your graph X-axis is in absolute time instead of seconds, right click on the graph and select Properties -> Display Format -> X-Axis and set it to SI units.

| |

| − | **[[Media:DSP_ESE497.pdf|DSP Lecture by Dr. Jim Hahn]] (for reference)

| |

| − | **[[Media:DSPConfigurations.pdf|DSP Configurations Lecture by Dr. Jim Hahn]] (for reference)

| |

| − | * Topic 4: Brain Computer Interface (BCI)

| |

| − | **[http://www.bci2000.org/wiki/index.php/User_Tutorial:Performing_an_Offline_Analysis_of_EEG_Data Performing an Offline Analysis of EEG Data using BCI2000]

| |

| − | ***BCI2000 is installed at c:\BCI2000. The tools and data folders referenced in the tutorial are at c:\BCI2000\tools and c:\BCI2000\data

| |

| − | ***To launch BCIViewer, browse to tools\BCI2000Viewer and double click on BCI2000Viewer.exe. Pay particularly close attention to States StimulusBegin and StimulusCode.

| |

| − | ***To launch OfflineAnalysis, browse to tools\OfflineAnalysis and double click on OfflineAnalysis.bat

| |

| − | **[[media:IntroductionToSignalDetection.pdf|Introduction to Signal Detection/Classification]]

| |

| − | **[[media:BCIStimPresentation.pdf|How to collect EEG data using BCI2000 and the Emotiv headset]]

| |

| − | **[[media:Electroencephalography_(EEG)-Amanda.pdf|Introduction to EEG Physiology (reference)]]

| |

| − | <!--

| |

| − | From Jim Hahn

| |

| − | | |

| − | ***[[media:AssignmentForDSPLecture1.pdf|Homework 9]]

| |

| − | -->

| |

| − | | |

| − | | |

| − | <!--

| |

| − | From Phani

| |

| − | **[[Media:SS_Tutorial_New.pdf|Tutorial]]

| |

| − | ***Task 6- Finish exercise from tutorial.

| |

| − | ****[[Media:task1.zip|Solution]]

| |

| − | -->

| |

| | | | |
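The delay measurement in Homeworks 6 and 7 above can be sketched in a few lines of code. This is only an illustration under stated assumptions, not the course's LabVIEW solution: the function names `cross_correlation` and `estimate_delay` and the test pulse are hypothetical, and the LabVIEW Cross Correlation Express VI may index its output differently (see its help).

```python
def cross_correlation(x, y):
    """Return (lags, values) with values[k] = sum over n of x[n + lag] * y[n]."""
    n_x, n_y = len(x), len(y)
    lags = list(range(-(n_y - 1), n_x))
    values = []
    for lag in lags:
        total = 0.0
        for n in range(n_y):
            m = n + lag
            if 0 <= m < n_x:  # skip samples that fall outside x
                total += x[m] * y[n]
        values.append(total)
    return lags, values


def estimate_delay(x, y):
    """Lag in samples at which x best matches y; positive means x lags y."""
    lags, values = cross_correlation(x, y)
    best = max(range(len(values)), key=lambda i: values[i])
    return lags[best]


# Hypothetical test data: a short pulse, and the same pulse 5 samples later.
pulse = [0.0, 1.0, 2.0, 1.0, 0.0]
ch0 = pulse + [0.0] * 15
ch1 = [0.0] * 5 + pulse + [0.0] * 10
delay = estimate_delay(ch1, ch0)
```

Dividing `delay` by the sample rate of the acquisition converts the shift from samples to seconds.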

| | == Projects == | | == Projects == |

| − | * [[Project1:_Implement_algorithm_using_microphone_array]] | + | * [[Project1:_Implement_algorithm_using_microphone_array| Project1: Implement algorithm using microphone array]] |

| − | * [[Project2:_Triangulation_with_sbRIO_robots]] | + | * [[Project2:_Triangulation_with_sbRIO_robots|Project2: Triangulation with sbRIO robots]] |

| − | * Project3: BCI Offline Analysis - Signal Detection

| + | * [[BCI Projects]] |

| − | **Plot R^2 for all Channels/Frequency Pairs

| + | == 2013 Upgrade work-around == |

| − | **[[media:AnalyzingBCI2000DatFiles.pdf|Analyzing BCI2000 dat Files]]

| + | * Copy [[media:RobotMicSourceLocator.vi|RobotMicSourceLocator.vi]] to RoboticSensing\MicSourceLocator |

| − | **Start with [[media:OfflineAnalysisSpectral.m|OfflineAnalysisSpectral.m]]. This is the file you will modify for this project. | + | * Copy [[media:MoveWheels (Host).vi|MoveWheels (Host).vi]] to RoboticSensing\Examples\MoveWheels (Host).vi (***NOTE*** Change '_' to ' ') |

| − | ***Open in Matlab. Click on File-Set Path and "Add with subfolders" the Tools folder from the BCI2000 folder

| + | * Copy [[media:MoveRobot.vi|MoveRobot.vi]] to RoboticSensing\MicSourceLocator |

| − | ***Click the Run arrow. Select eeg1_1.dat, eeg1_2.dat and eeg1_3.dat. Click Open

| |

| − | **Remove the DC (average) for all 64 channels

| |

| − | for ch=1:size(signal, 2)

| |

| − | signal(:, ch)=signal(:, ch)-mean(signal(:, ch));

| |

| − | end

| |

| − | **Implement CAR Spatial Filtering using the BCI2000 function carFilt

| |

| − | signal = carFilt(signal,2) ;

| |

| − | **For every channel, compute the power spectrum of each 80-sample segment, advancing 40 samples at a time, for both condition==0 and condition==2, and average them. Create two 3D arrays of PowerSpectra of size (NumFrequencies,NumChannels,NumTrials), one for each condition.

| |

| − | **Compute the R^2 function for all Channel/Frequency pairs | |

| − | **Plot the R^2 values for all Channel/Frequency pairs using the BCI2000 function calc_rsqu. It should look similar but not identical to the graph on the tutorial.

| |

| − | **Useful Matlab Functions:

| |

| − | ***find

| |

| − | ***spectrogram

| |

| − | ***surf

| |

| − | * Project4: BCI Offline Analysis - Signal Classification

| |

| − | ** Combine best Channel/Frequency pairs using eeg1 (use [[media:Lda.m|lda.m]])

| |

| − | ** Collect your own data using the Emotiv Headset | |

| − | ** Combine best Channel/Frequency pairs using your own data

| |

| − | ** Plot the power in the 4 best Channel/Frequency pairs for the action and the rest conditions using the eeg1 data vs. trial

| |

| − | ** Plot the action and rest results of the LDA vs. trial

| |

| − | ** Plot the ROC for the 4 best Channel/Frequency pairs and the LDA

| |

| − | ** [[media:OfflineAnalysisLDASolutionResults.pdf| Results]]

| |

| − | **Useful Matlab Functions:

| |

| − | *** subplot

| |

| − | *** save, load

| |

| − | *** squeeze

| |

| − | * Project 5: Move sbRIO robots based on BCI algorithm using EEG1 data

| |

| − | ** Re-Package your Matlab code into 2 functions that

| |

| − | *** 1) Read the trials from the .dat files and return 1 trial every time it is called.

| |

| − | *** 2) Compute the LDA measure from the current trial.

| |

| − | ** Test these new functions in a for loop in Matlab and make sure your LDA results match the results from before

| |

| − | ** Call your Matlab functions from inside BCI.vi and move the robots every time the action is detected. Verify that you get the number of errors you expected and that the LDA calculation matches the earlier results.

| |

| − | <!--

| |

| − | SP2010

| |

| − | * Project5: Pick one of the following projects - due last day of class:

| |

| − | ** Move robots based on the eeg1 and your own eeg data using the frequency/channel pairs from the R^2 analysis and the threshold from the ROC curve. Use a similar style to the MicSourceLocator code where you select between simulation and real data. If you have time, implement the real data portion. | |

| − | *** LabVIEW hints: | |

| − | **** Use a Matlab Script Node to call load_bci_dat and send out one rest or action trial for every iteration of the main loop. | |

| − | **Record 3 more sessions of the fist clenching experiment and determine an appropriate training/testing method. Also, look at all possible combinations of rest and action for the eeg1 data set and your own data. Compare the performance. Also, consider the entire class's data to try to find universal measures (combined with LDA) that work.

| |

| − | ** Implement a computationally less intense way to compute the LDA model for the 4 frequency/channel pairs. Show that the performance doesn't change based on the R^2/ROC analysis using your computational method. This is important if you want to implement BCI on an embedded platform. | |

| − | -->

| |
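The R^2 step in Project3 above can be illustrated for a single Channel/Frequency pair. The sketch below assumes a common definition of R^2, the squared correlation between the per-trial power values and a 0/1 condition label. The function name `r_squared` and the sample numbers are hypothetical, and this is not claimed to match the exact implementation of the BCI2000 function calc_rsqu.

```python
def r_squared(rest, action):
    """Squared correlation between feature values and their 0/1 condition labels."""
    values = list(rest) + list(action)
    labels = [0.0] * len(rest) + [1.0] * len(action)
    n = len(values)
    mean_v = sum(values) / n
    mean_l = sum(labels) / n
    cov = sum((v - mean_v) * (l - mean_l) for v, l in zip(values, labels))
    var_v = sum((v - mean_v) ** 2 for v in values)
    var_l = sum((l - mean_l) ** 2 for l in labels)
    if var_v == 0.0 or var_l == 0.0:
        return 0.0  # no variation, so the condition explains nothing
    return cov * cov / (var_v * var_l)


# Hypothetical per-trial power values for one Channel/Frequency pair.
rest_power = [1.0, 2.0]
action_power = [3.0, 4.0]
score = r_squared(rest_power, action_power)
```

A value near 1 means the power at that Channel/Frequency pair separates the rest and action trials well; a value near 0 means it does not. Repeating the calculation over every pair gives the values to plot.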